You know how we're always telling out non-technical non-gender-specific spouses and parents to be safe and careful online? You know how we teach non-technical friends about the little lock in the browser and making sure that their bank's little lock turned green?

HTTPS & SSL doesn't mean "trust this." It means "this is private." You may be having a private conversation with Satan.

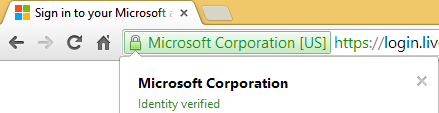

— Scott Hanselman (@shanselman) April 4, 2012Well, we know that HTTPS and SSL don't imply trust, they imply (some) privacy. But we have some cues, at least, and after many years while a good trustable UI isn't there, at least web browsers TRY to expose information for technical security decisions. Plus, bad guys can't spell.

But what about mobile apps?

I download a new 99 cent app and perhaps it wants a name and password. What standard UI is there to assure me that the transmission is secure? Do I just assume?

What about my big reliable secure bank? Their banking app is secure, right? If they use SSL, that's cool, right? Well, are they sure who they are talking too?

OActive Labs researcher Ariel Sancheztested 40 mobile banking apps from the "top 60 most influential banks in the world."

40% of the audited apps did not validate the authenticity of SSL certificates presented. This makes them susceptible to man-in-the-middle (MiTM) attacks.

Many of the apps (90%) contained several non-SSL links throughout the application. This allows an attacker to intercept the traffic and inject arbitrary JavaScript/HTML code in an attempt to create a fake login prompt or similar scam.

If I use an app to log into another service, what assurance is there that they aren't storing my password in cleartext?

It is easy to make mistakes such as storing user data (passwords/usernames) incorrectly on the device, in the vast majority of cases credentials get stored either unencrypted or have been encoded using methods such as base64 encoding (or others) and are rather trivial to reverse,” says Andy Swift, mobile security researcher from penetration testing firm Hut3.

I mean, if Starbucks developers can't get it right (they stored your password in the clear, on your device) then how can some random Jane or Joe Developer? What about cleartext transmission?

"This mistake extends to sending data too, if developers rely on the device too much it becomes quite easy to forget altogether about the transmission of the data. Such data can be easily extracted and may include authentication tokens, raw authentication data or personal data. At the end of the day if not investigated, the end user has no idea what data the application is accessing and sending to a server somewhere." - Andy Swift

I think that it's time for operating systems and SDKs to start imposing much more stringent best practices. Perhaps we really do need to move to an HTTPS Everywhere Internet as the Electronic Frontier Foundation suggests.

Transmission security doesn't mean that bad actors and malware can't find their way into App Stores, however. Researchers have been able to develop, submit, and have approved bad apps in the iOS App Store. I'm sure other stores have the same problems.

The NSA has a 37 page guide on how to secure your (iOS5) mobile device if you're an NSA employee, and it mostly consists of two things: Check "secure or SSL" for everything and disable everything else.

What do you think? Should App Stores put locks or certification badges on "secure apps" or apps that have passed a special review? Should a mobile OS impose a sandbox and reject outgoing non-SSL traffic for a certain class of apps? Is it too hard to code up SSL validation checks? '

Whose problem is this? I'm pretty sure it's not my Dad's.

Sponsor: Big thanks to Red Gate for sponsoring the blog feed this week! Easy release management: Deploy your SQL Server databases in a single, repeatable process with Red Gate’s Deployment Manager. There’s a free Starter edition, so get started now!

© 2014 Scott Hanselman. All rights reserved.