Azure Security Center helps customers deal with a myriad of threats using advanced analytics backed by global threat intelligence. In addition, a team of security researchers often works directly with customers to gain insight into security incidents affecting Microsoft Azure customers, with the goal of constantly improving Security Center detection and alerting capabilities.

In the previous blog post "How Azure Security Center helps reveal a Cyberattack", security researchers detailed the stages of one real-world attack campaign that began with a brute force attack detected by Security Center and the steps taken to investigate and remediate the attack. In this post, we’ll focus on an Azure Security Center detection that led researchers to discover a ring of mining activity, which made use of a well-known bitcoin mining algorithm named Cryptonight.

Before we get into the details, let’s quickly explain some terms that you’ll see throughout this blog. “Bitcoin miners” are a special class of software that uses mining algorithms to generate or “mine” bitcoins, which are a form of digital currency. Mining software is often flagged as malicious because it hijacks system hardware resources like the Central Processing Unit (CPU) or Graphics Processing Unit (GPU), as well as the network bandwidth of an affected host. Cryptonight is one such mining algorithm, and it relies specifically on the host’s CPU. In our investigations, we’ve seen bitcoin miners installed through a variety of techniques, including malicious downloads, emails with malicious links, attachments downloaded by already-installed malware, peer-to-peer file sharing networks, and cracked installers/bundlers.

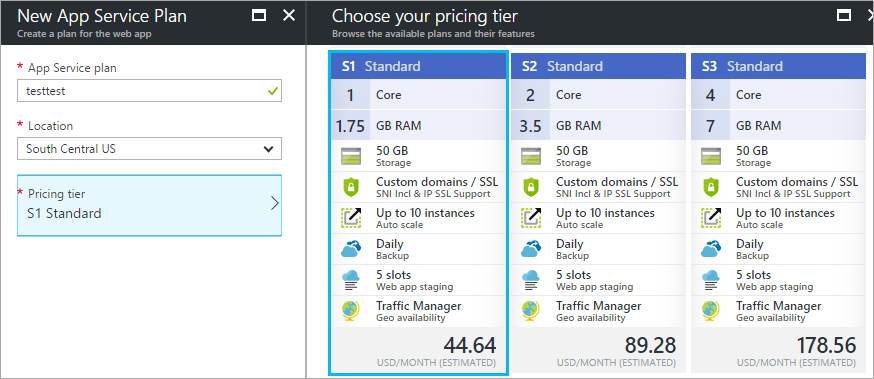

Initial Azure Security Center alert details

Our initial investigation started when Azure Security Center detected suspicious process execution and created an alert like the one below. The alert provided details such as date and time of the detected activity, affected resources, subscription information, and included a link to a detailed report about hacker tools like the one detected in this case.

We began a deeper investigation, which revealed that the initial compromise came through a suspicious download detected as “HackTool: Win32/Keygen”. We suspect one of the administrators on the box was trying to download tools that are usually used to patch or “crack” software keys. Malware is frequently installed along with these tools, giving attackers a backdoor and access to the box.

- Based on our log analysis, the attack began with the creation of a user account named “*server$”.

- The “*server$” account then created a scheduled task called “ngm”. This task launched a batch script named “kit.bat” located in the “C:\Windows\Temp\ngmtx” folder.

- We then observed a process named “servies.exe” being launched with Cryptonight-related parameters.

- Note: ‘bond007.01’ represents the bitcoin user’s account behind this activity and ‘x’ represents the password.

Two days later, we observed the same activity with different file names. In the screenshot below, sst.bat has now replaced kit.bat and mstdc.exe has replaced servies.exe. This same cycle of batch file and process execution was observed periodically.

These .bat scripts appear to be used for making connections to the crypto net pool (XCN or Shark coin). They are launched by a scheduled task that restarts these connections approximately every hour.
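The scheduled-task pattern described above (a task running a batch script out of a Temp folder) lends itself to a simple triage heuristic. The following is a minimal sketch, not the detection logic Security Center uses. The function name `is_suspicious_task_action`, the extension list, and the idea of feeding it task action paths exported from the host are all assumptions made for illustration. The example path assumes the folder from the incident is `C:\Windows\Temp\ngmtx`.

```python
from pathlib import PureWindowsPath

# Hypothetical list of script extensions worth a second look when they
# are launched by a scheduled task. Adjust to your environment.
SCRIPT_EXTENSIONS = {".bat", ".cmd", ".vbs", ".ps1"}


def is_suspicious_task_action(command_path: str) -> bool:
    """Flag a scheduled-task action that runs a script from a Temp folder.

    This is a heuristic only: legitimate installers sometimes do the same,
    so a hit means "investigate", not "malicious".
    """
    path = PureWindowsPath(command_path)
    # Look at every directory component, ignoring the file name itself.
    in_temp = any(part.lower() in ("temp", "tmp") for part in path.parts[:-1])
    return in_temp and path.suffix.lower() in SCRIPT_EXTENSIONS


if __name__ == "__main__":
    # Path assumed from the incident description.
    print(is_suspicious_task_action(r"C:\Windows\Temp\ngmtx\kit.bat"))
    print(is_suspicious_task_action(r"C:\Windows\System32\msdtc.exe"))
```

A check like this would typically run over task definitions collected from a host, with any hits reviewed alongside the account that created the task.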

Additional Observation: The downloaded executables used for connecting to the bitcoin service and generating the bitcoins are renamed from the original, 32.exe or 64.exe, to “mstdc.exe” and “servies.exe” respectively. These executables’ naming schemes are based on an old technique used by attackers trying to hide malicious binaries in plain sight. The technique attempts to make files look like legitimate, benign-sounding Windows filenames.

- Mstdc.exe: “mstdc.exe” looks like “msdtc.exe”, which is a legitimate executable on Windows systems: the Microsoft Distributed Transaction Coordinator, required by various applications such as Microsoft Exchange or SQL Server installed in clusters.

- Servies.exe: Similarly, “services.exe” is the legitimate Service Control Manager (SCM), a special system process under the Windows NT family of operating systems that starts, stops, and interacts with Windows service processes. Here again, attackers are trying to hide by using a similar-looking binary name. “Servies.exe” and “services.exe” look very similar, don’t they? It is an effective tactic.
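The lookalike naming described above can be checked mechanically by comparing process names against a list of known-good Windows binaries. The sketch below uses the optimal string alignment distance, which counts a swap of two adjacent characters as a single edit, so both “mstdc.exe” (a swap) and “servies.exe” (a dropped letter) land one edit away from their legitimate counterparts. The `KNOWN_GOOD` list, the function names, and the threshold of one edit are assumptions for illustration, not part of any Security Center feature.

```python
def osa_distance(a: str, b: str) -> int:
    """Optimal string alignment distance.

    Counts insertions, deletions, substitutions, and swaps of two
    adjacent characters, each as one edit.
    """
    rows, cols = len(a) + 1, len(b) + 1
    d = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        d[i][0] = i
    for j in range(cols):
        d[0][j] = j
    for i in range(1, rows):
        for j in range(1, cols):
            cost = 0 if a[i - 1] == b[j - 1] else 1
            d[i][j] = min(
                d[i - 1][j] + 1,         # deletion
                d[i][j - 1] + 1,         # insertion
                d[i - 1][j - 1] + cost,  # substitution or match
            )
            # Adjacent transposition, e.g. "td" versus "dt".
            if i > 1 and j > 1 and a[i - 1] == b[j - 2] and a[i - 2] == b[j - 1]:
                d[i][j] = min(d[i][j], d[i - 2][j - 2] + 1)
    return d[-1][-1]


# Hypothetical, deliberately short allow-list of legitimate binary names.
KNOWN_GOOD = ("msdtc.exe", "services.exe", "svchost.exe", "lsass.exe")


def find_lookalike(name, known=KNOWN_GOOD, max_distance=1):
    """Return the legitimate name that `name` imitates, or None.

    An exact match (distance 0) is not a lookalike and is skipped.
    """
    candidate = name.lower()
    for legit in known:
        if 0 < osa_distance(candidate, legit) <= max_distance:
            return legit
    return None


if __name__ == "__main__":
    for observed in ("mstdc.exe", "servies.exe", "services.exe"):
        print(observed, "->", find_lookalike(observed))
```

A name match alone is weak evidence. In practice it would be combined with the file path, signer, and parent process, since the genuine binaries run from the Windows system directory.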

As we did our timeline log analysis, we noted other activity, including wscript.exe using the “VBScript.Encode” script engine to execute ‘test.zip’.

On extraction, it revealed an ‘iissstt.dat’ file that was communicating with an IP address in Korea. The ‘mofcomp.exe’ command appears to be registering the file iisstt.dat with WMI. The mofcomp.exe compiler parses a file containing MOF statements and adds the classes and class instances defined in the file to the WMI repository.
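The wscript.exe observation above points at a pattern that is easy to search for in process creation logs: a Windows script host started with an explicit `//E:` engine option, which lets it run a file whose extension is not a script extension. The sketch below is one way to pull the forced engine name out of a command line. The exact command line from this incident is not reproduced in this post, so the sample string in the example is an assumption, as are the function and constant names.

```python
import re

# The //E:<engine> option tells wscript.exe or cscript.exe which script
# engine to use regardless of the file extension.
ENGINE_FLAG = re.compile(r"//e:(\S+)", re.IGNORECASE)
SCRIPT_HOSTS = ("wscript.exe", "cscript.exe")


def forced_engine(command_line: str):
    """Return the engine named by //E: if a script host is invoked, else None."""
    lowered = command_line.lower()
    if not any(host in lowered for host in SCRIPT_HOSTS):
        return None
    match = ENGINE_FLAG.search(command_line)
    return match.group(1) if match else None


if __name__ == "__main__":
    # Illustrative command line only; not copied from the incident logs.
    sample = "wscript.exe //E:VBScript.Encode test.zip"
    print(forced_engine(sample))
```

A forced engine combined with an unexpected extension such as .zip or .dat is a reasonable trigger for a closer look at what the file contains.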

Recommended remediation and mitigation steps

The initial compromise was the result of malware installation through cracked installers/bundlers, which led to complete compromise of the machine. Given that, our first recommendation was to rebuild the machine if possible. However, with the understanding that this sometimes cannot be done immediately, we recommend implementing the following remediation steps:

1. Password Policies: Reset passwords for all users of the affected host and ensure password policies meet best practices.

2. Defender Scan: Run a full antimalware scan using Microsoft Antimalware or another solution, which can flag potential malware.

3. Software Update Consideration: Ensure the OS and applications are being kept up to date. Azure Security Center can help you identify virtual machines that are missing critical and security OS updates.

4. OS Vulnerabilities & Version: Align your OS configurations with the recommended rules for the most hardened version of the OS. For example, do not allow passwords to be saved. Update the operating system (OS) version for your Cloud Service to the most recent version available for your OS family. Azure Security Center can help you identify OS configurations that do not align with these recommendations, as well as Cloud Services running an outdated OS version.

5. Backup: Regular backups are important not only for the software update management platform itself, but also for the servers that will be updated. To ensure that you have a rollback configuration in place in case an update fails, make sure to back up the system regularly.

6. Avoid Usage of Cracked Software: Using cracked software introduces unwanted risk into your home or business by way of malware and other threats that are associated with pirated software. Microsoft highly recommends avoiding cracked software and following the legal software policy set by your organization.

More information can be found at:

- Educate yourself on software piracy risk.

- Learn more by reading “SIRv13: Be careful where you go looking for software and media files”.

7. Email Notification: Finally, configure Azure Security Center to send email notifications when threats like these are detected.

- Click the Policy tile in the Prevention section.

- On the Security Policy blade, pick the subscription for which you want to configure email alerts.

- This brings you to the Security Policy blade. Click the Email Notifications option to configure email alerting.

An email alert from Azure Security Center will look like the one below.

To learn more about Azure Security Center, see the following:

- Azure Security Center detection capabilities — Learn about Azure Security Center’s advanced detection capabilities.

- Managing and responding to security alerts in Azure Security Center — Learn how to manage and respond to security alerts.

- Managing security recommendations in Azure Security Center — Learn how recommendations help you protect your Azure resources.

- Security health monitoring in Azure Security Center — Learn how to monitor the health of your Azure resources.

- Monitoring partner solutions with Azure Security Center — Learn how to monitor the health status of your partner solutions.

- Azure Security Center FAQ — Find frequently asked questions about using the service.

- Azure Security blog — Get the latest Azure security news and information.