![Packages and Containers]()

Today we are releasing a set of providers for ASP.NET 4.7.1 that make it easier than ever to deploy your applications to cloud services and take advantage of cloud-scale features. This release includes a new CosmosDb provider for session state and a collection of configuration builders.

A Package-First Approach

With previous versions of the .NET Framework, new features were provided “in the box” and shipped with Windows and with new versions of the entire framework. This meant that you could be assured that your providers and capabilities were available on every current version of Windows. It also meant that you had to wait for a new version of Windows to get new .NET Framework features. Starting with .NET Framework 4.7, we have adopted a strategy of delivering the more abstract features with the framework and deploying providers through the NuGet package manager service. There are no concrete ConfigurationBuilder classes in .NET Framework 4.7.1, and we are now making several available for your use from the NuGet.org repository. In this way, we can update and deploy new ConfigurationBuilders without requiring a fresh install of Windows or the .NET Framework.

ConfigurationBuilders Simplify Application Management

In .NET Framework 4.7.1 we introduced the concept of ConfigurationBuilders. ConfigurationBuilders are objects that allow you to inject application configuration into your .NET Framework 4.7.1 application and continue to use the familiar ConfigurationManager interface to read those values. Sure, you could always write your configuration files to read other config files from disk, but what if you wanted to apply configuration from environment variables? What if you wanted to read configuration from a service, like Azure Key Vault? To work with those configuration sources, you would need to rewrite some non-trivial amount of your application to consume these services.

With ConfigurationBuilders, no code changes are necessary in your application. You simply add references from your web.config or app.config file to the ConfigurationBuilders you want to use, and your application will start consuming those sources without updating your configuration files on disk. One form of ConfigurationBuilder is the KeyValueConfigBuilder, which matches a key to a value from an external source and adds that pair to your configuration. All of the ConfigurationBuilders we are releasing today support this key-value approach to configuration. Let’s take a look at using one of these new ConfigurationBuilders, the EnvironmentConfigBuilder.

When you install any of our new ConfigurationBuilders into your application, we automatically allocate the appropriate new configSections in your app.config or web.config file as shown below:
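As a rough sketch of what the package install adds, assuming the EnvironmentConfigBuilder package: the `configBuilders` section declaration here follows the pattern used by .NET Framework 4.7.1, but the exact attributes and version numbers written to your file may differ.

```xml
<configuration>
  <configSections>
    <!-- Registers the configBuilders section; the handler type lives in System.Configuration -->
    <section name="configBuilders"
             type="System.Configuration.ConfigurationBuildersSection, System.Configuration, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b03f5f7f11d50a3a"
             restartOnExternalChanges="false"
             requirePermission="false" />
  </configSections>

  <configBuilders>
    <builders>
      <!-- One entry per ConfigurationBuilder; "Environment" is the name other sections refer to -->
      <add name="Environment"
           type="Microsoft.Configuration.ConfigurationBuilders.EnvironmentConfigBuilder, Microsoft.Configuration.ConfigurationBuilders.Environment, Version=1.0.0.0, Culture=neutral" />
    </builders>
  </configBuilders>
</configuration>
```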

The new “builders” section contains information about the ConfigurationBuilders you wish to use in your application. You can declare any number of ConfigurationBuilders and apply the settings they retrieve to any section of your configuration. Let’s look at applying our environment variables to the appSettings of this configuration. You specify which ConfigurationBuilders to apply to a section by adding the configBuilders attribute to that section and giving the name of the defined ConfigurationBuilder to apply, in this case “Environment”:

    <appSettings configBuilders="Environment">
      <add key="COMPUTERNAME" value="VisualStudio" />
    </appSettings>

The COMPUTERNAME is a common environment variable set by the Windows operating system that we can use to replace the VisualStudio setting defined here. With the below ASPX page in our project, we can run our application and see the following results.

![AppSettings Reported in the Browser]()

AppSettings Reported in the Browser

The COMPUTERNAME setting is overwritten by the environment variable. That’s a nice start, but what if I want to read ALL the environment variables and add them as application settings? You can specify Greedy Mode for the ConfigurationBuilder and it will read all environment variables and add them to your appSettings:

    <add name="Environment" mode="Greedy"
         type="Microsoft.Configuration.ConfigurationBuilders.EnvironmentConfigBuilder, Microsoft.Configuration.ConfigurationBuilders.Environment, Version=1.0.0.0, Culture=neutral" />

There are several Modes that you can apply to each of the ConfigurationBuilders we are releasing today:

- Greedy – Read all settings and add them to the section the ConfigurationBuilder is applied to

- Strict – (default) Update only those settings where the key matches the configuration source’s key

- Expand – Operate on the raw XML of the configuration section and do a string replace where the configuration source’s key is found.

The Greedy and Strict options only apply when operating on AppSettings or ConnectionStrings sections. Expand can perform its string replacement on any section of your config file.
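To illustrate Expand mode, here is a minimal sketch. It assumes the `${KEY}` token syntax described in the library's later documentation, which may not match this preview exactly; the builder name `EnvironmentExpand` and the `Banner` setting are hypothetical.

```xml
<configBuilders>
  <builders>
    <!-- Hypothetical builder name; mode="Expand" works on the raw XML of the section -->
    <add name="EnvironmentExpand" mode="Expand"
         type="Microsoft.Configuration.ConfigurationBuilders.EnvironmentConfigBuilder, Microsoft.Configuration.ConfigurationBuilders.Environment, Version=1.0.0.0, Culture=neutral" />
  </builders>
</configBuilders>

<appSettings configBuilders="EnvironmentExpand">
  <!-- Assumed token form: ${COMPUTERNAME} is replaced with the environment variable's value -->
  <add key="Banner" value="Running on ${COMPUTERNAME}" />
</appSettings>
```

Because the replacement happens on the section text rather than on parsed key-value pairs, the same approach is what allows Expand to be used on sections other than appSettings and connectionStrings.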

You can also specify prefixes for your settings by adding the prefix attribute. This allows you to read only settings that start with a known prefix. If you only want to add environment variables that start with “APPSETTING_”, you can update your config file like this:

    <add name="Environment"
         mode="Greedy" prefix="APPSETTING_"
         type="Microsoft.Configuration.ConfigurationBuilders.EnvironmentConfigBuilder, Microsoft.Configuration.ConfigurationBuilders.Environment, Version=1.0.0.0, Culture=neutral" />

Finally, even though the APPSETTING_ prefix is a handy way to read only those settings, you may not want your setting to actually be called “APPSETTING_Setting” in code. You can use the stripPrefix attribute (default value is false) to omit the prefix when the value is added to your configuration:
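A minimal sketch of the builder entry with stripPrefix turned on, reusing the prefix and type from the previous example:

```xml
<!-- With stripPrefix="true", an environment variable named APPSETTING_Setting
     is added to appSettings under the key "Setting" -->
<add name="Environment"
     mode="Greedy" prefix="APPSETTING_" stripPrefix="true"
     type="Microsoft.Configuration.ConfigurationBuilders.EnvironmentConfigBuilder, Microsoft.Configuration.ConfigurationBuilders.Environment, Version=1.0.0.0, Culture=neutral" />
```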

![Greedy AppSettings with Prefixes Stripped]()

Greedy AppSettings with Prefixes Stripped

Notice that the COMPUTERNAME was not replaced in this mode. You can add a second EnvironmentConfigBuilder to read and apply settings by adding another add statement to the configBuilders section and adding an entry to the configBuilders attribute on the appSettings:
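One way this could look is sketched here. The second builder's name, `PrefixedEnvironment`, is hypothetical, and the sketch assumes the configBuilders attribute accepts a comma-separated list of builder names.

```xml
<configBuilders>
  <builders>
    <!-- Default (Strict) mode: only replaces keys already present, such as COMPUTERNAME -->
    <add name="Environment"
         type="Microsoft.Configuration.ConfigurationBuilders.EnvironmentConfigBuilder, Microsoft.Configuration.ConfigurationBuilders.Environment, Version=1.0.0.0, Culture=neutral" />
    <!-- Hypothetical second entry: greedily adds every APPSETTING_* variable, minus the prefix -->
    <add name="PrefixedEnvironment"
         mode="Greedy" prefix="APPSETTING_" stripPrefix="true"
         type="Microsoft.Configuration.ConfigurationBuilders.EnvironmentConfigBuilder, Microsoft.Configuration.ConfigurationBuilders.Environment, Version=1.0.0.0, Culture=neutral" />
  </builders>
</configBuilders>

<!-- Both builders are applied to the same section -->
<appSettings configBuilders="Environment,PrefixedEnvironment">
  <add key="COMPUTERNAME" value="VisualStudio" />
</appSettings>
```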

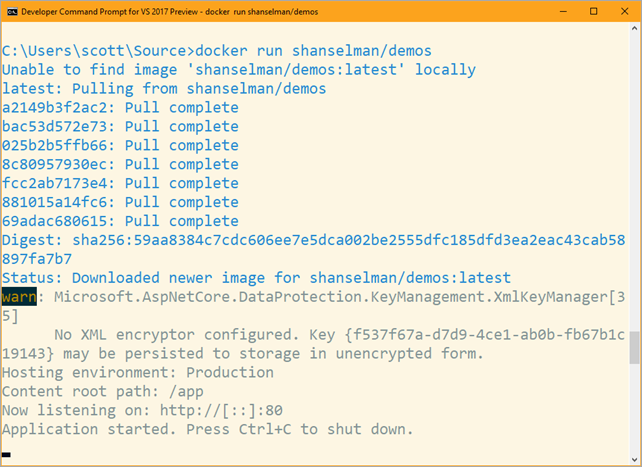

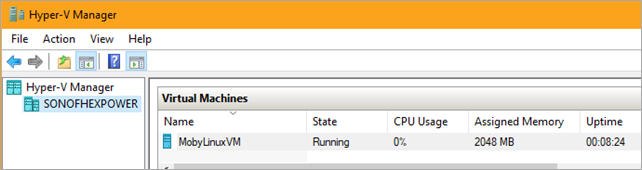

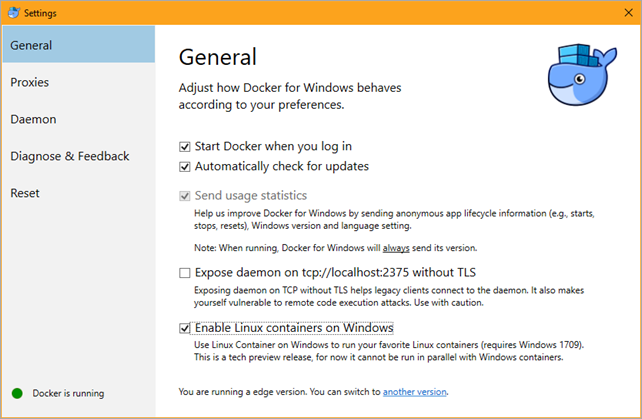

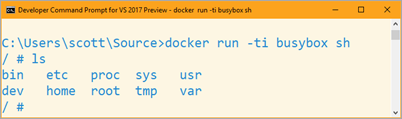

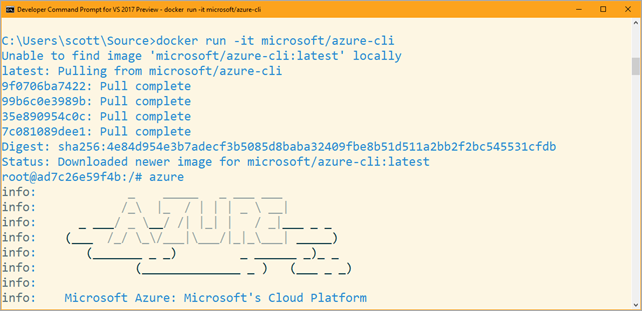

Try using the EnvironmentConfigBuilder from inside a Docker container to inject configuration specific to the running instances of your application. We’ve found that this significantly improves the ability to deploy existing applications in containers without having to rewrite your code to read from alternate configuration sources.

Secure Configuration with Azure Key Vault

We are happy to include a ConfigurationBuilder for Azure Key Vault in this initial collection of providers. This ConfigurationBuilder allows you to secure your application using the Azure Key Vault service, without any required login information to access the vault. Add this ConfigurationBuilder to your config file and build an add statement like the following:

    <add name="AzureKeyVault"
         mode="Strict"
         vaultName="MyVaultName"
         type="Microsoft.Configuration.ConfigurationBuilders.AzureKeyVaultConfigBuilder, Microsoft.Configuration.ConfigurationBuilders.Azure" />

If your application is running on an Azure service that has Managed Service Identity (MSI) enabled, this is all you need to read configuration from the vault and add it to your application. If you are not running on a service with MSI, you can still use the vault by adding the following attributes:

- clientId – the Azure Active Directory application key that has access to your key vault

- clientSecret – the Azure Active Directory application secret that corresponds to the clientId
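A sketch of the same builder entry with those two attributes added, for the case where MSI is not available. The attribute values are placeholders, not real identifiers.

```xml
<!-- clientId / clientSecret values below are placeholders for your own
     Azure Active Directory application credentials -->
<add name="AzureKeyVault"
     mode="Strict"
     vaultName="MyVaultName"
     clientId="- YOUR CLIENT ID -"
     clientSecret="- YOUR CLIENT SECRET -"
     type="Microsoft.Configuration.ConfigurationBuilders.AzureKeyVaultConfigBuilder, Microsoft.Configuration.ConfigurationBuilders.Azure" />
```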

The same mode, prefix, and stripPrefix features described previously are available for use with the AzureKeyVaultConfigBuilder. You can now configure your application to grab that secret database connection string from the keyvault “conn_mydb” setting with a config file that looks like this:
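A minimal sketch of such a config file, assuming the builder entry shown earlier and a vault named `MyVaultName`. The placeholder connectionString value is hypothetical and is only there so the key exists for Strict mode to match.

```xml
<configBuilders>
  <builders>
    <add name="AzureKeyVault"
         mode="Strict"
         vaultName="MyVaultName"
         type="Microsoft.Configuration.ConfigurationBuilders.AzureKeyVaultConfigBuilder, Microsoft.Configuration.ConfigurationBuilders.Azure" />
  </builders>
</configBuilders>

<connectionStrings configBuilders="AzureKeyVault">
  <!-- In Strict mode the entry named conn_mydb is matched against the vault
       and its connectionString is replaced with the stored secret -->
  <add name="conn_mydb" connectionString="- REPLACED FROM KEY VAULT -" />
</connectionStrings>
```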

You can use other vaults by using the uri attribute instead of the vaultName attribute, and providing the URI of the vault you wish to connect to. More information about getting started configuring key vault is available online.
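For example, the builder entry might be written with uri in place of vaultName, as in this sketch. The URI shown is a hypothetical placeholder, so substitute the address of your own vault.

```xml
<!-- uri replaces vaultName; the address below is a placeholder -->
<add name="AzureKeyVault"
     mode="Strict"
     uri="https://MyVaultName.vault.azure.net"
     type="Microsoft.Configuration.ConfigurationBuilders.AzureKeyVaultConfigBuilder, Microsoft.Configuration.ConfigurationBuilders.Azure" />
```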

Other Configuration Builders Available

Today we are introducing five configuration builders as a preview for you to use and extend:

This new collection of ConfigurationBuilders should make it easier than ever to secure your applications with Azure Key Vault, or to orchestrate your applications in containers, because you no longer need to embed configuration or write extra code to handle deployment settings.

We plan to fully release the source code and make these providers open source prior to removing the preview tag from them.

Store SessionState in CosmosDb

Today we are also releasing a session state provider for Azure Cosmos DB. The globally available CosmosDb service means that you can geographically load-balance your ASP.NET application, and your users will always maintain the same session state no matter which server they are connected to. This async provider is available as a NuGet package and can be added to your project by installing that package and updating the session state provider in your web.config as follows:

    <connectionStrings>
      <add name="myCosmosConnString"
           connectionString="- YOUR CONNECTION STRING -" />
    </connectionStrings>

    <sessionState mode="Custom" customProvider="cosmos">
      <providers>
        <add name="cosmos"
             type="Microsoft.AspNet.SessionState.CosmosDBSessionStateProviderAsync, Microsoft.AspNet.SessionState.CosmosDBSessionStateProviderAsync"
             connectionStringName="myCosmosConnString" />
      </providers>
    </sessionState>

Summary

We’re continuing to innovate and update .NET Framework and ASP.NET. These new providers should make it easier to deploy your applications to Azure or make use of containers without having to rewrite your application. Update your applications to .NET Framework 4.7.1 and start using these new providers to make your configuration more secure, and to start using CosmosDb for your session state.
