DevOps and VSTS Videos from Connect(); 2017

VSTS Analytics OData now publicly available

Microsoft showcases latest industrial IoT innovations at SPS 2017

We are excited to extend our lead in standards-based Industrie 4.0 cloud solutions using the industrial interoperability standard OPC UA, with several new product announcements at SPS IPC Drives 2017 in Nuernberg, Europe’s leading industrial automation exhibition, which takes place next week.

We continue to be the only cloud provider that offers both OPC UA client/server as well as the upcoming (in OPC UA version 1.04) Publish/Subscribe communication pattern to the cloud and back with open-source modules for easy connection to existing machines, without requiring changes to these machines and without requiring opening the on-premises firewall. We achieve this through the two Azure IoT Edge modules OPC Proxy and OPC Publisher, which are available open-source on GitHub and as Docker containers on DockerHub.

As previously announced, we have now contributed an open-source OPC UA Global Discovery Server (GDS) and Client to the OPC Foundation GitHub. This contribution now brings us close to the 4.5 million source lines of contributed code landmark, keeping us in the lead as largest contributor of open-source software to the OPC Foundation. This server can be run in a container and used for self-hosting in the OT network.

Additionally at SPS, Microsoft will demo its commercial, fully Azure IoT integrated version of the GDS and accompanying client at the OPC Foundation booth. This version runs as part of the Azure IoT Edge offering made available to the public last week.

We have continued to release monthly updates to our popular open-source Azure IoT Suite Connected Factory preconfigured solution which we launched at Hannover Messe this year. Most recently, we have updated the look and feel to be in line with the new set of preconfigured solutions currently being released. We will continue to release new versions of the Connected Factory on a monthly basis with improvements and new features, so check them out on a regular basis.

Our OPC UA Gateway program also keeps growing rapidly. Since we launched the program just six months ago at Hannover Messe, we now have 13 partners signed up including Softing, Unified Automation, Hewlett Packard Enterprise, Kepware, Cisco, Beckhoff, Moxa, Advantech, Nexcom, Prosys OPC, MatrikonOPC, Kontron, and Hilscher.

Furthermore, we are excited to announce that the Industrial IoT Starter Kit, previously announced at OPC Day Europe, is now available to order online from our partner Softing. The kit empowers companies to securely link their production line from the physical factory floor to the Microsoft Azure IoT Suite Connected Factory in less than one day. This enables the management, collection, and analysis of OPC UA telemetry data in a centralized way to gain valuable business insights immediately and improve operational efficiency. As with all our Industrial IoT products, this kit uses OPC UA, but also comes with a rich PLC protocol translation software from Softing called dataFEED OPC Suite. It comes with industry-grade hardware from Hewlett Packard Enterprise in the form of the HPE GL20 IoT Gateway. Swing by the Microsoft booth to check it out and get the chance to try it out for 6 months in your own industrial installation.

Stop by our booth in Hall 6, as well as the OPC Foundation booth in Hall 7 to see all of this for yourself!

Learnings from 5 months of R-Ladies Chicago (Part 1)

by Angela Li, founder and organizer of R-Ladies Chicago. This article also appears on Angela's personal blog.

It’s been a few months since I launched R-Ladies Chicago, so I thought I’d sit down and write up some things that I’ve learned in the course of organizing this wonderful community. Looking back, there are a few things I wish someone told me at the beginning of the process, which I’ll share here over the course of a few weeks. The hope is that you can use these learnings to organize tech communities in your own area.

Chicago #RLadies ❤️ R! Group photo from tonight's Meetup @MicrosoftR (thanks @revodavid for the shirts) @RLadiesGlobal @d4tagirl #rstats pic.twitter.com/VUiym1LlID

— R-Ladies Chicago (@RLadiesChicago) October 27, 2017

Note: some of this information may be more specific to Chicago, a major city in the US that has access to many resources, or to R-Ladies as a women’s group in particular. I tried to write down more generalizable takeaways. If you’re interested in starting a tech meetup in your community, R-related or not, use this series as a resource!

Part 1: Starting the Meetup

Sometimes, it just takes someone who’s willing:

- The reason R-Ladies didn’t exist in Chicago before July wasn’t because there weren’t women using R in Chicago, or because there wasn’t interest in the community in starting a Meetup. It was just that no one had gotten around to it. I had a few people after our first Meetup say to me that they’d been interested in an R-Ladies Meetup for a long time, but had been waiting for someone to organize it. Guess what…that person could be you!

- I also think people might have been daunted by the prospect of starting a Chicago Meetup, because it’s such a big city and it’s intimidating to organize a group without already knowing a few people. If you’re in a smaller place, consider it a benefit that you're drawing from a smaller, tight-knit community of folks who use R.

- If you can overcome the hurdle of starting something, you’ll be amazed at how many people will support you. Start the group and folks will come.

You don’t have to be the most skilled programmer to lead a successful Meetup:

- This is a super harmful mindset, and something that plagues women in tech in particular. I struggled with this a lot at the outset: “I’m not qualified to lead this. There’s no way I can explain in great detail EVERY SINGLE THING about R to someone else. Heck, I haven’t used R for 10+ years. Someone else would probably be better at this than I am.”

- The thing is, the skillset that it takes to organize a community is vastly different than the skillset that it takes to write code. You’re thinking about how to welcome beginners, encourage individuals to contribute, teach new skills, and form relationships between people. The very things that you believe make you “less qualified” as a programmer are the exact things that are valuable in this context — you understand the struggles of learning R, because you were recently going through that process yourself. Or you're more accessible for someone to ask questions to, because they aren’t intimidated by you.

- Being able to support and encourage your fellow R users is something you can do no matter what your skill level is. There are women in our group who have scads more experience in R than I do. That’s fantastic! My job as an organizer is to showcase and use the skills of the individuals in our community, and if I can get these amazing women to lead workshops, that’s less work for me AND great for them! Pave the way for people to do awesome stuff.

Get yourself cheerleaders:

- I cannot emphasize enough how important it was to have voices cheering me on as I was setting this up. The women in my office who told me they’d help me set up the first Meetup. My friends who told me they’d come to the first meeting (even if they didn’t use R). The R-Ladies across the globe who were so supportive and excited that a Chicago group was starting. When I doubted myself, there was someone there to encourage me.

- Even better, start a Meetup with a friend and cheer each other on! If I could do this over again, I’d make sure I had co-organizers from the very start. More about this in weeks to come :)

- Especially if you’re starting an R-Ladies group, realize that there’s a wider #rstats and @RLadiesGlobal community in place to support you. Each group is independent and has its own needs and goals, but there are so many people to turn to if you have questions. The beauty of tech communities is that you’re often already connected to people across the globe through online platforms. All you need to know is: you’re not going it alone!

- The R community itself is incredibly supportive, and I’d be remiss if I didn’t mention how much support R-Ladies Chicago has received from David Smith and the team at Microsoft. Not only did they promote the group, but I reached out to David a month or so after starting the group, and he immediately offered to sponsor the group, get swag, and provide space for us. R-Ladies Chicago would be in a different place without Microsoft's generous contributions. I’m grateful for their support of the group as we got off the ground.

Next week: I’ll be talking about Meetup-to-Meetup considerations, or things you should be thinking about in the process of organizing an event for your group!

The UWP Community Toolkit v2.1

We are extremely excited to announce the latest update to the UWP Community Toolkit, version 2.1!

This update builds on top of the previous version and continues to align the toolkit closer to the Windows 10 Fall Creators Update SDK. Thanks to the continued support and help of the community, all packages have been updated to target the Fall Creators Update, several controls, helpers, and extensions have been added or updated, and the documentation and design time experience have been greatly improved.

Below is a quick list of a few of the major updates in this release. Head over to the release notes for a complete overview of what’s new in 2.1.

DockPanel

This release introduces the DockPanel control that provides an easy docking of elements to the left, right, top, bottom or center.

#DockPanel is now part of #UwpToolkit get the pre-release from here https://t.co/ccEz8R6qSa thanks to @metulev & @dotMorten for their review pic.twitter.com/Gfp566kFAE

— Ibraheem Osama (@IbraheemOM) November 2, 2017

HeaderedContentControl and HeaderedItemsControl

There are now two controls, HeaderedContentControl and HeaderedItemsControl that allow content to be easily displayed with a header that can be templated.

<controls:HeaderedContentControl Header="Hello header!">

<Grid Background="Gray">

</Grid>

</controls:HeaderedContentControl>

Connected and Implicit Animation in XAML

There are two new sets of XAML attached properties that enable working with composition animations directly in XAML:

- Implicit animations (including show and hide) can now be directly added to the elements in XAML

<Border extensions:VisualExtensions.NormalizedCenterPoint="0.5">

<animations:Implicit.ShowAnimations>

<animations:TranslationAnimation Duration="0:0:1"

To="0, 100, 0" ></animations:TranslationAnimation>

<animations:OpacityAnimation Duration="0:0:1"

To="1.0"></animations:OpacityAnimation>

</animations:Implicit.ShowAnimations>

</Border>

- Connected animations can now be defined directly on the element in XAML by simply adding the same key on elements on different pages

<!-- Page 1 -->

<Border x:Name="Element" animations:Connected.Key="item"></Border>

<!-- Page 2 -->

<Border x:Name="Element" animations:Connected.Key="item"></Border>

Improved design time experience

Added designer support for controls, including toolbox integration and improved design time experience by placing properties in the proper category in the properties grid with hover tooltip.

Added @VisualStudio Toolbox integration to #UWPToolkit: https://t.co/SZ6Tf3b0cf #DragNDropLikeItsHot pic.twitter.com/G4s73wXUsi

— Morten Nielsen (@dotMorten) August 31, 2017

New SystemInformation properties

The SystemInformation class now includes new properties and methods to make it easier to provide first run (or related) experiences or collect richer analytics.

The #uwptoolkit got some new SystemInformation properties fresh from the oven thanks to @mrlacey. What would you use these for? https://t.co/cFjGWSBxPX pic.twitter.com/Pft6nWbx0M

— Nikola Metulev (@metulev) October 13, 2017

Easy transition to new Fall Creators Update controls

To enable a smooth transition from existing toolkit controls to the new Fall Creators Update controls, the HamburgerMenu and SlidableListItem have new properties to use the NavigationView and SwipeControl respectively when running on Fall Creators Update. Take a look at the documentation on how this works.

Documentation

All documentation is now available at Microsoft docs. In addition, there is new API documentation as part of .NET API Browser.

Built by the Community

This update would not have been possible if it wasn’t for the community support and participation. If you are interested in participating in the development, but don’t know how to get started, check out our “help wanted” issues on GitHub.

As a reminder, although most of the development efforts and usage of the UWP Community Toolkit is for Desktop apps, it also works great on Xbox One, Mobile, HoloLens, IoT and Surface Hub devices. You can get started by following this tutorial, or preview the latest features by installing the UWP Community Toolkit Sample App from the Microsoft Store.

To join the conversation on Twitter, use the #uwptoolkit hashtag.

The post The UWP Community Toolkit v2.1 appeared first on Building Apps for Windows.

Trying out new .NET Core Alpine Docker Images

I blogged recently about optimizing .NET and ASP.NET Docker file sizes. .NET Core 2.0 has previously been built on a Debian image, but today there is a preview image with .NET Core 2.1 nightlies using Alpine. You can read the announcement about this new Alpine preview image here. There's also a good rollup post on .NET and Docker.


They have added two new images:

- 2.1-runtime-alpine

- 2.1-runtime-deps-alpine

Alpine support is part of the .NET Core 2.1 release. .NET Core 2.1 images are currently provided at the microsoft/dotnet-nightly repo, including the new Alpine images. .NET Core 2.1 images will be promoted to the microsoft/dotnet repo when released in 2018.

NOTE: The -runtime-deps- image contains the dependencies needed for a .NET Core application, but NOT the .NET Core runtime itself. This is the image you'd use if your app was a self-contained application that included a copy of the .NET Core runtime. These are apps published with -r [runtimeid]. Most folks will use the -runtime- image that includes the full .NET Core runtime. To be clear:

- The runtime image contains the .NET Core runtime and is intended to run Framework-Dependent Deployed applications - see sample

- The runtime-deps image contains just the native dependencies needed by .NET Core and is intended to run Self-Contained Deployed applications - see sample
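To make that choice concrete, here is a minimal sketch in Python of picking the base image from the deployment style. The repo and tag names come from the announcement above; the helper name `alpine_base_image` and its `self_contained` flag are made up for illustration and are not part of any Docker or .NET tooling.

```python
# Hypothetical helper: the function name and its flag are illustrative only.
# The repo and tag strings are the ones named in the post.
NIGHTLY_REPO = "microsoft/dotnet-nightly"


def alpine_base_image(self_contained: bool) -> str:
    """Return the Alpine base image that matches the deployment style."""
    # Self-contained apps ship their own copy of the runtime, so they only
    # need the native dependencies. Framework-dependent apps need the image
    # that includes the .NET Core runtime.
    tag = "2.1-runtime-deps-alpine" if self_contained else "2.1-runtime-alpine"
    return f"{NIGHTLY_REPO}:{tag}"


print(alpine_base_image(self_contained=False))
print(alpine_base_image(self_contained=True))
```

The returned string is what you would put on the final `FROM` line of a multi-stage Dockerfile.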

It's best with .NET Core to use multi-stage build files, so you have one container that builds your app and one that contains the results of that build. That way you don't end up shipping an image with a bunch of SDKs and compilers you don't need.

NOTE: Read this to learn more about image versions in Dockerfiles so you can pick the right tag and digest for your needs. Ideally you'll pick a docker file that rolls forward to include the latest servicing patches.

Given this Dockerfile, we build with the SDK image, then publish, and the result is about 219 megs.

FROM microsoft/dotnet:2.0-sdk as builder

RUN mkdir -p /root/src/app/dockertest

WORKDIR /root/src/app/dockertest

COPY dockertest.csproj .

RUN dotnet restore ./dockertest.csproj

COPY . .

RUN dotnet publish -c release -o published

FROM microsoft/dotnet:2.0.0-runtime

WORKDIR /root/

COPY --from=builder /root/src/app/dockertest/published .

ENV ASPNETCORE_URLS=http://+:5000

EXPOSE 5000/tcp

CMD ["dotnet", "./dockertest.dll"]

Then I'll save this as Dockerfile.debian and build like this:

> docker build . -t shanselman/dockertestdeb:0.1 -f dockerfile.debian

With a standard ASP.NET app this image ends up being 219 megs.

Now I'll just change one line, and use the 2.1 alpine runtime

FROM microsoft/dotnet-nightly:2.1-runtime-alpine

And build like this:

> docker build . -t shanselman/dockertestalp:0.1 -f dockerfile.alpine

and compare the two:

> docker images | find /i "dockertest"

shanselman/dockertestalp 0.1 3f2595a6833d 16 minutes ago 82.8MB

shanselman/dockertestdeb 0.1 0d62455c4944 30 minutes ago 219MB

Nice. About 83 megs now rather than 219 megs for a Hello World web app. Now the idea of a microservice is more feasible!
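For a sense of scale, here is a small Python sketch that turns the two sizes reported by `docker images` above into a percentage. The helper names (`parse_size_mb`, `percent_smaller`) are my own, and the unit table assumes decimal units, so treat it as back-of-the-envelope arithmetic rather than anything Docker itself provides.

```python
# Assumed decimal conversion factors for the size suffixes docker prints.
UNITS_IN_MB = {"KB": 0.001, "MB": 1.0, "GB": 1000.0}


def parse_size_mb(size: str) -> float:
    """Convert a size string such as '82.8MB' to megabytes."""
    text = size.strip().upper()
    for unit, factor in UNITS_IN_MB.items():
        if text.endswith(unit):
            return float(text[: -len(unit)]) * factor
    raise ValueError(f"unrecognized size: {size!r}")


def percent_smaller(before: str, after: str) -> float:
    """Return how much smaller 'after' is than 'before', in percent."""
    before_mb = parse_size_mb(before)
    after_mb = parse_size_mb(after)
    return (before_mb - after_mb) / before_mb * 100.0


# Sizes taken from the docker images listing in the post.
print(f"{percent_smaller('219MB', '82.8MB'):.1f}% smaller")  # prints "62.2% smaller"
```

So the Alpine-based image is a bit more than 60% smaller than the Debian-based one for this app.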

Please do head over to the GitHub issue here https://github.com/dotnet/dotnet-docker-nightly/issues/500 and offer your thoughts and results as you test these Alpine images. Also, are you interested in a "-debian-slim?" It would be halfway to Alpine but not as heavy as just -debian.

Lots of great stuff happening around .NET and Docker. Be sure to also check out Jeff Fritz's post on creating a minimal ASP.NET Core Windows Container to see how you can squish .NET (full) Framework applications running on Windows containers as well. For example, the Windows Nano Server images are just 93 megs compressed.

Sponsor: Get the latest JetBrains Rider preview for .NET Core 2.0 support, Value Tracking and Call Tracking, MSTest runner, new code inspections and refactorings, and the Parallel Stacks view in debugger.

© 2017 Scott Hanselman. All rights reserved.

Happy Thanksgiving!

Today is Thanksgiving Day here in the US, so we're taking the rest of the week off to enjoy the time with family.

Even if you don't celebrate Thanksgiving, today is still an excellent day to give thanks to the volunteers who have contributed to the R project and its ecosystem. In particular, give thanks to the R Core Group, whose tireless dedication — in several cases over a period of more than 20 years — was and remains critical to the success and societal contributions of the language we use and love: R. You can contribute financially by becoming a Supporting Member of the R Foundation.

Top stories from the VSTS community – 2017.11.24

Because it’s Friday: Trombone Transcription

Yes, I know we said we're taking the Thanksgiving break off, but this was too funny not to share:

I accidentally texted my wife with voice recognition...while playing the trombone pic.twitter.com/tWCPSXbbrO

— Paul The Trombonist (@JazzTrombonist) November 21, 2017

If you're in the US, hope you had a nice Thanksgiving. Everyone, enjoy the weekend and we'll be back (for real, this time) on Monday.

Writing smarter cross-platform .NET Core apps with the API Analyzer and Windows Compatibility Pack

There's a couple of great utilities that have come out in the last few weeks in the .NET Core world that you should be aware of. They are deeply useful when porting/writing cross-platform code.


.NET API Analyzer

First is the API Analyzer. As you know, APIs sometimes get deprecated, or you'll use a method on Windows and find it doesn't work on Linux. The API Analyzer is a Roslyn (remember Roslyn is the name of the C#/.NET compiler) analyzer that's easily added to your project as a NuGet package. All you have to do is add it and you'll immediately start getting warnings and/or squiggles calling out APIs that might be a problem.

Check out this quick example. I'll make a quick console app, then add the analyzer. Note the version is current as of the time of this post. It'll change.

C:\supercrossplatapp> dotnet new console

C:\supercrossplatapp> dotnet add package Microsoft.DotNet.Analyzers.Compatibility --version 0.1.2-alpha

Then I'll use an API that only works on Windows. However, I still want my app to run everywhere.

static void Main(string[] args)

{

Console.WriteLine("Hello World!");

if (RuntimeInformation.IsOSPlatform(OSPlatform.Windows))

{

var w = Console.WindowWidth;

Console.WriteLine($"Console Width is {w}");

}

}

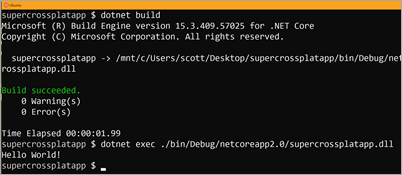

Then I'll "dotnet build" (or run, which implies build) and I get a nice warning that one API doesn't work everywhere.

C:\supercrossplatapp> dotnet build

Program.cs(14,33): warning PC001: Console.WindowWidth isn't supported on Linux, MacOSX [C:\Users\scott\Desktop\supercrossplatapp\supercrossplatapp.csproj]

supercrossplatapp -> C:\supercrossplatapp\bin\Debug\netcoreapp2.0\supercrossplatapp.dll

Build succeeded.

Olia from the .NET Team did a great YouTube video where she shows off the API Analyzer and how it works. The code for the API Analyzer is up here on GitHub. Please leave an issue if you find one!

Windows Compatibility Pack for .NET Core

Second, the Windows Compatibility Pack for .NET Core is a nice piece of tech. When .NET Core 2.0 came out and the .NET Standard 2.0 was finalized, it included over 32k APIs that made it extremely compatible with existing .NET Framework code. In fact, it's so compatible, I was able to easily take a 15 year old .NET app and port it over to .NET Core 2.0 without any trouble at all.

They have more than doubled the set of available APIs from 13k in .NET Standard 1.6 to 32k in .NET Standard 2.0.

.NET Standard 2.0 is cool because it's supported on the following platforms:

- .NET Framework 4.6.1

- .NET Core 2.0

- Mono 5.4

- Xamarin.iOS 10.14

- Xamarin.Mac 3.8

- Xamarin.Android 7.5

When you're porting code over to .NET Core that has lots of Windows-specific dependencies, you might find yourself bumping into APIs that aren't a part of .NET Standard 2.0. So, there's a new (preview) Microsoft.Windows.Compatibility NuGet package that "provides access to APIs that were previously available only for .NET Framework."

There will be two kinds of APIs in the Compatibility Pack: APIs that were a part of Windows originally but can work cross-platform, and APIs that will always be Windows only, because they are super OS-specific. API calls to the Windows Registry will always be Windows-specific, for example. But the System.DirectoryServices or System.Drawing APIs could be written in a way that works anywhere. The Windows Compatibility Pack adds over 20,000 more APIs, on top of what's already available in .NET Core. Check out the great video that Immo shot on the compat pack.

The point is, if the API that is blocking you from using .NET Core is now available in this compat pack, yay! But you should also know WHY you are porting to .NET Core. Work continues on both .NET Core and .NET (Full) Framework on Windows. If your app works great today, there's no need to port unless you need a .NET Core specific feature. Here's a great list of rules of thumb from the docs:

Use .NET Core for your server application when:

- You have cross-platform needs.

- You are targeting microservices.

- You are using Docker containers.

- You need high-performance and scalable systems.

- You need side-by-side .NET versions per application.

Use .NET Framework for your server application when:

- Your app currently uses .NET Framework (recommendation is to extend instead of migrating).

- Your app uses third-party .NET libraries or NuGet packages not available for .NET Core.

- Your app uses .NET technologies that aren't available for .NET Core.

- Your app uses a platform that doesn’t support .NET Core.

Finally, it's worth pointing out a few other tools that can aid you in using the right APIs for the job.

- Api Portability Analyzer - command line tool or Visual Studio Extension, a toolchain that can generate a report of how portable your code is between .NET Framework and .NET Core, with an assembly-by-assembly breakdown of issues. See Tooling to help you on the process for more information.

- Reverse Package Search - A useful web service that allows you to search for a type and find packages containing that type.

Enjoy!


© 2017 Scott Hanselman. All rights reserved.

Technical reference implementation for enterprise BI and reporting

We are happy to announce the technical reference implementation of an enterprise grade infrastructure to help accelerate the deployment of a BI and reporting solution to augment your data science and analytics platform on Azure.

Azure offers a rich data and analytics platform for customers, system integrators, and software vendors seeking to build scalable BI and reporting solutions. However, you face pragmatic challenges in building the right infrastructure for enterprise-grade production systems. Besides evaluating the various products for security, scale, performance, and geo-availability requirements, you need to understand service features and their interoperability, and address any feature gaps that you perceive using custom software. This takes weeks, if not months, of time and effort. Often, the end to end architecture that appeared promising during the POC (proof of concept) stages does not translate to a robust production system in the expected time-to-market.

This reference implementation enables you to fast track your infrastructure deployment, so that you can focus on the main goal of building a BI and reporting application that addresses your business needs. Key features and benefits of this reference implementation include:

- It is pre-built with components like Blob, SQL Data Warehouse, SQL Database, and Analysis Services in highly scalable configurations that have been proven in the field to meet enterprise requirements.

- It can be one-click deployed into an Azure subscription, to complete in under 3 hours.

- It is bundled with software for virtual network configuration, job orchestration for data ingestion and flow, system management, monitoring, and other operational essentials for a production system.

- It is tested end-to-end against large workloads.

- It will be supported by trained Microsoft partners who can help you with deployment, and post-deployment customization for your application needs, based on the technical guidance and scripts that ship with the solution.

How do I get the reference implementation?

You can one-click deploy the infrastructure implementation from one of these two locations:

How does the implementation work?

In a nutshell, the deployed implementation works as shown in the graphic below.

You can operationalize the infrastructure using the steps in the User’s Guide, and explore component level details from the Technical Guides.

Next steps

Review the documentation and deploy the implementation. For questions, clarifications, or feedback, please email azent-biandreporting@microsoft.com. You can also monitor any updates to this implementation from the public GitHub.

Server aliases for Azure Analysis Services

Server aliases for Azure Analysis Services allow users to connect to an alias server name instead of the server name exposed in the Azure portal. This can be used in the following scenarios:

- Migrating models between servers without affecting users.

- Providing friendly server names that are easier for users to remember.

- Directing users to different servers at different times of the day.

- Directing users in different regions to instances of Analysis Services that are geographically closer, for example using Azure Traffic Manager.

Any HTTP endpoint that returns a valid Azure Analysis Services server name can provide this capability using the link:// format.

For example, the following URL returns an Azure Analysis Services server name.

Users can connect to the server from Power BI Desktop (in addition to other client tools) using the link:// format.

To create the HTTP endpoint, various methods can be used. For example, a reference to a file in Azure Blob Storage containing the real server name can be used.

In this case, an ASP.NET Web Forms Application was created in Visual Studio 2017. The master page reference and user control were removed from the Default.aspx page. The contents of Default.aspx are simply the following Page directive.

<%@ Page Title="Home Page" Language="C#" AutoEventWireup="true" CodeBehind="Default.aspx.cs" Inherits="FriendlyRedirect._Default" %>

The Page_Load event in Default.aspx.cs uses the Response.Write() method to return the Azure Analysis Services server name.

protected void Page_Load(object sender, EventArgs e)

{

this.Response.Write("asazure://southcentralus.asazure.windows.net/XXX");

}

The website is deployed directly to Azure using the publish feature in Visual Studio.

That’s it! We hope you’ll agree this is a very simple and useful feature.
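Since any HTTP endpoint that returns a server name will do, the same idea can be sketched without ASP.NET. The Python below is a minimal illustration under stated assumptions, not the implementation used in this post: it uses the standard library `http.server`, routes by time of day (one of the scenarios listed above), and tries itself out once on a local port. The `XXX` server name is the placeholder from the example above, while the `YYY` name, the 08:00 to 18:00 window, and the helper names are hypothetical. A real alias endpoint would be hosted on a proper web host rather than on localhost.

```python
import threading
import urllib.request
from datetime import datetime
from http.server import BaseHTTPRequestHandler, HTTPServer

# "XXX" is the placeholder from the post; "YYY" is a hypothetical second server.
DAY_SERVER = "asazure://southcentralus.asazure.windows.net/XXX"
NIGHT_SERVER = "asazure://southcentralus.asazure.windows.net/YYY"


def pick_server(hour: int) -> str:
    """Return the daytime server from 08:00 to 17:59, otherwise the night one."""
    return DAY_SERVER if 8 <= hour < 18 else NIGHT_SERVER


class AliasHandler(BaseHTTPRequestHandler):
    """Answer every GET with the server name as plain text."""

    def do_GET(self):
        body = pick_server(datetime.now().hour).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo quiet


def fetch_once() -> str:
    """Start the endpoint on a free local port, request it once, and stop it."""
    server = HTTPServer(("127.0.0.1", 0), AliasHandler)
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        url = f"http://127.0.0.1:{server.server_port}/"
        # Bypass any configured proxy so the request stays on localhost.
        opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
        with opener.open(url, timeout=5) as response:
            return response.read().decode("utf-8")
    finally:
        server.shutdown()
        server.server_close()


print(fetch_once())
```

The response body is the only thing that matters here: it should be nothing but the `asazure://` server name, which is what the `Response.Write()` call above produces.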

TFVC support and other enhancements hit Continuous Delivery Tools for Visual Studio

A year ago, we released the first preview of the Continuous Delivery Tools for Visual Studio (CD4VS) with support for configuring a continuous integration and continuous delivery pipeline for ASP.NET and ASP.NET Core projects with and without container support. With CD4VS you can always configure Continuous Delivery for solutions under source control to App Service, and Service Fabric Clusters.

Today, we announce the support for configuring Continuous Delivery for solutions under TFVC source control on VSTS. When you click configure Continuous Delivery on the solution menu, CD4VS detects the source control provider to determine if it is TFVC or Git and the remote source control host to determine if it is VSTS or GitHub.

If the remote source control host is VSTS and the source control provider is TFVC, the Configure Continuous Delivery dialog automatically selects the remote server path that your local solution folder is mapped to as your source control folder for the continuous integration build definition.

You can select the target Azure subscription and Azure service host. When you click OK, CD4VS will automatically configure a build and release definition that will fire a build and release whenever anyone on your team checks in code to that source control folder.

Other enhancements

We have been releasing incremental updates and recently, we introduced inline creation of a new Azure Container Registry (ACR) as well as support for SSH authentication to GitHub and VSTS Git repositories. If you are configuring Continuous Delivery for a containerized solution you can select an existing ACR, or opt to create a new one.

When you opt to create a new ACR, you can edit the new Container Registry details to change its name, resource group and location.

As you configure Continuous Delivery, CD4VS will now recognize solutions under source control in VSTS repositories that only accept authentication over SSH and populate the dialog with repository details and list of available remote branches in that repository.

Please keep the feedback coming!

Thank you to everyone who has reached out and shared feedback and ideas so far. We’re always looking for feedback on where to take this Microsoft DevLabs extension next. There is a Slack channel and a team alias vsdevops@microsoft.com where you can reach out to the team and others in the community sharing ideas on this topic.

Ahmed Metwally, Senior Program Manager, Visual Studio @cd4vs

Ahmed is a Program Manager on the Visual Studio Platform team focused on improving team collaboration and application lifecycle management integration.

R/Finance 2018, Chicago June 1-2

The tenth annual R/Finance conference will be held in Chicago, June 1-2 2018. This is a fantastic conference for anyone working with R in the finance industry, or doing research around R in finance in the academic sector. This community-led, single-track conference always features a program of interesting talks in a convival atmosphere.

If you'd like to contribute a 20-minute presentation or a 5-minute lightning talk, the call for papers is open through February 2, 2018. If you'd like to attend, registrations will open in March 2018.

Microsoft is proud to be a continuing sponsor of R/Finance 2018. You can read a summary of last year's conference here, and watch recordings of the talks on Channel 9. For more on the 2018 conference, follow the link below.

R/Finance 2018: Overview

Launching preview of Azure Migrate

At Microsoft Ignite 2017, we announced Azure Migrate – a new service that provides guidance, insights, and mechanisms to assist you in migrating to Azure. We made the service available in limited preview, so you could request access, try it out, and provide feedback. We are humbled by the response received and thankful for the time you took to provide feedback.

Today, we are excited to launch the preview of Azure Migrate. The service is now broadly available and there is no need to request access.

Azure Migrate enables agentless discovery of VMware-virtualized Windows and Linux virtual machines (VMs). It also supports agent-based discovery, which enables dependency visualization for a single VM or a group of VMs so that you can easily identify multi-tier applications.

Application-centric discovery is a good start but not enough to make an informed decision. So, Azure Migrate enables quick assessments that help answer three questions:

- Readiness: Is a VM suitable for running in Azure?

- Rightsizing: What is the right Azure VM size based on utilization history of CPU, memory, disk (throughput and IOPS), and network?

- Cost: How much is the recurring Azure cost considering discounts like Azure Hybrid Benefit?

The assessment doesn’t stop there. It also suggests workload-specific migration services, for example Azure Site Recovery (ASR) for servers and Azure Database Migration Service (DMS) for databases. ASR enables application-aware server migration with minimal downtime and no-impact migration testing. DMS provides a simple, self-guided solution for moving on-premises SQL databases to Azure.

Once migrated, you want to ensure that your VMs stay secure and well-managed. For this, you can use various other Azure offerings like Azure Security Center, Azure Cost Management, Azure Backup, etc.

Azure Migrate is offered at no additional charge, is supported for production deployments, and is available in the West Central US region. Note that the availability of Azure Migrate in a particular region does not affect your ability to plan migrations for other target regions. For example, even if a migration project is created in West Central US, the discovered VMs can be assessed for West US 2, UK West, or Japan East.

You can get started by creating a migration project in the Azure portal.

You can also:

- Get and stay informed by referring to the documentation.

- Seek help by posting a question on the forum or contacting Microsoft Support.

- Provide feedback by posting (or voting for) an idea on UserVoice.

We hope you find Azure Migrate useful in your journey to Azure!

Get notified when Azure service incidents impact your resources

When an Azure service incident affects you, we know that it is critical that you are equipped with all the information necessary to mitigate any potential impact. The goal for the Azure Service Health preview is to provide timely and personalized information when needed, but how can you be sure that you are made aware of these issues?

Today we are happy to announce a set of new features for creating and managing Service Health alerts. Starting today, you can:

- Easily create and manage alerts for service incidents, planned maintenance, and health advisories.

- Integrate your existing incident management system like ServiceNow®, PagerDuty, or OpsGenie with Service Health alerts via webhook.

So, let’s walk through these experiences and show you how it all works!

Creating alerts during a service incident

Let’s say you visit the Azure Portal, and you notice that your personalized Azure Service Health map is showing some issues with your services. You can gain access to the specific details of the event by clicking on the map, which takes you to your personalized health dashboard. Using this information, you are able to warn your engineering team and customers about the impact of the service incident.

If you have not pinned a map to your dashboard yet, check out these simple steps.

In this instance, you noticed the health status of your services passively. However, the question you really want answered is, “How can I get notified the next time an event like this occurs?” Using a single click, you can create a new alert based on your existing filters.

Click the “Create service health alert” button, and a new alert creation blade will appear, prepopulated with the filter settings you selected before. Name the alert, and quickly ensure that the other settings are as you expect. Finally, create a new action group to notify when this alert fires, or use an existing group set up in the past.

Once you click “OK”, you will be brought back to the health dashboard with a confirmation that your new alert was successfully created!

Create and manage existing Service Health alerts

In the Health Alerts section, you can find all your new and existing Service Health alerts. If you click on an alert, you will see that it contains details about the alert criteria, notification settings, and even a historical log of when this alert has fired in the past. If you want to make edits to your new or existing Service Health alert, you can select the more options button (“…”) and immediately get access to manage your alert.

During this process, you might think of other alerts you want to set up, so we make it easy for you to create new alerts by clicking the “Create New Alert” button, which gives you a blank canvas to set up your new notifications.

Configure health notifications for existing incident management systems via webhook

Some of you may already have an existing incident management system like ServiceNow, PagerDuty, or OpsGenie which contains all of your notification groups and escalation settings. We have worked with engineers from these companies to bring direct support for our Service Health webhook notifications, making the end-to-end integration simple for you. Even if you use another incident management solution, we have written up details about the Service Health webhook payload, along with suggestions for how you might set up an integration on your own. For complete documentation on all of these options, you can review our instructions.

Each of the different incident management solutions will give you a unique webhook address that you can add to the action group for your Service Health alerts.

Once the alert fires, your respective incident management system will automatically ingest and parse the data to make it simple for you to understand!
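If you are building your own integration rather than using one of the systems above, the receiving side mostly needs to pull a few fields out of the JSON body that the webhook delivers. Here is a minimal Python sketch of that step. The key names (data, context, activityLog, properties, title, service, region, incidentType) and the sample values are assumptions made for this example, so check them against the documented Service Health webhook payload before relying on them.

```python
import json


def summarize_service_health(payload):
    """Extract a few readable fields from a Service Health webhook body.

    The key names used here are assumptions for illustration only.
    Missing keys yield None instead of raising an error.
    """
    data = payload.get("data", {})
    activity_log = data.get("context", {}).get("activityLog", {})
    properties = activity_log.get("properties", {})
    return {
        "status": data.get("status"),
        "title": properties.get("title"),
        "service": properties.get("service"),
        "region": properties.get("region"),
        "incident_type": properties.get("incidentType"),
    }


# Hypothetical request body, as it might arrive at your webhook endpoint.
sample_body = json.dumps({
    "data": {
        "status": "Activated",
        "context": {
            "activityLog": {
                "properties": {
                    "title": "Example incident title",
                    "service": "Virtual Machines",
                    "region": "West Europe",
                    "incidentType": "Incident",
                }
            }
        },
    }
})

summary = summarize_service_health(json.loads(sample_body))
print(summary)
```

Reading every level with a fallback keeps the handler from failing when a field is absent, which is useful if the payload differs from what this sketch assumes.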

Special thanks to the following people who helped us make this so simple for you all:

- David Shackelford and David Cooper from PagerDuty

- Çağla Arıkan and Berkay Mollamustafaoglu from OpsGenie

- Manisha Arora and Sheeraz Memon from ServiceNow

Closing

I hope you can see how our updates to the Azure Service Health preview bring you that much closer to the action when an Azure service incident affects you. We are excited to continually bring you better experiences and would love any and all feedback you can provide. Reach out to me or leave feedback right in the portal. We look forward to seeing what you all create!

- Shawn Tabrizi (@shawntabrizi)

Run your PySpark Interactive Query and batch job in Visual Studio Code

We are excited to introduce the integration of HDInsight PySpark into Visual Studio Code (VSCode), which allows developers to easily edit Python scripts and submit PySpark statements to HDInsight clusters. For PySpark developers who value the productivity of the Python language, VSCode HDInsight Tools offer a quick Python editor with a simple getting started experience, and enable you to submit PySpark statements to HDInsight clusters with interactive responses. This interactivity brings the best properties of Python and Spark to developers and empowers you to gain faster insights.

Key customer benefits

- Interactive responses bring the best properties of Python and Spark with flexibility to execute one or multiple statements.

- Built-in Python language services, such as IntelliSense auto-suggest, autocomplete, and error markers, among others.

- Preview and export your PySpark interactive query results to CSV, JSON, and Excel formats.

- Integration with Azure for HDInsight cluster management and query submissions.

- Link with Spark UI and Yarn UI for further troubleshooting.

How to start HDInsight Tools for VSCode

Simply open your Python files in your HDInsight workspace and connect to Azure. You can then start to author Python scripts or Spark SQL to query your data.

- Run Spark Python interactive

- Run Spark SQL interactive

How to install or update

First, install Visual Studio Code and download Mono 4.2.x (for Linux and Mac). Then get the latest HDInsight Tools by going to the VSCode Extension repository or the VSCode Marketplace and searching “HDInsight Tools for VSCode”.

For more information about HDInsight Tools for VSCode, please use the following resources:

- User Manual: HDInsight Tools for VSCode

- User Manual: Set Up PySpark Interactive Environment

- Demo Video: HDInsight for VSCode Video

- Hive LLAP: Use Interactive Query with HDInsight

Learn more about today’s announcements on the Azure blog and Big Data blog. Discover more on the Azure service updates page.

If you have questions, feedback, comments, or bug reports, please use the comments below or send a note to hdivstool@microsoft.com.

How to make Python easier for the R user: revoscalepy

by Siddarth Ramesh, Data Scientist, Microsoft

I’m an R programmer. To me, R has been great for data exploration, transformation, statistical modeling, and visualizations. However, there is a huge community of Data Scientists and Analysts who turn to Python for these tasks. Moreover, both R and Python experts exist in most analytics organizations, and it is important for both languages to coexist.

Many times, this means that R coders will develop a workflow in R but then must redesign and recode it in Python for their production systems. If the coder is lucky, this is easy, and the R model can be exported as a serialized object and read into Python. There are packages that do this, such as pmml. Unfortunately, many times, this is more challenging because the production system might demand that the entire end-to-end workflow is built exclusively in Python. That’s sometimes tough because there are aspects of statistical model building in R which are more intuitive than in Python.

Python has many strengths, such as robust data structures like dictionaries, compatibility with Deep Learning and Spark, and its ability to be a multipurpose language. However, many scenarios in enterprise analytics require people to go back to basic statistics and Machine Learning, for which the classic Data Science packages in Python are not as intuitive as R. The key difference is that many statistical methods are built into R natively. As a result, there is a gap when R users must build workflows in Python. To try to bridge this gap, this post will discuss a relatively new package developed by Microsoft, revoscalepy.

Why revoscalepy?

Revoscalepy is the Python implementation of the R package RevoScaleR included with Microsoft Machine Learning Server.

The methods in ‘revoscalepy’ are the same, and more importantly, the way the R user can view data is the same. The reason this is so important is that for an R programmer, being able to understand the data shape and structure is one of the challenges with getting used to Python. In Python, data types are different, preprocessing the data is different, and the criteria for feeding the processed dataset into a model are different.

To understand how revoscalepy eases the transition from R to Python, the following section will compare building a decision tree using revoscalepy with building a decision tree using sklearn. The Titanic dataset from Kaggle will be used for this example. To be clear, this post is written from an R user’s perspective, as many of the challenges this post will outline are standard practices for native Python users.

revoscalepy versus sklearn

revoscalepy works on Python 3.5, and can be downloaded as a part of Microsoft Machine Learning Server. Once downloaded, set the Python environment path to the python executable in the MML directory, and then import the packages.

The first chunk of code imports the revoscalepy, numpy, pandas, and sklearn packages, and imports the Titanic data. Pandas has some R roots in that it has its own implementation of DataFrames as well as methods that resemble R’s exploratory methods.

import revoscalepy as rp
import numpy as np
import pandas as pd
import sklearn as sk
titanic_data = pd.read_csv('titanic.csv')
titanic_data.head()

Preprocessing with sklearn

One of the challenges as an R user with using sklearn is that the decision tree model for sklearn can only handle the numeric datatype. Pandas has a categorical type that looks like factors in R, but sklearn’s Decision Tree does not integrate with this. As a result, numerically encoding the categorical data becomes a mandatory step. This example will use a one-hot encoder to shape the categories in a way that sklearn’s decision tree understands.

The side effect of having to one-hot encode the variable is that if the dataset contains high cardinality features, it can be memory intensive and computationally expensive because each category becomes its own binary column. While implementing one-hot encoding itself is not a difficult transformation in Python and provides good results, it is still an extra step for an R programmer to have to manually implement. The following chunk of code detaches the categorical columns, label and one-hot encodes them, and then reattaches the encoded columns to the rest of the dataset.

from sklearn import preprocessing, tree
# Detach the categorical (string) columns and drop the free-text ones
x = titanic_data.select_dtypes(include=[object])
x = x.drop(['Name', 'Ticket', 'Cabin'], axis=1)
# Treat passenger class as a category rather than a number
x = pd.concat([x, titanic_data['Pclass']], axis=1)
x['Pclass'] = x['Pclass'].astype('object')
x = x.fillna('Missing')
# Label encode each column, then one-hot encode the integer labels
le = preprocessing.LabelEncoder()
x_cats = x.apply(le.fit_transform)
enc = preprocessing.OneHotEncoder()
enc.fit(x_cats)
onehotlabels = enc.transform(x_cats).toarray()
# Reattach the encoded columns to the numeric columns
encoded_titanic_data = pd.concat([pd.DataFrame(titanic_data.select_dtypes(include=[np.number])), pd.DataFrame(onehotlabels)], axis=1)

At this point, there are more columns than before, and the columns no longer have semantic names (they have been enumerated). This means that if a decision tree is visualized, it will be difficult to understand without going through the extra step of renaming these columns. There are techniques in Python to help with this, but it is still an extra step that must be considered.
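To make the column growth and the naming problem concrete, here is a minimal pure-Python sketch of one-hot encoding that keeps semantic column names. It is not what sklearn does internally; the function name one_hot and the sample values are made up for this illustration.

```python
def one_hot(column_name, values):
    """One-hot encode a list of category values.

    Returns (names, rows): one readable column name per distinct
    category, and one 0/1 row per input value.
    """
    categories = sorted(set(values))
    names = ["{}={}".format(column_name, c) for c in categories]
    rows = [[1 if v == c else 0 for c in categories] for v in values]
    return names, rows


# Hypothetical sample of the Embarked column after filling missing values.
embarked = ["S", "C", "S", "Q", "Missing"]
names, rows = one_hot("Embarked", embarked)

print(names)
for row in rows:
    print(row)
```

Each distinct category becomes its own column, so a feature with thousands of distinct values produces thousands of columns. Building names such as Embarked=S at encoding time is one way to keep a visualized tree readable.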

Preprocessing with revoscalepy

Unlike sklearn, revoscalepy reads pandas’ ‘category’ type like factors in R. This section of code iterates through the DataFrame, finds the string types, and converts those types to ‘category’. In pandas, categories are unordered unless an order is explicitly requested, which prevents ordered factors.

titanic_data_object_types = titanic_data.select_dtypes(include=['object'])
for column in titanic_data_object_types.columns:
    # astype('category') creates an unordered categorical by default
    titanic_data[column] = titanic_data[column].astype('category')
titanic_data['Pclass'] = titanic_data['Pclass'].astype('category')

The dataset is now ready to be fed into the revoscalepy model.

Training models with sklearn

One difference between implementing a model in R and in sklearn in Python is that sklearn does not use formulas.

Formulas are important and useful for modeling because they provide a consistent framework to develop models with varying degrees of complexity. With formulas, users can easily apply different types of variable cases, such as ‘+’ for separate independent variables, ‘:’ for interaction terms, and ‘*’ to include both the variables and their interaction terms, along with many other convenient calculations. Within a formula, users can do mathematical calculations, create factors, and include more complex entities such as third-order interactions. Furthermore, formulas allow for building highly complex models such as mixed effect models, which are next to impossible to build without them. In Python, there are packages such as ‘statsmodels’ which have more intuitive ways to build certain statistical models. However, statsmodels has a limited selection of models, and does not include tree-based models.
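To show what the ‘*’ shorthand saves, here is a simplified Python sketch that expands the right-hand side of a formula into its individual terms. It only understands ‘+’, ‘:’ and ‘*’, it is not how R or revoscalepy parse formulas, and the function name expand_formula_terms is made up for this illustration.

```python
from itertools import combinations


def expand_formula_terms(rhs):
    """Expand the right-hand side of a formula into a list of terms.

    'a*b' becomes 'a', 'b' and 'a:b'; terms joined with '+' are kept
    separate. Other formula operators are not handled in this sketch.
    """
    terms = []
    for chunk in rhs.split("+"):
        factors = [f.strip() for f in chunk.split("*") if f.strip()]
        # Every non-empty combination of the factors becomes a term:
        # single variables first, then higher-order interactions.
        for size in range(1, len(factors) + 1):
            for combo in combinations(factors, size):
                term = ":".join(combo)
                if term not in terms:
                    terms.append(term)
    return terms


print(expand_formula_terms("Pclass*Sex + Age"))
print(expand_formula_terms("a*b*c"))
```

Without formulas, each of the interaction terms in that output has to be built by hand as an extra column before the model is fit.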

With sklearn, model.fit expects the independent and dependent terms to be columns from the DataFrame. Interactions must be created manually as a preprocessing step for more complex examples. The code below trains the decision tree:

model = tree.DecisionTreeClassifier(max_depth=50)
# Separate the predictors from the label and replace missing values
x = encoded_titanic_data.drop(['Survived'], axis=1)
x = x.fillna(-1)
y = encoded_titanic_data['Survived']
model = model.fit(x, y)

Training models with revoscalepy

revoscalepy brings back formulas. Granted, users cannot view the formula the same way as they can in R, because formulas are strings in Python. However, importing code from R to Python is an easy transition because formulas are read the same way in the revoscalepy functions as in the model fit functions in R. The code below fits the Decision Tree in revoscalepy.

# rx_dtree works with formulas, just like models in R
form = 'Survived ~ Pclass + Sex + Age + Parch + Fare + Embarked'
titanic_data_tree = rp.rx_dtree(form, titanic_data, max_depth=50)

The resulting object, titanic_data_tree, is the same structural object that rxDTree() would create in R. Because the individual elements that make up the rx_dtree() object are the same as those of rxDTree(), R users can easily understand the decision tree without having to translate between two object structures.

Conclusion

From the workflow, it should be clear how revoscalepy can help with transliteration between R and Python. Sklearn has different preprocessing considerations because the data must be fed into the model differently. The advantage to revoscalepy is that R programmers can easily convert their R code to Python without thinking too much about the ‘Pythonic way’ of implementing their R code. Categories replace factors, rx_dtree() reads the R-like formula, and the arguments are similar to the R equivalent. Looking at the big picture, revoscalepy is one way to ease Python for the R user and future posts will cover other ways to transition between R and Python.

Microsoft Docs: Introducing revoscalepy

Join us for an AMA on Azure Stack

This post is authored by Natalia Mackevicius, Partner Director for Azure Infrastructure & Management, Azure Stack; Vijay Tewari, Principal Group Program Manager, Microsoft Azure Stack; and Bradley Bartz, Group Program Manager at Microsoft.

Azure Stack engineering team here, announcing that we’ll host a Reddit AMA on Microsoft Azure Stack, an extension of Azure. The AMA will take place Thursday, December 7 at 8 am PT on /r/Azure. We’d like to invite you to join us and give you some details about the upcoming AMA.

Why are we hosting an AMA about Azure Stack?

Azure Stack made its official debut at Microsoft Ignite 2017, and is already shipping to customers from our hardware partners. Now that Azure Stack is available, we want to give you a chance to ask all of your questions – what it is, what it isn’t, why it’s such a game-changer for hybrid cloud, or even Microsoft’s vision for hybrid cloud. For all of the excitement around Azure Stack, it’s still a new product and we want you to get the most out of it. It’s also important for us to hear your feedback as we continue to make Azure Stack even better.

What can you ask us?

Anything! Specifically, we’d love to take your questions about Azure Stack and how it fits into our vision of a consistent hybrid cloud. We’ll do our best to answer all your questions openly about the product and hybrid cloud. Keep in mind, though, that we cannot comment on unreleased features or future plans.

How can I join the conversation?

We’re glad you asked. As mentioned, we’ll be taking your questions starting Thursday, December 7 at 8am PT in the Azure subreddit. We'll activate the AMA a few days in advance so you can get a head start if you already have questions. Feel free to leave your questions early – we’ll be checking in.

We look forward to answering your questions. Talk to you soon!

Automatic tuning introduces Automatic plan correction and T-SQL management

Azure SQL Database automatic tuning, the industry’s first truly auto-tuning database based on Artificial Intelligence, now provides more flexibility and power with the global introduction of the following new features:

- Automatic plan correction

- Management via T-SQL

- Index created by automatic tuning flag

Automatic tuning is capable of seamlessly tuning hundreds of thousands of databases without affecting performance of the existing workloads. The solution has been globally available since 2016 and proven to enable performant and stable workloads while reducing resource consumption on Azure.

Automatic plan correction

Automatic plan correction, a feature introduced in SQL Server 2017, is now making its way to Azure SQL Database as the tuning option Force Last Good Plan. This decision was made after rigorous testing on hundreds of thousands of SQL Databases, ensuring there is an overall positive performance gain for workloads running on Azure. This feature shines when managing hundreds or thousands of databases and heavy workloads.

The automatic tuning feature continuously monitors SQL Database workloads and, with the Automatic plan correction option Force Last Good Plan, it automatically tunes regressed query execution plans by enforcing the last plan with better performance. Since the system automatically monitors the workload performance, in case of changing workloads, the system dynamically adjusts to force the best performing query execution plan.

The system automatically validates all tuning actions performed in order to ensure that each tuning action results in a positive performance gain. If a tuning action degrades performance, the system automatically learns from it and promptly reverts the tuning action. Tuning actions performed by automatic tuning can be viewed by users in the list of recent tuning recommendations through the Azure Portal and T-SQL queries.

Click here to read more about how to configure automatic tuning.

Manage automatic tuning via T-SQL

Recognizing the needs of a large community of professionals using T-SQL and scripting procedures to manage their databases, the automatic tuning team has developed a new feature making it possible to enable, disable, configure, and view the current and historical tuning recommendations using T-SQL. This makes it possible to develop custom solutions for managing automatic tuning, including custom monitoring, alerting, and reporting capabilities.

In the upcoming sections, this blog post outlines a few examples on how to use some of the T-SQL capabilities we have made available to you.

Viewing the current automatic tuning configuration via T-SQL

In order to view the current state of the automatic tuning options configured on an individual database, connect to a SQL Database using a tool such as SSMS and execute the following query to read the system view sys.database_automatic_tuning_options:

SELECT * FROM sys.database_automatic_tuning_options

The resulting output contains the values from the automatic tuning options system view.

The name column shows that there are three automatic tuning options available:

- FORCE_LAST_GOOD_PLAN

- CREATE_INDEX, and

- DROP_INDEX.

Column desired_state indicates settings for an individual tuning option, with its description available in the column desired_state_desc. Possible values for desired_state are 0 = OFF and 1 = ON for custom settings, and 2 = DEFAULT for inheriting settings from the parent server or Azure platform defaults.

Values of the column desired_state_desc indicate if an individual automatic tuning option is set to ON, OFF, or inherited by DEFAULT (corresponding to their numerical values in the column desired_state). The column actual_state indicates if the automatic tuning option is actually working on a database with value 1 indicating it is, and with value 0 indicating it is not working.

Please note that although you might have one of the automatic tuning options set to ON, the system might decide to temporarily disable automatic tuning if it deems this necessary to protect the workload performance. Automatic tuning is also temporarily disabled if the Query Store is not enabled on a database, or if it is in a read-only state. In these cases, the view of the current state will indicate “Disabled by the system” and the value of the column actual_state will be 0.

The last part of the sys.database_automatic_tuning_options system view indicates in the columns reason and reason_desc if each of the individual automatic tuning options is configured through defaults from Azure, defaults from the parent server, or if it is custom configured. In case of inheriting Azure platform defaults, column reason will have value 2 and column reason_desc value AUTO_CONFIGURED. In case of inheriting parent server defaults, column reason will have value 1 and column reason_desc value INHERITED_FROM_SERVER. In case of a custom setting for an individual automatic tuning option, both columns reason and reason_desc will have the value NULL.
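If you script against this view, a small helper can turn each row into a readable summary. The following Python sketch works on rows that have already been fetched as dictionaries; it does not connect to a database. The function name describe_option and the sample rows are made up for this illustration, and the value mappings are taken from the description above.

```python
DESIRED_STATE = {0: "OFF", 1: "ON", 2: "DEFAULT"}

# reason_desc values as described above; None stands for SQL NULL.
CONFIG_SOURCE = {
    None: "custom setting",
    "AUTO_CONFIGURED": "Azure platform defaults",
    "INHERITED_FROM_SERVER": "parent server defaults",
}


def describe_option(row):
    """Summarize one row of sys.database_automatic_tuning_options."""
    desired = DESIRED_STATE[row["desired_state"]]
    running = "active" if row["actual_state"] == 1 else "not active"
    source = CONFIG_SOURCE.get(row["reason_desc"], "unknown source")
    return "{}: desired {} ({}), currently {}".format(
        row["name"], desired, source, running
    )


# Hypothetical rows, shaped like the columns discussed in this section.
sample_rows = [
    {"name": "CREATE_INDEX", "desired_state": 2, "actual_state": 1,
     "reason_desc": "AUTO_CONFIGURED"},
    {"name": "DROP_INDEX", "desired_state": 1, "actual_state": 0,
     "reason_desc": None},
]

for row in sample_rows:
    print(describe_option(row))
```

The second sample row shows the case called out earlier: an option can be set to ON while actual_state is 0 because the system has temporarily disabled it.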

Click here to view detailed structure of system view sys.database_automatic_tuning_options.

Enable automatic tuning via T-SQL

In order to enable automatic tuning on a single database and have it inherit the Azure configuration defaults, execute a query such as this one:

ALTER DATABASE current SET AUTOMATIC_TUNING = AUTO /* possible values AUTO, INHERIT and CUSTOM */

Possible values to enable automatic tuning are AUTO, INHERIT and CUSTOM. Setting the automatic tuning value to AUTO will apply Azure configuration defaults for automatic tuning. Using the value INHERIT will inherit the default configuration from the parent server. This is especially useful if you would like to customize automatic tuning configuration on a parent server, and have all the databases on such server INHERIT these custom settings.

Please note that in order for the inheritance to work, the three individual tuning options FORCE_LAST_GOOD_PLAN, CREATE_INDEX and DROP_INDEX need to be set to DEFAULT. This is because one, or several of these individual tuning options could be custom configured and there could be a combination between DEFAULT, and custom forced ON or OFF settings in place.

Using the value CUSTOM, you will need to manually custom configure each of the automatic tuning options available.

Custom configuring automatic tuning options via T-SQL

The automatic tuning options that can be custom configured, independent of Azure platform and parent server defaults, are FORCE_LAST_GOOD_PLAN, CREATE_INDEX and DROP_INDEX. These options can be custom configured by executing a query such as this one:

ALTER DATABASE current SET AUTOMATIC_TUNING ( FORCE_LAST_GOOD_PLAN = [ON | OFF | DEFAULT], CREATE_INDEX = [ON | OFF | DEFAULT], DROP_INDEX = [ON | OFF | DEFAULT] )

Possible values to set one of the three available options are ON, OFF and DEFAULT. Setting an individual tuning option to ON will custom configure it to be explicitly turned on, while setting it to OFF will custom configure it to be explicitly turned off. Setting an individual tuning option to DEFAULT will make such option inherit default value from the Azure platform or the parent server, depending on the setting of the AUTOMATIC_TUNING being set to AUTO or INHERIT, as described above.

If you are using Azure defaults, please note that the current Azure defaults for automatic tuning are to have FORCE_LAST_GOOD_PLAN and CREATE_INDEX turned ON and DROP_INDEX turned OFF. We made this decision because the DROP_INDEX option, when turned ON, drops unused or duplicated user-created indexes. Our aim was to protect user-defined indexes and let users explicitly choose whether they would like automatic tuning to manage dropping indexes as well.
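The way ON, OFF and DEFAULT combine with AUTO and INHERIT can be summarized in a few lines of Python. This is only a sketch of the rules described above, not the service’s implementation. The function name effective_state and the server_settings argument are made up for this illustration, and because this post does not say what DEFAULT resolves to under CUSTOM, the sketch treats that case as an error.

```python
# Current Azure platform defaults, as stated above.
AZURE_DEFAULTS = {
    "FORCE_LAST_GOOD_PLAN": "ON",
    "CREATE_INDEX": "ON",
    "DROP_INDEX": "OFF",
}


def effective_state(option, setting, mode, server_settings=None):
    """Resolve one tuning option to ON or OFF.

    setting is ON, OFF or DEFAULT; mode is AUTO, INHERIT or CUSTOM.
    server_settings is a hypothetical dict of the parent server's
    ON/OFF choices, used only when mode is INHERIT.
    """
    if setting in ("ON", "OFF"):
        # Explicit user choices are always respected.
        return setting
    if setting != "DEFAULT":
        raise ValueError("unknown setting: {}".format(setting))
    if mode == "AUTO":
        return AZURE_DEFAULTS[option]
    if mode == "INHERIT":
        if server_settings is None:
            raise ValueError("INHERIT needs the parent server settings")
        return server_settings[option]
    # Assumption: DEFAULT under CUSTOM is left unresolved in this sketch.
    raise ValueError("cannot resolve DEFAULT when mode is {}".format(mode))


print(effective_state("DROP_INDEX", "DEFAULT", "AUTO"))
print(effective_state("DROP_INDEX", "ON", "AUTO"))
print(effective_state("CREATE_INDEX", "DEFAULT", "INHERIT",
                      {"CREATE_INDEX": "OFF"}))
```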

If you would like to use the DROP_INDEX tuning option, please set the DROP_INDEX option to ON through executing the following query:

ALTER DATABASE current SET AUTOMATIC_TUNING (DROP_INDEX = ON) /* Possible values DEFAULT, ON, OFF */

Querying sys.database_automatic_tuning_options afterwards will show in the columns desired_state and desired_state_desc that the DROP_INDEX option has been set to ON.

Please note that the automatic tuning configuration settings we have made available through T-SQL are identical to the configuration options available through the Azure Portal; see Enable automatic tuning.

Reverting back automatic tuning from custom to inheriting defaults via T-SQL

Please note that once you set the AUTOMATIC_TUNING to the CUSTOM setting in order to custom configure each of the three automatic tuning options (FORCE_LAST_GOOD_PLAN, CREATE_INDEX and DROP_INDEX) manually (ON or OFF), in order to revert back to the default inheritance, you will need to:

- set AUTOMATIC_TUNING back to AUTO or INHERIT, and also

- set each of the tuning options FORCE_LAST_GOOD_PLAN, CREATE_INDEX and DROP_INDEX for which you need inheritance back to DEFAULT.

This is because automatic tuning always respects decisions users explicitly took while customizing options and it never overrides them. In such case, you will first need to set the preference to inherit the values from Azure or the parent server, followed by setting each of the three available tuning options to DEFAULT through executing the following query:

ALTER DATABASE current SET AUTOMATIC_TUNING = AUTO; /* Possible values AUTO for Azure defaults and INHERIT for server defaults */
ALTER DATABASE current SET AUTOMATIC_TUNING ( FORCE_LAST_GOOD_PLAN = DEFAULT, CREATE_INDEX = DEFAULT, DROP_INDEX = DEFAULT );

Executing the above query sets all three automatic tuning options to inherit defaults from Azure.
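If you manage many databases from scripts, you may want to generate these two statements rather than type them. Here is a minimal Python sketch that only builds the T-SQL strings; it does not connect to or modify any database. The function name revert_to_defaults_sql is made up for this illustration.

```python
TUNING_OPTIONS = ("FORCE_LAST_GOOD_PLAN", "CREATE_INDEX", "DROP_INDEX")


def revert_to_defaults_sql(mode="AUTO"):
    """Build the two statements that restore default inheritance.

    mode is AUTO for Azure defaults or INHERIT for server defaults.
    """
    if mode not in ("AUTO", "INHERIT"):
        raise ValueError("mode must be AUTO or INHERIT")
    option_list = ", ".join(
        "{} = DEFAULT".format(option) for option in TUNING_OPTIONS
    )
    return [
        "ALTER DATABASE current SET AUTOMATIC_TUNING = {};".format(mode),
        "ALTER DATABASE current SET AUTOMATIC_TUNING ({});".format(option_list),
    ]


for statement in revert_to_defaults_sql("INHERIT"):
    print(statement)
```

Keeping both statements together in one helper makes it harder to forget the second step, which is the one that clears earlier custom ON or OFF choices.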

View tuning recommendations and history via T-SQL

In order to view the history of recent automatic tuning recommendations, you can retrieve this information from the system view sys.dm_db_tuning_recommendations through executing the following query:

SELECT * FROM sys.dm_db_tuning_recommendations

Output from this view provides detailed information on the current state of automatic tuning.

To highlight some of the values available in this view, we will start with the type of a tuning recommendation and the reason why it was made. The column type indicates the type of the tuning recommendation made. The column reason indicates the identified reason why a particular tuning recommendation was made.

The columns valid_since and last_refresh indicate when a tuning recommendation was made and the time until which the system has considered that recommendation beneficial to the workload performance.

The column state provides a JSON document with details of automatically applied recommendation with a wealth of information related to index management and query execution plans.

If a tuning recommendation can be executed automatically, the column is_executable_action will be populated with a bit value 1. Automatic tuning recommendations flagged with a bit 1 in the column is_revertable_action denote tuning recommendations that can be automatically reverted by the system if required. The column execute_action_start_time provides a timestamp when a tuning recommendation was applied.
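For custom monitoring or reporting, these columns can be filtered and decoded once the rows have been fetched. The Python sketch below assumes the rows are already available as dictionaries keyed by column name and does not connect to a database. The function name executable_recommendations, the sample rows, and the contents of the sample state document are made up for this illustration.

```python
import json


def executable_recommendations(rows):
    """Keep recommendations that can be executed automatically.

    Each result notes whether the action is revertable and carries the
    decoded JSON document from the state column.
    """
    result = []
    for row in rows:
        if row["is_executable_action"] != 1:
            continue
        result.append({
            "type": row["type"],
            "reason": row["reason"],
            "revertable": row["is_revertable_action"] == 1,
            "state": json.loads(row["state"]),
        })
    return result


# Hypothetical rows; the state JSON here is a placeholder document.
sample_rows = [
    {"type": "FORCE_LAST_GOOD_PLAN", "reason": "example reason text",
     "is_executable_action": 1, "is_revertable_action": 1,
     "state": '{"example_key": "example_value"}'},
    {"type": "FORCE_LAST_GOOD_PLAN", "reason": "another example reason",
     "is_executable_action": 0, "is_revertable_action": 0,
     "state": "{}"},
]

recommendations = executable_recommendations(sample_rows)
print(len(recommendations), "executable recommendation(s)")
print(recommendations[0]["state"])
```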

Click here to view detailed structure of system view sys.dm_db_tuning_recommendations.

System created indexes column

The automatic tuning team has also added a new column, auto_created, to the system view sys.indexes. It contains a bit indicating whether an index was created by automatic tuning. This makes it possible to clearly distinguish between system-created and user-created indexes on a database.

The column auto_created holds a bit value of 0 or 1, where 0 indicates that an index was created by a user, and 1 indicates that the index was created by automatic tuning.

This distinction matters because indexes created by automatic tuning behave differently from user-created indexes. When an automatic tuning index exists over a column that a user wants to drop, the automatic tuning index will move out of the way. A user-created index, by contrast, would prevent this operation.

Click here to view detailed structure of system view sys.indexes.

Summary

With the introduction of Automatic plan correction with the Force Last Good Plan option, management of automatic tuning options via T-SQL, and a flag indicating whether an index was automatically created, we have provided further flexibility to customize automatic tuning for the needs of the most demanding users.

Please let us know how you are using the solution, and share examples of how you have customized it for your needs. Please leave your feedback and questions in the comments.