Microsoft’s Performance Contributions to Git in 2017

New Visual Studio Code Extensions for Java Developers: Maven, Tomcat, and Checkstyle

Alongside the release of Debugger for Java and Java Test Runner this week, we’re welcoming a few new members to our Visual Studio Code Java Extension family. We think you’ll find them helpful for your Java development when you’re working with Maven or Tomcat, or making sure your Java code follows standard styles.

Maven Project Explorer

Maven is extremely popular in the Java community and we’d like to make it even easier to use with Visual Studio Code. The new Maven Project Explorer extension:

- Scans the pom.xml files in your workspace and displays all Maven projects and their modules in the sidebar to make them easy to access.

- Provides shortcuts to common Maven goals, namely clean, validate, compile, test, package, verify, install, site, and deploy, so you won’t need to type any of those in your command line window anymore.

- Preserves the history of custom goals so you can quickly re-run long commands (e.g. mvn clean package -DskipTests -Dcheckstyle.skip). Our data shows custom goals are very popular among Maven users, so we believe this will be a useful feature for your repeated tasks.

- Generates projects from Maven Archetype

- And much more…

You can find more information on the extension home page.

Tomcat

For developers working with Tomcat, there’s now a handy tool in Visual Studio Code. With the Tomcat extension, you can manage all your local Tomcat servers within the editor, easily debug and run your war packages on Tomcat, and link Tomcat into your workspace.

Checkstyle

The Checkstyle extension is a convenient tool for applying Checkstyle rules to your Java source code so you can see style issues and fix them on the fly. It automates the process of checking your Java code, freeing you from this tedious task while keeping your formatting correct.

Try it out

If you’re trying to find a performant editor for your Java project, please try out these new extensions and let us know what you think! We plan to keep updating and releasing new extensions to make VS Code a better editor for Java.

Follow the steps below to get started:

- Install the Java Extension Pack, which includes Language Support for Java(TM) by Red Hat and Debugger for Java.

- Install the extensions mentioned in this blog as well as the Test Runner/Debugger for Java.

- Learn more about Java on Visual Studio Code.

- Explore our step by step Java Tutorials on VS Code.

Xiaokai He, Program Manager @XiaokaiHe

Xiaokai is a program manager working on Java tools and services. He’s currently focusing on making Visual Studio Code great for Java developers, as well as supporting Java in various Azure services.

#ifdef WINDOWS – Getting Started with Mixed Reality

I sat down with Vlad Kolesnikov from the PAX Mixed Reality team in Windows to talk about what mixed reality is and how developers can get started building immersive experiences. Vlad covered what hardware and tools are needed, building world-scale experiences, and using the Mixed Reality Toolkit to accelerate development for Microsoft HoloLens and Windows Mixed Reality Headsets.

Check out the video above for the full overview and feel free to reach out on Twitter or in the comments below.

Happy coding!

The post #ifdef WINDOWS – Getting Started with Mixed Reality appeared first on Building Apps for Windows.

Power BI and VSTS Analytics

Windows Community Standup on January 18th, 2018

Kevin Gallo, VP of Windows Developer Platform, is hosting the next Windows Community Standup on January 18th, 2018 at 10:00am PST on Channel 9!

Kevin will be discussing the Always Connected PC, with guest speakers Erin Chapple (GM) and Hari Pulapaka (Principal Group Program Manager – Windows Base Kernel). The Always Connected PC is a new category of devices that blends the simplicity and connectivity of the mobile phone with the power and creativity of the PC. The work to enable Windows 10 on ARM is a core investment in bringing this category to life. The group will give an overview of the Always Connected PC, key things a developer should know when developing for Windows 10 on ARM, and answer live questions.

The Windows Community Standup is a monthly online broadcast where we provide additional transparency on what we are building out, and why we are excited. As always, we welcome your feedback.

Once again, we can’t wait to see you at 10:00am PST on January 18th, 2018 on https://channel9.msdn.com.

The post Windows Community Standup on January 18th, 2018 appeared first on Building Apps for Windows.

Building 0verkill on Windows 10 Subsystem for Linux – 2D ASCII art deathmatch game

I'm a big fan of the Windows Subsystem for Linux. It's real Linux that runs real user-mode ELF binaries but it's all on Windows 10. It's not running in a Virtual Machine. I talk about it and some of the things you should be aware of when sharing files between file systems in this YouTube video.

WHAT IS ALL THIS LINUX ON WINDOWS STUFF? Here's a FAQ on the Bash/Windows Subsystem for Linux/Ubuntu on Windows/Snowball in Hell and some detailed Release Notes. Yes, it's real, and it's spectacular. Can't read that much text? Here's a video I did on Ubuntu on Windows 10.

You can now install not only Ubuntu from the Windows Store (make sure you run this first from a Windows PowerShell admin prompt) - "Enable-WindowsOptionalFeature -Online -FeatureName Microsoft-Windows-Subsystem-Linux"

I have set up a very shiny Linux environment on Windows 10 with lovely things like tmux and Midnight Commander. The bash/Ubuntu/WSL shell shares the same "console host" (conhost) as PowerShell and CMD.exe, so as the team adds new support for fonts, colors, ANSI, etc., every terminal gets that new feature.

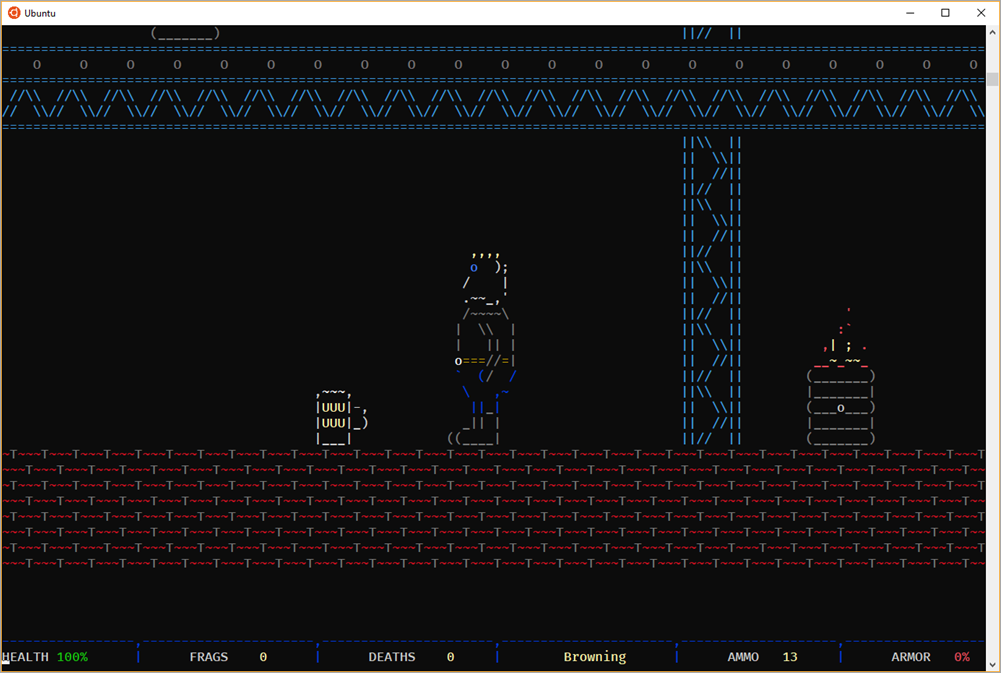

I wanted to see how far this went. How Linuxy is Linux on Windows? How good is the ANSI/ASCII support in the console on Windows 10? Clearly the only real way to check this out would be to try to build 0verkill. 0verkill is a client-server 2D deathmatch-like game in ASCII art. It has both client and server and lots of cool features. Plus building it would exercise the system pretty well. It's also nearly 20 years old which is fun.

PRO TIP: Did you know that you can easily change your command prompt colors globally with the new free open source ColorTool? You can easily switch to solarized or even color-blind schemes for deuteranopia.

There's a fork of the 0verkill code at https://github.com/hackndev/0verkill so I started there. I saw that there was a ./rebuild script that uses aclocal, autoconf, configure, and make, so I needed to apt in some stuff.

sudo apt-get install build-essential autotools-dev automake
sudo apt-get install libx11-dev
sudo apt-get install libxpm-dev

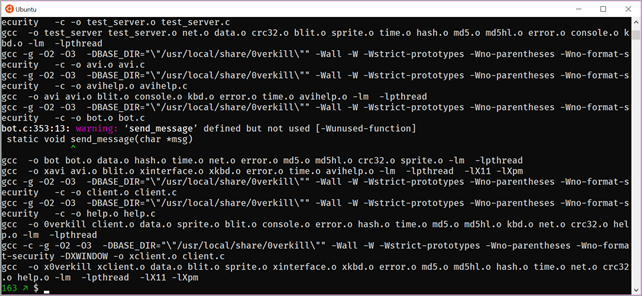

Then I built it with ./rebuild and got a TON of warnings. Looks like this rather old code does some (now, in the modern world) questionable things with fprintf. While I can ignore the warnings, I decided to add -Wno-format-security to the CFLAGS in Makefile.in in order to focus on any larger errors I might run into.
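For context, one common trigger for the -Wformat-security family of warnings is passing a non-literal string as the format argument with no further arguments. The sketch below is not taken from the 0verkill source; write_message is a hypothetical helper that only illustrates the pattern and the usual fix, and it assumes the warnings were of this typical kind.

```cpp
#include <cstddef>
#include <cstdio>

// write_message is a hypothetical helper, not part of 0verkill.
// It copies `message` into `buffer` and returns the length snprintf reports.
int write_message(char* buffer, std::size_t size, const char* message) {
    // The form that -Wformat-security typically flags would be:
    //   return std::snprintf(buffer, size, message);
    // because any '%' inside `message` would be treated as a conversion.
    // Passing the message as an argument to a literal "%s" format avoids that.
    return std::snprintf(buffer, size, "%s", message);
}
```

With the literal format, a message such as "50%s" is copied verbatim instead of being interpreted as a format string.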

I then rebuild again, and get a few warnings, but nothing major. Nice.

I run the server locally with ./server. The server lets you connect multiple clients. I'll just be connecting locally, but it's nice that the networking works.

$ ./server
11. 1.2018 14:01:42 Running 0verkill server version 0.16
11. 1.2018 14:01:42 Initialization.
11. 1.2018 14:01:42 Loading sprites.
11. 1.2018 14:01:42 Loading level "level1"....
11. 1.2018 14:01:42 Loading level graphics.
11. 1.2018 14:01:42 Loading level map.
11. 1.2018 14:01:42 Loading level objects.
11. 1.2018 14:01:42 Initializing socket.
11. 1.2018 14:01:42 Installing signal handlers.
11. 1.2018 14:01:42 Game started.
11. 1.2018 14:01:42 Sleep

Next, run the client in another bash/Ubuntu console window (or a tmux pane) with ./0verkill.

Awesome. Works great, scales with the window size, and the ASCII and color look great.

Now I just need to find someone to play with me...


© 2017 Scott Hanselman. All rights reserved.

How to implement neural networks in R

If you've ever wondered how neural networks work behind the scenes, check out this guide to implementing neural networks from scratch with R, by David Selby. You may be surprised how with just a little linear algebra and a few R functions, you can train a function that classifies the red dots from the blue dots in a complex pattern like this:

David also includes some elegant R code that implements neural networks using R6 classes. For a similar implementation using base R functions, you may also want to check out this guide to implementing neural networks in R by Ilia Karmanov.

Tea & Stats: Building a neural network from scratch in R

Top stories from the VSTS community – 2017.01.12

Services and tools for building intelligent R applications in the cloud

by Le Zhang (Data Scientist, Microsoft) and Graham Williams (Director of Data Science, Microsoft)

As an in-memory application, R is sometimes thought to be constrained in performance or scalability for enterprise-grade applications. But by deploying R in a high-performance cloud environment, and by leveraging the scale of parallel architectures and dedicated big-data technologies, you can build applications using R that provide the necessary computational efficiency, scale, and cost-effectiveness.

We identify four application areas and associated applications and Azure services that you can use to deploy R in enterprise applications. They cover the tasks required to prototype, build, and operationalize an enterprise-level data science and AI solution. In each of the four, there are R packages and tools specifically for accelerating the development of desirable analytics.

Below is a brief introduction of each.

Cloud resource management and operation

Cloud computing instances or services can be harnessed within an R session, which favors programmatic control and operationalization of R-based analytical pipelines. R packages and tools in this category offer a simplified way to interact with the Azure cloud platform and operate resources (e.g., blob storage, Data Science Virtual Machine, Azure Batch Service, etc.) on Azure for various tasks.

- AzureSMR - R package for managing a selection of Azure resources. Targeted at data scientists who need to control Azure resources directly from R functions. APIs include Storage Blobs, HDInsight (Nodes, Hive, Spark), Resource Manager, and Virtual Machines.

- AzureDSVM - R package that offers a convenient harness for the Azure Data Science Virtual Machine (DSVM), remote execution of scalable and elastic data science work, and monitoring of on-demand resource consumption.

- doAzureParallel - R package that allows users to submit parallel workloads in Azure.

- rAzureBatch - run R code in parallel across a cluster in Azure Batch.

- AzureML - an R interface to AzureML experiments, datasets, and web services.

Remote interaction and access to cloud resources

Data scientists can seamlessly log in to and out of an R session in the cloud for experimentation and exploratory study. The R packages and tools in this category help data scientists or developers remotely access or interact with Azure cloud instances or services for convenient development.

- mrsdeploy - an R package that provides functions for establishing a remote session in a console application and for publishing and managing a web service that is backed by the R code block or script you provided.

- R Tools for Visual Studio - IDE with R support.

- RStudio Server - IDE for remote R session with access via Internet browser.

- JupyterHub - Jupyter notebook with multi-user access.

- IRKernel - R kernel for Jupyter notebook.

Scalable and advanced analytics

Scalable analytics and advanced machine (deep) learning model creation can be performed in R on cloud services, accelerated by specialized hardware such as GPUs. R packages and tools in this category allow one to perform large-scale R-based analytics on Azure with modern frameworks such as Spark, Hadoop, Microsoft Cognitive Toolkit, TensorFlow, and Keras. It is worth mentioning that many of the tools are pre-installed and configured for direct use on the Azure Data Science Virtual Machine.

Scalable analytics

- dplyrXdf - a dplyr backend for the XDF data format used in Microsoft ML Server.

- sparklyr - R interface for Apache Spark.

- SparkR - an R package that provides a light-weight frontend to use Apache Spark from R.

Deep learning

- CNTK-R - R bindings to the Cognitive Toolkit (CNTK) deep learning library.

- tensorflow - R interface to TensorFlow.

- mxnet - R interface to MXNet, bringing flexible and efficient GPU computing and state-of-the-art deep learning to R.

- keras - R interface to Keras.

- darch - Create deep architectures in R.

- deepnet - Implement some deep learning architectures and neural network algorithms, including BP, RBM, DBN, Deep autoencoder and so on.

- gpuR - R interface to use GPUs.

Integrations

- RevoScaleR - a collection of portable, scalable, and distributable R functions for importing, transforming, and analyzing data at scale, included with Microsoft ML Server.

- MicrosoftML - a package that provides state-of-the-art fast, scalable machine learning algorithms and transforms for R.

- h2o - R interface to H2O.

Application and service deployment

R-based applications can be easily deployed as services for end-users or developers. The R packages and tools in this category are used for deploying R-based analytics or applications as services or interfaces that can be conveniently consumed by end-users or developers.

- mrsdeploy - an R package included with Microsoft ML Server that provides functions for deploying easily consumable services from within an R session.

- AzureML - an R package to allow one to interact with Azure Machine Learning Studio for publishing R functions as API services.

- Azure Container Instances - service to allow running containerized R analytics in Azure.

- Azure Container Service - service that simplifies deployment, management, and operation of orchestrated containers of R analytics in Azure.

- Shiny server - Develop and publish Shiny based web applications online.

For more information

Companies around the world are using R to build enterprise-grade applications on Azure. For in-depth examples (with code and architecture), you can also find a selection of R based solutions for real-world use cases. A more detailed list of packages and tools for deploying R in Azure is provided at the link below, and will be updated as new tools become available.

GitHub (yueguoguo): R in Azure

Link wiki pages and work items, write math formulas in Wiki, Keyboard shortcuts and more…

Because it’s Friday: Kite Ballet

With a tip o' the hat to Buck, enjoy the acrobatics of these kites from a performance in Oregon in 2012, set to Bohemian Rhapsody. Even after watching it a few times I still don't get how the lines don't get tangled up.

That's all from the blog for this week (which has been an awesome one for me, hanging out with my new Cloud Developer Advocate colleagues). We'll be back with more on Tuesday, after taking a brief break for the Martin Luther King Day holiday here in the US. Have a great (long, if you have it too) weekend!

Announcing the extension of Azure IP Advantage to Azure Stack

Azure IP Advantage now covers workloads deployed to Azure Stack. As customers rely on Azure Stack to enable hybrid cloud scenarios and extend the reach of Azure to their own data centers or in hosted environments, they increasingly need to navigate unfamiliar IP risks inherent in the digital world. The Azure IP Advantage benefits, such as the uncapped IP indemnification of Azure services, including the open source software powering these services, or the defensive portfolio of 10,000 patents, are available to customers innovating in the hybrid cloud with Azure Stack.

Customers use Azure Stack to access cloud services on-premises or in disconnected environments. For example, oil and gas giant Schlumberger uses Azure Stack to enhance its drilling operations. Customers such as Saxo Bank also use Azure Stack in sovereign or regulated contexts where there is not an Azure region, while reusing the same application code globally. With Azure Stack, customers can rely on a consistent set of services and APIs to run their applications in a hybrid cloud environment. Azure IP Advantage IP protection benefits now cover customers consistently in the hybrid cloud.

With Azure IP Advantage, Azure Stack services receive uncapped indemnification from Microsoft, including for the open source software powering these services. Eligible customers can also access a defensive portfolio of 10,000 Microsoft patents to defend their SaaS applications in Azure Stack. This portfolio has been ranked among the top 3 cloud patent portfolios worldwide. They can also rely on a royalty-free springing license to protect them in the unlikely event Microsoft transfers a patent to a non-practicing entity.

As the cloud is often used for mission critical applications, considerations for choosing a cloud vendor are becoming wide-ranging and complex. When they select Azure and Azure Stack, customers are automatically covered by Azure IP Advantage, the best-in-industry IP protection program, for their hybrid cloud workloads.

Azure Analysis Services now available in East US, West US 2, and more

Since becoming generally available in April 2017, Azure Analysis Services has quickly become the clear choice for enterprise organizations delivering corporate business intelligence (BI) in the cloud. The success of any modern data-driven organization requires that information be available at the fingertips of every business user, not just IT professionals and data scientists, to guide their day-to-day decisions.

Self-service BI tools have made huge strides in making data accessible to business users. However, most business users don’t have the expertise or desire to do the heavy lifting that is typically required, including finding the right sources of data, importing the raw data, transforming it into the right shape, and adding business logic and metrics before they can explore the data to derive insights. With Azure Analysis Services, a BI professional can create a semantic model over the raw data and share it with business users so that all they need to do is connect to the model from any BI tool and immediately explore the data to gain insights. Azure Analysis Services uses a highly optimized in-memory engine to provide responses to user queries at the speed of thought.

We are excited to share that Azure Analysis Services is now available in 4 additional regions including East US, West US 2, USGov-Arizona and USGov-Texas. This means that Azure Analysis Services is available in the following regions: Australia Southeast, Brazil South, Canada Central, East US, East US 2, Japan East, North Central US, North Europe, South Central US, Southeast Asia, UK South, West Central US, West Europe, West India, West US and West US 2.

New to Azure Analysis Services? Find out how you can try Azure Analysis Services or learn how to create your first data model.

Xamarin University Presents: Ship better apps with Visual Studio App Center

At Microsoft Connect(); last November, we announced the general availability of Visual Studio App Center to help developers (Obj-C, Swift, Java, React Native, and Xamarin) quickly build, test, deploy, monitor, and improve their phone, tablet, desktop, and connected device apps with powerful, automated lifecycle services. As a .NET developer, you may already use the power of Visual Studio Tools for Xamarin to develop amazing apps in C#.

Now, with Visual Studio App Center, you can easily tap into automated cloud services for every stage of your development process, so you get higher quality apps into your users’ hands even faster.

To learn how to simplify and automate your app development pipeline, join Mark Smith for “Xamarin University Presents: Ship Better Apps with Visual Studio App Center” on Thursday, January 25th at 9 am PT / 12 pm ET / 5 pm UTC. Mark will demo the services available in App Center – from setting up continuous cloud builds to automated testing and deployment to post-release crash reporting and user and app analytics. You’ll leave ready to connect your first app and start improving your development process and your apps immediately.

Jam-packed with step-by-step demos, this session has something for everyone, from app development beginners to seasoned pros who’ve built dozens of apps.


In this webinar, you’ll:

- Connect your apps and add the Visual Studio App Center SDK in minutes

- Kick off continuous cloud builds, straight from your source control repo.

- Run automated UI tests on hundreds of real devices and hundreds of configurations

- Distribute to beta testers or app stores with every successful build, or on-demand

- Use real-time crash reports and analytics to monitor app health, fix problems fast, identify trends and understand what your users need and want

- Engage your users with push notifications and target specific audiences, geographies, or languages.

- Get technical guidance and advice from our app experts

We understand every development process is different, so we’ll show you how to use all of Visual Studio App Center services together and how to integrate specific services to work with your established processes and tools.

See you soon! Register now and get ready to start shipping amazing (five-star) apps with confidence.

Mark Smith, Principal Program Manager

Mark leads Xamarin University, where he helps developers learn how to utilize their .NET skills to build amazing mobile apps for Android, iOS, Windows and beyond. Prior to his career at Microsoft and Xamarin (acquired by Microsoft), Mark ran a consulting business, specializing in custom development

Spectre mitigations in MSVC

Microsoft is aware of a new publicly disclosed class of vulnerabilities, called “speculative execution side-channel attacks,” that affect many operating systems and modern processors, including processors from Intel, AMD, and ARM. On the MSVC team, we’ve reviewed information in detail and conducted extensive tests, which showed the performance impact of the new /Qspectre switch to be negligible. This post is intended as a follow-up to Terry Myerson’s recent Windows System post with a focus on the assessment for MSVC. If you haven’t had a chance to read Terry’s post you should take a moment to read it before reading this one.

The Spectre and Meltdown vulnerabilities

The security researchers that discovered these vulnerabilities identified three variants that could enable speculative execution side-channel attacks. The following table from Terry’s blog provides the decoder ring for each of these variants:

| Exploited Vulnerability | CVE | Exploit Name | Public Vulnerability Name |
|---|---|---|---|
| Spectre | 2017-5753 | Variant 1 | Bounds Check Bypass |
| Spectre | 2017-5715 | Variant 2 | Branch Target Injection |
| Meltdown | 2017-5754 | Variant 3 | Rogue Data Cache Load |

The mitigations for variant 2 and variant 3 are outside the scope of this post but are explained in Terry’s post. In this post, we’ll provide an overview of variant 1 and describe the steps that we’ve taken with the MSVC compiler to provide mitigation assistance.

What actions do developers need to take?

If you are a developer whose code operates on data that crosses a trust boundary then you should consider downloading an updated version of the MSVC compiler, recompiling your code with the /Qspectre switch enabled, and redeploying your code to your customers as soon as possible. Examples of code that operates on data that crosses a trust boundary include code that loads untrusted input that can affect execution such as remote procedure calls, parsing untrusted input for files, and other local inter-process communication (IPC) interfaces. Standard sandboxing techniques may not be sufficient: you should investigate your sandboxing carefully before deciding that your code does not cross a trust boundary.

In current versions of the MSVC compiler, the /Qspectre switch only works on optimized code. You should make sure to compile your code with any of the optimization switches (e.g., /O2 or /O1 but NOT /Od) to have the mitigation applied. Similarly, inspect any code that uses #pragma optimize([stg], off). Work is ongoing now to make the /Qspectre mitigation work on unoptimized code.

The MSVC team is evaluating the Microsoft Visual C++ Redistributables to make certain that any necessary mitigations are applied.

What versions of MSVC support the /Qspectre switch?

All versions of Visual Studio 2017 version 15.5 and all Previews of Visual Studio 2017 version 15.6 already include an undocumented switch, /d2guardspecload, that is currently equivalent to /Qspectre. You can use /d2guardspecload to apply the same mitigations to your code. Please switch to /Qspectre as soon as you get a compiler that supports it, because the /Qspectre switch will be maintained with new mitigations going forward.

The /Qspectre switch will be available in MSVC toolsets included in all future releases of Visual Studio (including Previews). We will also release updates to some existing versions of Visual Studio to include support for /Qspectre. Releases of Visual Studio and Previews are announced on the Visual Studio Blog; update notifications are included in the Notification Hub. Visual Studio updates that include support for /Qspectre will be announced on the Visual C++ Team Blog and the @visualc Twitter feed.

We initially plan to include support for /Qspectre in the following:

- Visual Studio 2017 version 15.6 Preview 4

- An upcoming servicing update to Visual Studio 2017 version 15.5

- A servicing update to Visual Studio 2017 “RTW”

- A servicing update to Visual Studio 2015 Update 3

If you’re using an older version of MSVC we strongly encourage you to upgrade to a more recent compiler for this and other security improvements that have been developed in the last few years. Additionally, you’ll benefit from increased conformance, code quality, and faster compile times as well as many productivity improvements in Visual Studio.

What’s the performance impact?

Our tests show the performance impact of /Qspectre to be negligible. We have built all of Windows with /Qspectre enabled and did not notice any performance regressions of concern. Performance gains from speculative execution are lost where the mitigation is applied but the mitigation was needed in a relatively small number of instances across the large codebases that we recompiled. We advise all developers to evaluate the impact in the context of their applications and workloads as codebases vary greatly.

If you know that a particular block of your code is performance-critical (say, in a tight loop) and does not need the mitigation applied, you can selectively disable the mitigation with __declspec(spectre(nomitigation)). Note that the __declspec is not available in compilers that only support the /d2guardspecload switch.
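As a minimal sketch of how that opt-out might be applied, the example below marks one function with the __declspec. The macro SPECTRE_NOMITIGATION and the function sum_trusted are hypothetical names introduced only for illustration; the macro expands to nothing on other compilers, and it assumes an MSVC toolset that supports /Qspectre rather than only /d2guardspecload.

```cpp
#include <cstddef>
#include <cstdint>

// SPECTRE_NOMITIGATION is a hypothetical convenience macro, not part of MSVC.
// Under MSVC it expands to the __declspec described above; elsewhere it is empty.
#if defined(_MSC_VER)
#define SPECTRE_NOMITIGATION __declspec(spectre(nomitigation))
#else
#define SPECTRE_NOMITIGATION
#endif

// Hypothetical hot loop over data the program produced itself, so the
// index never crosses a trust boundary and the barrier is not needed here.
SPECTRE_NOMITIGATION
std::uint32_t sum_trusted(const std::uint8_t* data, std::size_t length) {
    std::uint32_t total = 0;
    for (std::size_t i = 0; i < length; ++i) {
        total += data[i];
    }
    return total;
}
```

Keeping the opt-out on a small, clearly named function makes it easier to audit later that no untrusted index ever reaches the unmitigated code.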

Understanding variant 1

Variant 1 represents a new vulnerability class that software developers did not previously realize they needed to defend against. To better understand the issue, it’s helpful to consider the following example code:

if (untrusted_index < array1_length) {
    unsigned char value = array1[untrusted_index];
    unsigned char value2 = array2[value * 64];
}

In the above example, the code performs an array-bounds check to ensure that untrusted_index is less than the length of array1. This is needed to ensure that the program does not read beyond the bounds of the array. While this appears to be sound as written, it does not take into account microarchitectural behaviors of the CPU involving speculative execution. In short, it is possible that the CPU may mispredict the conditional branch when untrusted_index is greater than or equal to array1_length. This can cause the CPU to speculatively execute the body of the if statement. As a consequence of this, the CPU may perform a speculative out-of-bounds read of array1 and then use the value loaded from array1 as an index into array2. This can create observable side effects in the CPU cache that reveal information about the value that has been read out-of-bounds. While the CPU will eventually recognize that it mispredicted the conditional branch and discard the speculatively executed state, it does not discard the residual side effects in the cache, which will remain. This is why variant 1 exposes a speculative execution side-channel.

For a deeper explanation of variant 1, we encourage you to read the excellent research by Google Project Zero and the authors of the Spectre paper.

Mitigating variant 1

Software changes are required to mitigate variant 1 on all currently affected CPUs. This can be accomplished by employing instructions that act as a speculation barrier. For Intel and similar processors (including AMD) the recommended instruction is LFENCE. ARM recommends a conditional move (ARM) or conditional selection instruction (AArch64) on some architectures and the use of a new instruction known as CSDB on others. These instructions ensure that speculative execution down an unsafe path cannot proceed beyond the barrier. However, applying this guidance correctly requires developers to determine the appropriate places to make use of these instructions such as by identifying instances of variant 1.
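Placed by hand, such a barrier might look like the following sketch, which wraps the bounds-checked pattern from the earlier example in a function and issues LFENCE through the _mm_lfence intrinsic after the check and before the first dependent load. The names SPECULATION_BARRIER and read_with_barrier are hypothetical, and on targets without SSE2 the macro is a no-op here, so this illustrates placement only and is not a complete cross-platform mitigation.

```cpp
#include <cstddef>

// SPECULATION_BARRIER is a hypothetical macro for this sketch.
#if defined(_MSC_VER) && (defined(_M_IX86) || defined(_M_X64))
#include <intrin.h>
#define SPECULATION_BARRIER() _mm_lfence()
#elif defined(__SSE2__)
#include <emmintrin.h>
#define SPECULATION_BARRIER() _mm_lfence()
#else
// Other architectures need the instructions their vendor guidance describes;
// this sketch emits nothing for them.
#define SPECULATION_BARRIER() ((void)0)
#endif

// array2 is assumed to hold at least 255 * 64 + 1 bytes.
unsigned char read_with_barrier(const unsigned char* array1,
                                std::size_t array1_length,
                                const unsigned char* array2,
                                std::size_t untrusted_index) {
    unsigned char value2 = 0;
    if (untrusted_index < array1_length) {
        // Barrier sits between the bounds check and the dependent loads,
        // so the loads cannot run ahead of the resolved branch.
        SPECULATION_BARRIER();
        unsigned char value = array1[untrusted_index];
        value2 = array2[value * 64];
    }
    return value2;
}
```

The architectural result is unchanged: in-bounds indexes return the dependent byte and out-of-bounds indexes return 0. Only the speculative behavior differs.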

In order to help developers mitigate this new issue, the MSVC compiler has been updated with support for the /Qspectre switch which will automatically insert one of these speculation barriers when the compiler detects instances of variant 1. In this case the compiler detects that a range-checked integer is used as an index to load a value that is used to compute the address of a subsequent load. If you compile the example above with and without /Qspectre, you will see the following code generation difference on x86:

Without /Qspectre:

?example@@YAEHHPAH0@Z PROC
    mov ecx, DWORD PTR _index$[esp-4]
    cmp ecx, DWORD PTR _length$[esp-4]
    jge SHORT $LN4@example
    mov eax, DWORD PTR _array$[esp-4]
    ; no lfence here
    mov dl, BYTE PTR [eax+ecx*4]
    mov eax, DWORD PTR _array2$[esp-4]
    movzx ecx, dl
    shl ecx, 8
    mov al, BYTE PTR [ecx+eax]
$LN4@example:

With /Qspectre:

?example@@YAEHHPAH0@Z PROC
    mov ecx, DWORD PTR _index$[esp-4]
    cmp ecx, DWORD PTR _length$[esp-4]
    jge SHORT $LN4@example
    mov eax, DWORD PTR _array$[esp-4]
    lfence
    mov dl, BYTE PTR [eax+ecx*4]
    mov eax, DWORD PTR _array2$[esp-4]
    movzx ecx, dl
    shl ecx, 8
    mov al, BYTE PTR [ecx+eax]
$LN4@example:

As the above shows, the compiled code under /Qspectre now contains the explicit speculation barrier instruction on line 6, which prevents speculation from going down the unsafe path, thus mitigating the issue. (For clarity, the listing without /Qspectre includes a comment at the same position, introduced with a ; in assembly.)

It is important to note that there are limits to the analysis that MSVC and compilers in general can perform when attempting to identify instances of variant 1. As such, there is no guarantee that all possible instances of variant 1 will be instrumented under /Qspectre.

References

For more details please see the official Microsoft Security Advisory ADV180002, Guidance to mitigate speculative execution side-channel vulnerabilities. Guidance is also available from Intel, Speculative Execution Side Channel Mitigations, and ARM, Cache Speculation Side-channels. We’ll update this blog post as other official guidance is published.

In closing

We on the MSVC team are committed to the continuous improvement and security of your Windows software, which is why we have taken steps to help developers mitigate variant 1 with the new /Qspectre flag.

We encourage you to recompile and redeploy your vulnerable software as soon as possible. Continue watching this blog and the @visualc Twitter feed for updates on this topic.

If you have any questions, please feel free to ask us below. You can also send us your comments through e-mail at visualcpp@microsoft.com, through Twitter @visualc, or Facebook at Microsoft Visual Cpp. Thank you.

Azure Security Center adds support for custom security assessments

Azure Security Center monitors operating system (OS) configurations using a set of 150+ recommended rules for hardening the OS, including rules related to firewalls, auditing, password policies, and more. If a machine is found to have a vulnerable configuration, a security recommendation is generated. Today, we are pleased to preview a new feature that allows you to customize these rules and add new ones to exactly match your desired Windows configurations.

The new custom security configurations are defined as part of the security policy, and allow you to:

- Enable and disable a specific rule.

- Change the desired setting for an existing rule (e.g. passwords should expire in 60 days instead of 30).

- Add a new rule based on the supported rule types including registry, audit policy, and security policy.

Note: OS Security Config customization is available only to Security Center users in the Standard tier, and only at the subscription level.

To get started, open Security Center, select Security policy, choose a subscription, and select “Edit security configurations (preview)” as shown below.

In the “Edit Security Configurations rules” blade, you can download the configuration file (JSON format), edit the rules, upload the modified file, and save to apply your changes. The customized rule set will then be applied to all applicable resource groups and virtual machines during the next assessment (up to 24 hours).
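The download, edit, and upload cycle described above can be sketched in Python. This is only an illustration: the field names used here (`rules`, `ruleName`, `ruleType`, `expectedValue`, `state`) and the sample rules are assumptions made for the example, not the documented file schema, so check the documentation page for the real format before editing your own file.

```python
import json

# NOTE: this structure and its field names are hypothetical placeholders,
# not the actual Security Center configuration schema.
SAMPLE_CONFIG = """
{
  "rules": [
    {"ruleName": "Maximum password age", "ruleType": "SecurityPolicy",
     "expectedValue": "30", "state": "Enabled"},
    {"ruleName": "Audit logon events", "ruleType": "AuditPolicy",
     "expectedValue": "Success and Failure", "state": "Enabled"}
  ]
}
"""


def customize(config, rule_name, *, expected_value=None, state=None):
    """Return a copy of config with one rule's desired setting and/or state changed."""
    updated = json.loads(json.dumps(config))  # deep copy via a JSON round trip
    for rule in updated["rules"]:
        if rule["ruleName"] == rule_name:
            if expected_value is not None:
                rule["expectedValue"] = expected_value
            if state is not None:
                rule["state"] = state
            return updated
    raise KeyError(f"no rule named {rule_name!r}")


config = json.loads(SAMPLE_CONFIG)
# Change the desired setting of an existing rule (60 days instead of 30).
config = customize(config, "Maximum password age", expected_value="60")
# Disable a specific rule.
config = customize(config, "Audit logon events", state="Disabled")
print(json.dumps(config, indent=2))
```

Working on a copy rather than mutating the downloaded data keeps the original available for comparison before you upload the modified file.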

To learn more about the new feature and file editing guidelines, please visit our documentation page.

Compatibility Level 140 is now the default for Azure SQL Database

Database Compatibility Level 140 is now the default for new databases created in Azure SQL Database across almost all regions. At this point, 539,903 databases in Azure SQL Database are already running at Compatibility Level 140.

Frequently asked questions related to this announcement:

Why move to database Compatibility Level 140?

The biggest change is the enabling of the adaptive query processing feature family, but there are also query processing fixes and batch mode improvements. For details on what Compatibility Level 140 specifically enables, see the blog post Public Preview of Compatibility Level 140 for Azure SQL Database.

What do you mean by "database Compatibility Level 140 is now the default"?

If you create a new database and don’t explicitly designate COMPATIBILITY_LEVEL, the database Compatibility Level 140 will be used.

Does Microsoft automatically update the database compatibility level for existing databases?

No, we do not update database compatibility level for existing databases. This is up to customers to do at their own discretion. With that said, we highly recommend customers plan on moving to the latest compatibility level in order to leverage the latest improvements.

My application isn’t certified for database Compatibility Level 140 yet. For this scenario, what should I do when I create new databases?

For this scenario, we recommend that database configuration scripts explicitly designate the application-supported COMPATIBILITY_LEVEL rather than rely on the default.
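As a minimal sketch of that recommendation, the Python helper below builds the T-SQL statement a configuration script could run right after creating a database. The helper name `set_compat_level_sql`, the database name `SalesDb`, and the list of accepted levels are assumptions for illustration; adjust the levels to what your server actually supports.

```python
# Levels this sketch accepts; an assumption, not an authoritative list.
ACCEPTED_LEVELS = {100, 110, 120, 130, 140}

# Query to confirm the level each database is currently running at.
CHECK_LEVELS_SQL = "SELECT name, compatibility_level FROM sys.databases;"


def set_compat_level_sql(database: str, level: int) -> str:
    """Build an ALTER DATABASE statement that pins the compatibility level."""
    if level not in ACCEPTED_LEVELS:
        raise ValueError(f"unexpected compatibility level: {level}")
    # Escape closing brackets so the name is safe inside [ ] delimiters.
    escaped = database.replace("]", "]]")
    return f"ALTER DATABASE [{escaped}] SET COMPATIBILITY_LEVEL = {level};"


# An application certified only for level 130 pins it explicitly
# instead of relying on the new default of 140.
print(set_compat_level_sql("SalesDb", 130))
print(CHECK_LEVELS_SQL)
```

Pinning the level in the script means new databases behave the same way regardless of when the default changes.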

I created a logical server before 140 was the default database compatibility level. What impact does this have?

The master database of your logical server will reflect the database compatibility level that was the default at the time of the logical server creation. New databases created on a logical server with an older compatibility level for the master database will still use database Compatibility Level 140 if not explicitly designated. The master database compatibility cannot be changed without recreating the logical server. Having master at an older database compatibility level will not impact user database behavior.

I would like to change to the latest database compatibility level, any best practices for doing so?

For pre-existing databases running at lower compatibility levels, the recommended workflow for upgrading the query processor to a higher compatibility level is detailed in the article Change the Database Compatibility Mode and Use the Query Store. Note that this article refers to compatibility level 130 and SQL Server, but the same methodology applies for moves to 140 for SQL Server and Azure SQL DB.

Accelerate your business revolution with IoT in Action

There’s a revolution underway that is positioning companies to take operational efficiency to new levels and inform the next generation of products and services. This revolution, of course, is the Internet of Things (IoT).

Here at Microsoft, we’re committed to helping our customers harness the power of IoT through our Azure IoT solutions. We’re also committed to helping customers take the first steps through our IoT in Action series. Our next delivery is coming February 13, 2018 in San Francisco, which I’d encourage you to attend.

But first, I’d like to introduce you to some recent updates to Azure IoT Suite that are making IoT solutions easier and more robust than ever.

Azure IoT powers the business revolution

With our long history of driving business success and digital transformation for our customers, it’s no surprise that we’re also focused on powering the business revolution through our robust Azure IoT suite of products.

So how does Azure IoT benefit businesses?

First off, it’s a quick and scalable solution. Our preconfigured solutions can accelerate your development process, so you can get up and running quickly. You can connect existing devices and add new ones using our device SDKs for platforms including Linux, Windows, and real-time operating systems. Scaling is easy, whether you want to add a few devices or a million.

Azure IoT Suite can easily integrate with your existing systems and applications like Salesforce, SAP, and Oracle. You can also enhance security by setting up individual identities and credentials for each of your connected devices. Plus, Azure comes complete with built-in artificial intelligence and built-in machine learning.

Watch the following interview with Sam George, Director of Azure IoT at Microsoft, to learn how Azure IoT is accelerating the digital transformation for businesses.

So, what’s new with Azure IoT?

Microsoft continues to evolve its suite to offer you the world’s best IoT technology. Here are three notable releases that are smoothing the road to IoT.

Microsoft IoT Central

This highly scalable SaaS solution was recently released for public preview. It delivers a low-code way for companies to build IoT production grade applications in hours without needing to manage backend infrastructure or hire specialized talent. Features include device authentication, secure connectivity, extensive device SDKs with multi-language support, and native support for IoT protocols. Learn more about Microsoft IoT Central.

Azure IoT Hub

Use the Azure IoT Hub to connect, monitor, and manage billions of IoT assets. This hub enables you to securely communicate with all your things, set up identities and credentials for individual connected devices, and quickly register devices at scale with our provisioning service. Learn more about Azure IoT Hub Device Provisioning Service.

Azure Stream Analytics on IoT Edge

This on-demand, real-time analytics service is now available for your edge devices. Shifting cloud analytics and custom business logic closer to the devices where the data is produced is a great solution for customers who need low latency, resiliency, and efficient use of bandwidth. It also enables organizations to focus more on business insights instead of data management. Learn more about Azure Stream Analytics on IoT Edge.

Register for IoT in Action

To learn more about how Azure IoT can help you accelerate your business revolution, attend IoT in Action in San Francisco on February 13.

Get expert insights from IoT industry pioneers like James Whittaker and Sam George. Learn how to unlock the intelligent edge with Azure IoT. Take an in-depth exploration of two Microsoft approaches to building IoT solutions, Azure PaaS and SaaS. Find out how to design and build a cloud-powered AI platform with Microsoft Azure + AI. Plus, connect with partners who can help you take your IoT solution from concept to reality.

Register for this free one-day event today; space is limited.

ADF v2: Visual Tools enabled in public preview

ADF v2 public preview was announced at Microsoft Ignite on Sep 25, 2017. With ADF v2, we added flexibility to the ADF app model and enabled control flow constructs that now facilitate looping, branching, conditional constructs, on-demand executions, and flexible scheduling through various programmatic interfaces such as Python, .NET, PowerShell, REST APIs, and ARM templates. One consistent piece of customer feedback we received was to enable a rich, interactive visual authoring and monitoring experience that allows users to create, configure, test, deploy, and monitor data integration pipelines without any friction. We listened to your feedback and are happy to announce the release of visual tools for ADF v2. The main goal of the ADF visual tools is to allow you to be productive with ADF by getting pipelines up and running quickly without writing a single line of code. You can use a simple and intuitive code-free interface to drag and drop activities on a pipeline canvas, perform test runs, debug iteratively, and deploy and monitor your pipeline runs. With this release, we are also providing guided tours on how to use the visual authoring and monitoring features, along with a way to give us feedback.

Our goal with visual tools for ADF v2 is to increase productivity and efficiency for both new and advanced users with intuitive experiences. You can get started by clicking the Author & Monitor tile in your provisioned v2 data factory blade.

Check out some of the exciting features enabled with the new visual tools in ADF v2. You can also watch the short video below.

Get Started Quickly

- Create your first ADF v2 pipeline

- Quickly copy data from a bunch of data sources using the copy wizard

- Configure SSIS IR to lift and shift SSIS packages to Azure

- Set up code repo (VSTS GIT) for source control, collaboration, versioning, etc.

Visual Authoring

Author Control Flow Pipelines

Create pipelines, drag and drop activities, connect them on-success, on-failure, on-completion.
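To make the on-success, on-failure, and on-completion connections concrete, here is a toy Python model of how such dependency conditions decide which activities run. It is not the ADF API or its JSON format: the function `activities_to_run`, the activity names, and the simplified rule that an activity is not run when its upstream never ran are all assumptions made for illustration.

```python
def activities_to_run(pipeline, outcomes):
    """Return the activities that execute, in order.

    pipeline: dict mapping activity name -> list of (upstream, condition),
              listed in execution order. condition is "Succeeded", "Failed",
              or "Completed" (runs after either result).
    outcomes: dict mapping activity name -> simulated result if it runs.
    """
    results = {}
    ran = []
    for name, dependencies in pipeline.items():
        satisfied = all(
            upstream in results
            and (condition == "Completed" or results[upstream] == condition)
            for upstream, condition in dependencies
        )
        if satisfied:
            # Activities without a simulated outcome are assumed to succeed.
            results[name] = outcomes.get(name, "Succeeded")
            ran.append(name)
    return ran


# Hypothetical pipeline: one copy step with three differently connected followers.
pipeline = {
    "CopyData": [],
    "TransformWithSpark": [("CopyData", "Succeeded")],   # on-success
    "SendFailureAlert": [("CopyData", "Failed")],        # on-failure
    "Cleanup": [("CopyData", "Completed")],              # on-completion
}

print(activities_to_run(pipeline, {"CopyData": "Failed"}))
# ['CopyData', 'SendFailureAlert', 'Cleanup']
```

When the copy step succeeds instead, the same model runs the transform and cleanup steps and leaves the failure alert out.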

Create Azure & Self Hosted Integration runtimes

Create a self hosted integration runtime for hybrid data movement or an Azure-SSIS IR for lifting and shifting SSIS packages to Azure. Create linked service connections to your data stores or compute.

Support for all control flow activities running on Azure computes

Control Flow Activities:

- HDInsight Hive, HDInsight Pig, HDInsight Map Reduce, HDI Streaming, HDI Spark, U-SQL, Stored Procedure, Web, For Each, Get Metadata, Look up, Execute Pipeline

Support for Azure Computes:

- HDI (on-demand, BYOC), ADLA, Azure Batch

Iterative development and debugging

Do Test Runs before attaching a trigger on the pipeline and running on-demand or on a schedule.

Parameterize pipelines and datasets

Parameterize using expressions, system variables.

Rich Validation Support

You can now validate your pipelines to find missing or incorrect property configurations. Simply click the Validate button in the pipeline canvas. This generates the validation output in a side drawer. You can then click each entry to go straight to the location of the missing or incorrect configuration.

Trigger pipelines

Trigger on-demand, run pipelines on schedule.

Use VSTS GIT

VSTS GIT for source control, collaboration, versioning, etc.

Copy Data

Data Stores (65)

Support for 65 data stores. 18 stores with first class support that require users to provide just configuration values. The remaining 47 stores can be used with JSON.

18 stores with first class support:

- Azure Blob, Azure CosmosDB, Azure Database for MySQL, Azure Data Lake Store, Amazon Redshift, Amazon S3, Azure SQL DW, Azure SQL, Azure Table, File System, HDFS, MySQL, ODBC, Oracle, Salesforce, SAP HANA, SAP BW, SQL Server

47 Stores with JSON support:

- Search Index, Cassandra, HTTP file, Mongo DB, OData, Relational table, Dynamics 365, Dynamics CRM, Web table, AWS Marketplace, PostgreSQL, Concur, Couchbase, Drill, Oracle Eloqua, Google Big Query, Greenplum, HBase, Hive, HubSpot, Apache Impala, Jira, Magento, MariaDB, Marketo, PayPal, Phoenix, Presto, QuickBooks, ServiceNow, Shopify, Spark, Square, Xero, Zoho, DB2, FTP, GE Historian, Informix, Microsoft Access, MongoDB, SAP Cloud for customer

Use copy wizard to quickly copy data from a bunch of data sources

The familiar ADF v1 copy wizard is now available in ADF v2 for one-time quick imports. The copy wizard generates pipelines with copy activities on the authoring canvas. The copy activities can then be extended to run other activities, such as Spark, U-SQL, or Stored Procedure, on success or on failure, to build up the entire control flow pipeline.

Guided tour

Click on the Information Icon in the lower left. You can then click Guided tour to get step by step instructions on how to visually monitor your pipeline and activity runs.

Feedback

Click on the Feedback icon to give us feedback on various features or any issues that you may be facing.

Select data factory

Hover on the Data Factory icon on the top left. Click on the Arrow icon to see a list of Azure subscriptions and data factories that you can monitor.

Visual Monitoring

List View Monitoring

Monitor pipeline, activity & trigger runs with a simple list view interface. All the runs are displayed in local browser time zone. You can change the time zone and all the date time fields will snap to the selected time zone.

Monitor Pipeline Runs:

List view showcasing each pipeline run for your data factory v2 pipelines.

Monitor Activity Runs:

List view showcasing activity runs corresponding to each pipeline run. Click Activity Runs icon under the Actions column to view activity runs for each pipeline run.

Important note: You need to click the Refresh icon on top to refresh the list of pipeline and activity runs. Auto-refresh is currently not supported.

Monitor Trigger Runs:

Rich ordering and filtering

Order pipeline runs in descending or ascending order by Run Start, and filter pipeline runs by pipeline name, run start, and run status.

Add/Remove columns to list view

Right click the list view header and choose columns that you want to appear in the list view.

Adjust column widths in list view

Increase and decrease the column widths in list view by simply hovering over the column header.

Monitor Integration Runtimes

Monitor health of your Self Hosted, Azure, Azure-SSIS Integration runtimes.

Cancel/Re-run your pipeline runs

Cancel a pipeline run or re-run a pipeline run with already defined parameters.

This is the first public release of ADF v2 visual tools. We are continuously working to refresh the released bits with new features based on customer feedback. Get more information and detailed steps for using the ADF v2 visual tools.

Get started building pipelines easily and quickly using Azure Data Factory. If you have any feature requests or want to provide feedback, please visit the Azure Data Factory forum.

How Azure Security Center helps analyze attacks using Investigation and Log Search

Every second counts when you are under attack. Azure Security Center (ASC) uses advanced analytics and global threat intelligence to detect malicious threats, and the new capabilities empower you to respond quickly. This blog post showcases how an analyst can leverage the Investigation and Log Search capabilities in Azure Security Center to determine whether an alert represents a security breach, and to understand the scope of that breach.

To learn more about the ASC Investigation feature, see the article Investigate Incidents and Alerts in Azure Security Center (Preview). Let’s drill into an alert and see what more we can learn using these new features.

Security Center Standard tier users can view a dashboard similar to the one pictured below. You can select the Standard tier or the free 90-day trial from the Pricing Tier blade in the Security Center policy. On the screen below, click the Security Alerts graph for a list of alerts. This view includes alerts triggered by Security Center detections as well as integrated alerts from other security solutions. When possible, Security Center combines alerts that are part of a chain of attacker activity into incidents. The three interconnected dots icon highlighted in the screenshot below indicates an incident, while the blue shield icon indicates a single alert.

Clicking on an incident launches the incident details pane showing all the alerts that are part of it. Selecting a particular alert gives more information about that alert. The alert detail blade also includes an Investigate button (highlighted below) that initiates the investigation process for this alert. In the example alert, PowerShell is seen using an invoke expression to download a suspicious-looking batch file from the Internet. To understand more about this alert and the context of the security incident, you can launch an investigation by clicking the Investigate button. If an investigation has already been started, this button resumes that existing investigation.

The investigation dashboard contains a visual, interactive graph of entities such as accounts, machines, and other alerts that are related to the initial alert or incident. Selecting an entity will show other related entities. For example, selecting a user account that has logged on to the machine where the alert occurred will show any other machines where that account logged on and any other alerts involving that account. You can navigate through related entities on the graph, exploring context about the entity and tagging anything that appears related to the security breach to build an investigative dossier. At this stage, the graph shows items that are one or two hops away from the initial alert, but as you click on other nodes in the graph, items related to the newly-selected node will appear.

An investigation will usually start by trying to understand the chronology of the incident, focusing on the logical sequence of events that happened around the time the alert was triggered. To set the time period for analysis, use the time scope selector dropdown at the top left of the graph. You can specify the exact time range on which to focus, using either a preset time range or a custom range. In the current example the first alert triggered around 9:47 AM, so a custom time range from, say, 9:40 to 9:53 would be a good starting point. The selected time range limits the alerts and entities that are added to the graph. As you navigate the graph, related entities are only added if the relationship occurred in this time range. For example, an account that logged on to the host in this time range would be shown. The time range also limits the events queried in the Exploration tab to those occurring inside the current time range.

In this dashboard, relevant information about a selected entity is shown on the right side of the screen (screenshot below) in a series of tabs. The Info tab shows summary information about the entity, for example, selecting a machine shows OS type, IP address, and geographic location. The Entities tab shows entities, alerts, and incidents directly related to the selected item. In our example, selecting the machine identified in the alert (SAIPROD) shows alerts related to suspicious PowerShell activity, suspicious account creation, attempted AppLocker bypass, and disabling of critical services. Clicking on these alerts gives more details about them. The Search tab shows the event logs available that contain events related to the selected entity. For our example host it shows SecurityEvent, SecurityDetection, and Heartbeat.

Although it is possible to do your own searching through the logs from the Search tab, the Exploration tab can give you quicker access to relevant events and speed up the investigation process.

Let's look at the Exploration tab in more detail.

The Exploration tab contains a set of queries that highlight some of the most relevant data for an investigation of activity on a given entity, presenting the data in a way that is easily consumable. For example, in the screenshot below the Accounts failed to log on query shows two logon attempts where the user name does not exist. You can click on individual items in this list to see more details about the specific event, or click the magnifying glass icon to view all of these events in the Log Search screen. Exploration queries are only shown when there is relevant event data. The queries also differ for different entity types, so expect the contents of this tab to change as you navigate between entities in the graph.

We also see data in the query for rarely used processes often employed by attackers. It shows the processes and command lines of operating system executables that attackers often use.

In this case, looking at the command line activity (above) displayed by the rarely used processes query, we can see that the attacker first issues the “whoami” command, which displays the currently logged-on user. We also see a range of other suspicious-looking activity, such as a user account being added, a registry value being added to the Windows run key, PowerShell being used to download a batch file, and the installation and startup of a Windows service. The tab view for this query may show only a subset of the available data. If there is more data, the View all link is shown at the bottom of the query results. Clicking this link takes you to Log Search and displays all of the data. Click the TimeGenerated column header to order by time so that you get a true chronological picture.

To return to the investigation graph be sure to click on the Investigation Dashboard breadcrumb item at the top of the Log Search screen. Using the browser Back button will take you out of the investigation altogether.

As we find interesting artifacts related to the incident, we can add them to it. For example, the creation of a new user account adninistrator generated an alert around suspicious account creation. Looking at the sequence of command lines, this appears to be related to the current incident, so we can add it to the incident as shown in the screenshot below. The idea is that as we add more artifacts to the incident, we get a better summary of how the attack progressed. Adding this account could help you extend the graph to a second virtual machine (VM) if the attackers used it for lateral movement, or reveal unusual processes run by that account on other VMs. Any such VMs can be added to the incident as additional machines.

One interesting thing that jumps out from this expanded view is that the Logonid (0x144a52) for all attacker commands is the same, meaning that all of these commands were executed by the same account in the same logon session. This is a good analysis data point, often used in investigations. Focusing on the activity within the particular logon session from which the alert originated will tell you a lot about what else the attacker was doing, or alternatively allows you to quickly determine that the alert might be a false positive when there is no additional suspicious activity.

Using the Log Search capability of Azure Security Center can help you explore this in more detail.

Log Search

Security Center uses Log Analytics search to retrieve and analyze your security data. Log Analytics includes a query language to quickly retrieve and consolidate data. From Security Center, you can use Log Analytics search to construct queries and analyze collected data. You may find it easier to use one of the exploration queries (clicking on “View All” or the magnifier icon to get to Log Search) as a starting point for your query.

Once in Log Search, you can set the event type to look for. In this case we used “EventID == 4688” for Windows Process Creation events. These contain the CommandLine data that we are interested in. We narrow this further to show only events where the SubjectLogonId == "0x144a52". Adding the project operator displays only the fields that we are interested in. The search returns results similar to those below.
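The filter-and-project logic described above can be mimicked in a few lines of Python. The sample records are made up for illustration (including the placeholder date and the second logon session), and only the field names mirror those mentioned in the text. In Log Analytics itself the equivalent search would look roughly like `SecurityEvent | where EventID == 4688 | where SubjectLogonId == "0x144a52" | project TimeGenerated, CommandLine`, assuming the standard SecurityEvent table.

```python
from datetime import datetime

# Made-up sample events; the date is a placeholder and the records are not real log data.
events = [
    {"TimeGenerated": datetime(2017, 1, 1, 9, 47, 40), "EventID": 4688,
     "SubjectLogonId": "0x144a52", "CommandLine": "systeminfo"},
    {"TimeGenerated": datetime(2017, 1, 1, 9, 47, 5), "EventID": 4688,
     "SubjectLogonId": "0x144a52", "CommandLine": "whoami"},
    {"TimeGenerated": datetime(2017, 1, 1, 9, 47, 20), "EventID": 4688,
     "SubjectLogonId": "0x3e7", "CommandLine": "svchost.exe -k netsvcs"},
    {"TimeGenerated": datetime(2017, 1, 1, 9, 46, 50), "EventID": 4624,
     "SubjectLogonId": "0x144a52", "CommandLine": ""},
    {"TimeGenerated": datetime(2017, 1, 1, 9, 48, 10), "EventID": 4688,
     "SubjectLogonId": "0x144a52", "CommandLine": "qwinsta"},
]


def search(events, event_id, logon_id):
    """Keep matching events, order them by time, and project two fields."""
    matches = [
        e for e in events
        if e["EventID"] == event_id and e["SubjectLogonId"] == logon_id
    ]
    matches.sort(key=lambda e: e["TimeGenerated"])
    return [(e["TimeGenerated"], e["CommandLine"]) for e in matches]


rows = search(events, event_id=4688, logon_id="0x144a52")
for time_generated, command_line in rows:
    print(time_generated.strftime("%H:%M:%S"), command_line)
```

Sorting by TimeGenerated gives the chronological view of the logon session, which is what makes the sequence of attacker commands easy to read.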

Looking at the search results reveals the following:

- An attacker tried to log in using brute force techniques and succeeded. Please note that this is not shown in the 4688 events, but is suggested by the failed logon attempts we saw earlier.

- Once logged in, the attacker launched a command prompt and issued the “whoami” command, which displays who the current logged on user is. The attacker then ran "systeminfo" to get the detailed configuration information about the host VM. The "qwinsta" command was also issued to get information about RDP sessions and other sessions on the VM.

- PowerShell was used to run an invoke expression to download a batch file from the Internet. The subsequent commands are likely contained in the batch file which we see executed at 9:49:07 since we can see the execution times of the following processes occurring within the same second.

- The batch file first disables the firewall. This is a known attacker technique. Once the initial compromise is achieved they often take steps to lower the security settings of a system such as disabling the firewall, Antivirus, and Shared Access.

- Next, we see the addition of a new user account called 'adninstrator'. The account name closely resembles the standard Windows administrator account name, so that a human administrator is less likely to notice it.

- Then a suspicious service called "svvchost" is created and started.

- The search also shows a registry addition that installs an auto-run command. On closer analysis, this looks like an attempt to bypass AppLocker restrictions. AppLocker can be configured to limit which executables are allowed to run on a Windows system. The command line pattern looks like the attacker is attempting to circumvent AppLocker policy by using regsvr32.exe to execute untrusted code. On hosts where tight AppLocker executable and script rules are enforced, attackers are often seen using regsvr32 with a script file hosted on the Internet to bypass the script rules and run their malicious script.

In summary, this post shows how you can use the new Investigation and Log Search features in Azure Security Center to understand the nature and scope of a security incident.

At Microsoft Ignite, the Security Center team announced several new features to help Azure customers better understand, investigate, and manage security threats. You can read more about these features in the blog post Azure Security Center extends advanced threat protection to hybrid cloud workloads by Sarah Fender.

Security Center helps reduce the time and expertise needed to respond to security threats by automating the investigation experience for alerts and incidents.

In our next blog post we will try to address some of the other questions that you can answer using these features:

- Is an executable flagged in an alert rare in my environment?

- Does the process have an unusual parent process?

- What were the users that logged onto the machine around the time of the alert?

- Did any AV alert trigger around this time? How about a process crash?

To learn more

- See other blog posts with real-world examples of how Security Center helps detect cyberattacks.

- Learn about Security Center’s advanced detection capabilities.

- Learn how to manage and respond to security alerts in Azure Security Center.

- Find frequently asked questions about using the service.

Get the latest Azure security news and information by reading the Azure Security blog. Stay tuned as we bring you blog posts to highlight more of the investigative capabilities of Security Center.

![clip_image001[3] clip_image001[3]](http://azurecomcdn.azureedge.net/mediahandler/acomblog/media/Default/blog/0210a500-91a8-4e9c-9de2-85b33c2dc596.jpg)

![clip_image001[1] clip_image001[1]](http://azurecomcdn.azureedge.net/mediahandler/acomblog/media/Default/blog/4b0c74d3-b0b9-4e78-9efb-dc112b506dc4.jpg)