TypeScript 2.8 is here and brings a few features that we think you’ll love unconditionally!

If you’re not familiar with TypeScript, it’s a language that adds optional static types to JavaScript. Those static types help make guarantees about your code to avoid typos and other silly errors. They can also help provide nice things like code completions and easier project navigation thanks to tooling built around those types. When your code is run through the TypeScript compiler, you’re left with clean, readable, and standards-compliant JavaScript code, potentially rewritten to support much older browsers that only support ECMAScript 5 or even ECMAScript 3. To learn more about TypeScript, check out our documentation.

If you can’t wait any longer, you can download TypeScript via NuGet or by running

npm install -g typescript

You can also get editor support for

Other editors may have different update schedules, but should all have excellent TypeScript support soon as well.

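To get a quick glance at what we’re shipping in this release, we put this handy list together to navigate our blog post:

- Conditional types
- Declaration-only emit
- @jsx pragma comments
- JSX is resolved via the JSX factory
- Granular control on mapped type modifiers
- Organize imports
- Fixing uninitialized properties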

We also have some minor breaking changes that you should keep in mind if upgrading.

But otherwise, let’s look at what new features come with TypeScript 2.8!

Conditional types

Conditional types are a new construct in TypeScript that allow us to choose types based on other types. They take the form

A extends B ? C : D

where A, B, C, and D are all types. You should read that as “when the type A is assignable to B, then this type is C; otherwise, it’s D.” If you’ve used the conditional (ternary) operator in JavaScript, this will feel familiar to you.

Let’s take two specific examples:

interface Animal {
    live(): void;
}
interface Dog extends Animal {
    woof(): void;
}

// Has type 'number'
type Foo = Dog extends Animal ? number : string;

// Has type 'string'
type Bar = RegExp extends Dog ? number : string;

You might wonder why this is immediately useful. We can tell that Foo will be number, and Bar will be string, so we might as well write that out explicitly. But the real power of conditional types comes from using them with generics.

For example, let’s take the following function:

interface Id { id: number, /* other fields */ }
interface Name { name: string, /* other fields */ }

declare function createLabel(id: number): Id;
declare function createLabel(name: string): Name;
declare function createLabel(name: string | number): Id | Name;

These overloads for createLabel describe a single JavaScript function that makes a choice based on the types of its inputs. Note two things:

- If a library has to make the same sort of choice over and over throughout its API, this becomes cumbersome.

- We have to create three overloads: one for each case when we’re sure of the type, and one for the most general case. For every other case we’d have to handle, the number of overloads would grow exponentially.

Instead, we can use a conditional type to smoosh both of our overloads down to one, and create a type alias so that we can reuse that logic.

type IdOrName<T extends number | string> =
    T extends number ? Id : Name;

declare function createLabel<T extends number | string>(idOrName: T):
    IdOrName<T>;

let a = createLabel("typescript");   // Name
let b = createLabel(2.8);            // Id
let c = createLabel("" as any);      // Id | Name
let d = createLabel("" as never);    // never

Just like how JavaScript can make decisions at runtime based on the characteristics of a value, conditional types let TypeScript make decisions in the type system based on the characteristics of other types.
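The declaration leaves the implementation out. As a minimal sketch, assuming a label is a plain object holding only its id or name field, a body for the generic createLabel could look like this. Note the type assertion on the return: inside the function T is not yet known, so TypeScript cannot pick a branch of the conditional type by itself.

```typescript
// Id, Name, and IdOrName mirror the post; the function body and the
// label shapes are assumptions made for this sketch.
interface Id { id: number }
interface Name { name: string }

type IdOrName<T extends number | string> = T extends number ? Id : Name;

function createLabel<T extends number | string>(idOrName: T): IdOrName<T> {
  // typeof narrows the value at runtime, but the return type stays unresolved
  // for a generic T, so we build the label and then assert its type.
  const label: Id | Name =
    typeof idOrName === "number" ? { id: idOrName } : { name: String(idOrName) };
  return label as IdOrName<T>;
}

const byName = createLabel("typescript"); // Name
const byId = createLabel(2.8);            // Id
```

Callers still get precise types; only the implementation has to vouch for the conditional type.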

As another example, we could also write a type called Flatten that flattens array types to their element types, but leaves them alone otherwise:

// If we have an array, get the type when we index with a 'number'.
// Otherwise, leave the type alone.
type Flatten<T> = T extends any[] ? T[number] : T;
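To see Flatten describe real values, here is a small sketch with a hypothetical helper, firstOrSelf (not part of the post or of any library), whose return type is Flatten<T>.

```typescript
// Flatten as defined in the post.
type Flatten<T> = T extends any[] ? T[number] : T;

// Hypothetical helper: the first element of an array, or the value itself.
function firstOrSelf<T>(value: T): Flatten<T> {
  // Array.isArray narrows `value`, but the conditional return type is still
  // unresolved inside the body, so the result is asserted.
  return (Array.isArray(value) ? value[0] : value) as Flatten<T>;
}

const fromArray = firstOrSelf([10, 20, 30]); // number
const fromString = firstOrSelf("hello");     // string
```

For an empty array the helper returns undefined at runtime even though the type says number; Flatten describes the element type, not whether an element exists.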

Inferring within conditional types

Conditional types also let us infer types from the types we compare against, using the infer keyword, and then use them in the true branch. For example, we could have inferred the element type in Flatten instead of fetching it out manually:

// We could also have used '(infer U)[]' instead of 'Array<infer U>'
type Flatten<T> = T extends Array<infer U> ? U : T;

Here, we’ve declaratively introduced a new generic type variable named U instead of specifying how to retrieve the element type of T. This frees us from having to think about how to get the types we’re interested in.
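The comment above mentions an equivalent spelling. This sketch writes out all three forms and checks at compile time that they agree, using a hypothetical IsSame helper that resolves to true only when two types are mutually assignable. The last check shows where infer pays off: Promise has no index to read the resolved type from, but a pattern match can pull it out (UnwrapPromise is a name made up for this example).

```typescript
// The Flatten alias from the post, spelled three equivalent ways.
type FlattenIndexed<T> = T extends any[] ? T[number] : T;
type FlattenInferred<T> = T extends Array<infer U> ? U : T;
type FlattenParenthesized<T> = T extends (infer U)[] ? U : T;

// Hypothetical helper: `true` only when A and B are mutually assignable,
// so each `= true` below fails to compile if the expectation is wrong.
type IsSame<A, B> = [A] extends [B] ? ([B] extends [A] ? true : false) : false;

const indexedOk: IsSame<FlattenIndexed<string[]>, string> = true;
const inferredOk: IsSame<FlattenInferred<string[]>, string> = true;
const parenthesizedOk: IsSame<FlattenParenthesized<boolean[]>, boolean> = true;
const untouchedOk: IsSame<FlattenInferred<number>, number> = true;

// Hypothetical alias: no index type gets at a Promise's value, but infer can.
type UnwrapPromise<T> = T extends Promise<infer U> ? U : T;
const promiseOk: IsSame<UnwrapPromise<Promise<number>>, number> = true;
```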

Distributing on unions with conditionals

When the type being checked in a conditional type is a naked type parameter, the conditional type distributes across unions. So in the following example, Bar has the type string[] | number[] because Foo is applied to each member of the union type string | number.

type Foo<T> = T extends any ? T[] : never;

/**
 * Foo distributes on 'string | number' to the type
 *
 *    (string extends any ? string[] : never) |
 *    (number extends any ? number[] : never)
 *
 * which boils down to
 *
 *    string[] | number[]
 */
type Bar = Foo<string | number>;

In case you ever need to avoid distributing on unions, you can surround each side of the extends keyword with square brackets:

type Foo<T> = [T] extends [any] ? T[] : never;

// Boils down to Array<string | number>
type Bar = Foo<string | number>;
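A quick way to feel the difference between the two forms is to assign arrays to them. This sketch reuses both shapes of Foo under the hypothetical names ToArray and ToArrayWhole, plus a compile-time SameType check (also hypothetical).

```typescript
// The two forms of Foo from the post, under hypothetical names.
type ToArray<T> = T extends any ? T[] : never;          // distributes
type ToArrayWhole<T> = [T] extends [any] ? T[] : never; // does not distribute

// string[] | number[]: each array holds a single kind of element.
const onlyStrings: ToArray<string | number> = ["a", "b"];
const onlyNumbers: ToArray<string | number> = [1, 2];

// (string | number)[]: elements may be mixed.
const mixed: ToArrayWhole<string | number> = ["a", 1];

// Rejected, because ["a", 1] is neither a string[] nor a number[]:
// const rejected: ToArray<string | number> = ["a", 1];

// Hypothetical compile-time check, true only for mutually assignable types.
type SameType<A, B> = [A] extends [B] ? ([B] extends [A] ? true : false) : false;

// Distributing over `never` (the empty union) yields `never`.
const neverOk: SameType<ToArray<never>, never> = true;
```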

While conditional types can be a little intimidating at first, we believe they’ll bring a ton of flexibility for moments when you need to push the type system a little further to get accurate types.

New built-in helpers

TypeScript 2.8 provides several new type aliases in lib.d.ts that take advantage of conditional types:

// These are all now built into lib.d.ts!

/**
 * Exclude from T those types that are assignable to U
 */
type Exclude<T, U> = T extends U ? never : T;

/**
 * Extract from T those types that are assignable to U
 */
type Extract<T, U> = T extends U ? T : never;

/**
 * Exclude null and undefined from T
 */
type NonNullable<T> = T extends null | undefined ? never : T;

/**
 * Obtain the return type of a function type
 */
type ReturnType<T extends (...args: any[]) => any> = T extends (...args: any[]) => infer R ? R : any;

/**
 * Obtain the return type of a constructor function type
 */
type InstanceType<T extends new (...args: any[]) => any> = T extends new (...args: any[]) => infer R ? R : any;
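As a quick illustration of NonNullable, ReturnType, and InstanceType, here is a sketch over a few made-up declarations (makeCoordinate, TallyCounter, and isPresent are illustrative names, not library APIs). Since these helpers ship in lib.d.ts, the code uses them directly rather than redefining them.

```typescript
// Illustrative declarations, not from the post.
function makeCoordinate(x: number, y: number) {
  return { x, y };
}

class TallyCounter {
  count = 0;
  increment(): number {
    return ++this.count;
  }
}

// { x: number; y: number }, read off the function's inferred return type.
type Coordinate = ReturnType<typeof makeCoordinate>;

// TallyCounter, the type produced by `new TallyCounter()`.
type CounterInstance = InstanceType<typeof TallyCounter>;

// A type guard built on NonNullable: keeps only non-null, non-undefined values.
function isPresent<T>(value: T): value is NonNullable<T> {
  return value !== null && value !== undefined;
}

const start: Coordinate = makeCoordinate(0, 0);
const tally: CounterInstance = new TallyCounter();
tally.increment();

const readings = [3, null, 7, undefined, 11];
const present: number[] = readings.filter(isPresent);
```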

While NonNullable, ReturnType, and InstanceType are relatively self-explanatory, Exclude and Extract are a bit more interesting.

Extract selects types from its first argument that are assignable to its second argument:

// string[] | number[]
type Foo = Extract<boolean | string[] | number[], any[]>;

Exclude does the opposite; it removes types from its first argument that are assignable to its second:

// boolean
type Bar = Exclude<boolean | string[] | number[], any[]>;
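Extract and Exclude are especially handy with unions of string literal types. A minimal sketch, assuming a hypothetical AppEvent union, that splits the union in two at the type level and uses matching type guards to split an array at runtime:

```typescript
// A hypothetical union of event names, used only for illustration.
type AppEvent = "click" | "keydown" | "keyup" | "scroll";

// "keydown" | "keyup": only members of AppEvent survive, so the extra
// "keypress" in the second argument has no effect.
type KeyEvent = Extract<AppEvent, "keydown" | "keyup" | "keypress">;

// "click" | "scroll": the members of AppEvent NOT assignable to KeyEvent.
type OtherEvent = Exclude<AppEvent, KeyEvent>;

// Runtime type guards that mirror the two type-level splits.
function isKeyEvent(evt: AppEvent): evt is KeyEvent {
  return evt === "keydown" || evt === "keyup";
}

function isOtherEvent(evt: AppEvent): evt is OtherEvent {
  return !isKeyEvent(evt);
}

const allEvents: AppEvent[] = ["click", "keydown", "scroll", "keyup"];
const keyEvents: KeyEvent[] = allEvents.filter(isKeyEvent);
const otherEvents: OtherEvent[] = allEvents.filter(isOtherEvent);
```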

Declaration-only emit

Thanks to a pull request from Manoj Patel, TypeScript now features an --emitDeclarationOnly flag which can be used for cases when you have an alternative build step for emitting JavaScript files, but need to emit declaration files separately. Under this mode, neither JavaScript files nor source map files are generated; only .d.ts files that library consumers can use.

One use-case for this is when using alternate compilers for TypeScript such as Babel 7. For an example of repositories taking advantage of this flag, check out urql from Formidable Labs, or take a look at our Babel starter repo.

@jsx pragma comments

Typically, users of JSX expect to have their JSX tags rewritten to React.createElement. However, if you’re using libraries that have a React-like factory API, such as Preact, Stencil, Inferno, Cycle, and others, you might want to tweak that emit slightly.

Previously, TypeScript only allowed users to control the emit for JSX at a global level using the jsxFactory option (as well as the deprecated reactNamespace option). However, if you needed to mix any of these libraries in the same application, you’d have been out of luck using JSX for both.

Luckily, TypeScript 2.8 now allows you to set your JSX factory on a file-by-file basis by adding an // @jsx comment at the top of your file. If you’ve used the same functionality in Babel, this should look slightly familiar.

/** @jsx dom */
import { dom } from "./renderer";

<h></h>

The above sample imports a function named dom, and uses the jsx pragma to select dom as the factory for all JSX expressions in the file. TypeScript 2.8 will rewrite it to the following when compiling to CommonJS and ES5:

var renderer_1 = require("./renderer");
renderer_1.dom("h", null);
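The emitted call passes the tag name, then the props object (null when there are no attributes), then any children. A factory only has to accept that shape; here is a sketch of a hypothetical dom function (the VNode shape is also an assumption) that simply records each call as a plain object.

```typescript
// Hypothetical virtual-node shape; a real renderer would define its own.
interface VNode {
  tag: string;
  props: Record<string, unknown>;
  children: Array<VNode | string>;
}

// A factory compatible with the calls TypeScript emits for JSX:
// factory(tagName, propsOrNull, ...children).
function dom(
  tag: string,
  props: Record<string, unknown> | null,
  ...children: Array<VNode | string>
): VNode {
  return { tag, props: props || {}, children };
}

// What the compiled `<h></h>` from the post calls.
const emptyHeading = dom("h", null);

// Something like <ul class="list"><li>one</li></ul> compiles to nested calls
// along these lines.
const listNode = dom("ul", { class: "list" }, dom("li", null, "one"));
```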

JSX is resolved via the JSX factory

Previously, when TypeScript type-checked JSX, it consulted a global JSX namespace to look up certain types (e.g. “what’s the type of a JSX component?”). In TypeScript 2.8, the compiler will try to look up the JSX namespace based on the location of your JSX factory. For example, if your JSX factory is React.createElement, TypeScript will first try to resolve React.JSX, and then resolve JSX from within the current scope.

This can be helpful when mixing and matching different libraries (e.g. React and Preact) or different versions of a specific library (e.g. React 0.14 and React 16), as placing the JSX namespace in the global scope can cause issues.

Going forward, we recommend that new JSX-oriented libraries avoid placing JSX in the global scope, and instead export it from the same location as the respective factory function. However, for backward compatibility, TypeScript will continue falling back to the global scope when necessary.
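For a library author, one way to export JSX next to the factory is declaration merging: a namespace merged into the factory function carries the JSX types. The sketch below assumes a hypothetical factory named h and deliberately loose element types; as we understand the 2.8 lookup, a file using the pragma /** @jsx h */ would then resolve h.JSX before falling back to a global JSX namespace.

```typescript
// Hypothetical element shape for this sketch.
interface VElement {
  tag: string;
  props: { [key: string]: unknown };
  children: Array<VElement | string>;
}

// The factory a file would select with the pragma /** @jsx h */.
function h(
  tag: string,
  props: { [key: string]: unknown } | null,
  ...children: Array<VElement | string>
): VElement {
  return { tag, props: props || {}, children };
}

// A types-only namespace merged into the factory, so `h.JSX` exists
// without anything being placed in the global scope.
namespace h {
  export namespace JSX {
    // The type of every JSX expression in files that use this factory.
    export interface Element extends VElement {}
    // Accept any intrinsic (lowercase) tag with any attributes; deliberately loose.
    export interface IntrinsicElements {
      [tagName: string]: { [attribute: string]: unknown };
    }
  }
}

// Outside .tsx files the merged namespace still works as an ordinary type.
const paragraph: h.JSX.Element = h("p", null, "hello");
```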

Granular control on mapped type modifiers

TypeScript’s mapped object types are an incredibly powerful construct. One handy feature is that they allow users to create new types that have modifiers set for all their properties. For example, the following type creates a new type from T in which every property becomes both readonly and optional (?).

// Creates a type with all the properties in T,
// but marked both readonly and optional.
type ReadonlyAndPartial<T> = {
    readonly [P in keyof T]?: T[P]
}

So mapped object types can add modifiers, but up until this point, there was no way to remove modifiers from T.

TypeScript 2.8 provides a new syntax for removing modifiers in mapped types with the - operator, and a new, more explicit syntax for adding modifiers with the + operator. For example:

type Mutable<T> = {
    -readonly [P in keyof T]: T[P]
}

interface Foo {
    readonly abc: number;
    def?: string;
}

// 'abc' is no longer read-only, but 'def' is still optional.
type TotallyMutableFoo = Mutable<Foo>

In the above, Mutable removes readonly from each property of the type that it maps over.
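Here is a small sketch of Mutable at work, using a hypothetical FrozenSettings interface. Keep in mind that readonly is a compile-time check only; it is the Mutable<...> annotation on the copy that lets us write to the property without an error.

```typescript
// Mutable, as defined in the post: strip `readonly` from every property.
type Mutable<T> = {
  -readonly [P in keyof T]: T[P];
};

// Hypothetical interface for illustration.
interface FrozenSettings {
  readonly retries: number;
  label?: string;
}

const frozen: FrozenSettings = { retries: 1 };
// frozen.retries = 2; // error: cannot assign to 'retries' because it is a read-only property

// A shallow copy typed as Mutable<FrozenSettings>: 'retries' is writable,
// while 'label' is still optional.
const editable: Mutable<FrozenSettings> = { ...frozen };
editable.retries = 3;
```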

Similarly, TypeScript now provides a new Required type in lib.d.ts that removes optionality from each property:

/**
 * Make all properties in T required
 */
type Required<T> = {
    [P in keyof T]-?: T[P];
}
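Required fits well with functions that fill in defaults. A minimal sketch, with hypothetical RetryOptions and withDefaults names and made-up default values; since Required is built into lib.d.ts, it is used directly.

```typescript
// Hypothetical options object where every field is optional for callers.
interface RetryOptions {
  attempts?: number;
  delayMs?: number;
}

// Required<RetryOptions> has the same properties with '?' removed,
// so the function must supply every field in its result.
function withDefaults(options: RetryOptions): Required<RetryOptions> {
  return {
    attempts: options.attempts !== undefined ? options.attempts : 3,
    delayMs: options.delayMs !== undefined ? options.delayMs : 100,
  };
}

// resolved.delayMs is typed as number, not number | undefined.
const resolved = withDefaults({ attempts: 5 });
```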

The + operator can be handy when you want to call out that a mapped type is adding modifiers. For example, our ReadonlyAndPartial from above could be defined as follows:

type ReadonlyAndPartial<T> = {
    +readonly [P in keyof T]+?: T[P];
}
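The + and - forms can also be combined in a single mapped type. This sketch, with a made-up Draft interface and a hypothetical Concrete alias, adds both modifiers with + and removes both with -. Note that -? also drops undefined from the types of previously optional properties.

```typescript
// '+' spells out the modifiers being added; it behaves like the bare form.
type ReadonlyAndPartial<T> = {
  +readonly [P in keyof T]+?: T[P];
};

// Hypothetical alias: remove readonly and optionality at the same time.
type Concrete<T> = {
  -readonly [P in keyof T]-?: T[P];
};

interface Draft {
  readonly title?: string;
  pages?: number;
}

// Every field may be omitted.
const sketch: ReadonlyAndPartial<Draft> = {};

// Every field is required (title: string, pages: number) and writable.
const finished: Concrete<Draft> = { title: "Notes", pages: 12 };
finished.title = "Final notes";
```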

Organize imports

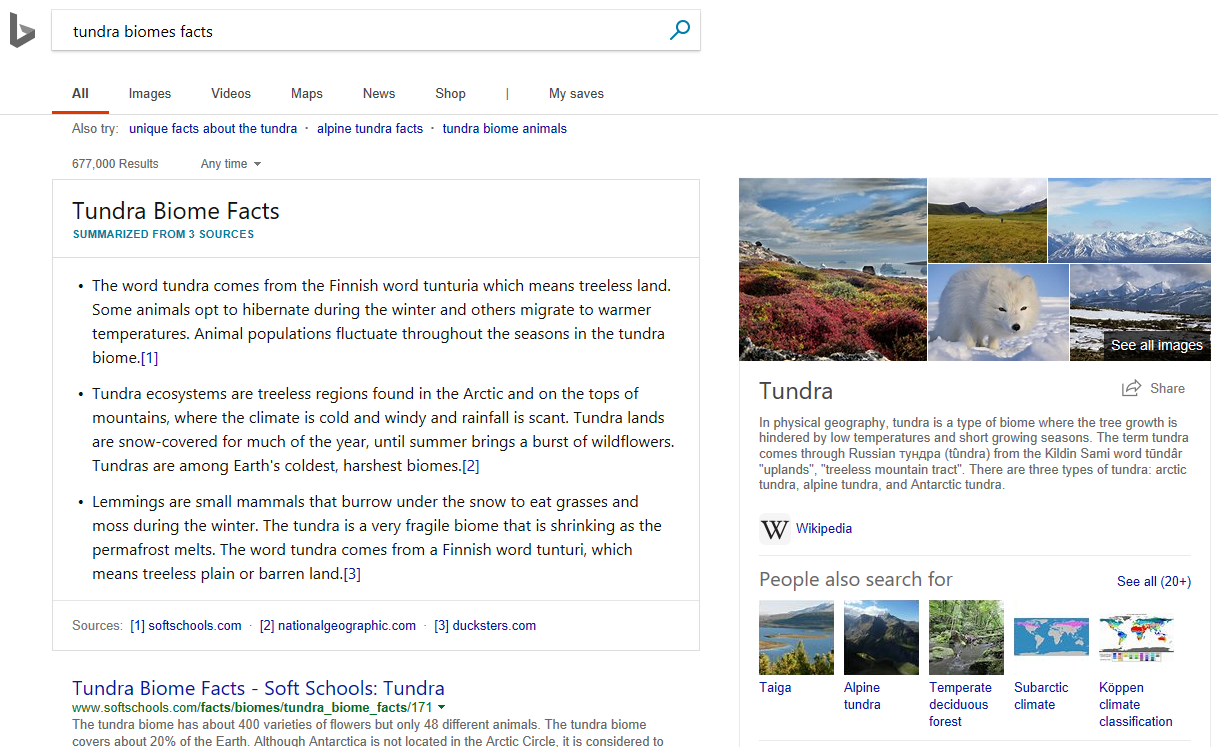

TypeScript’s language service now provides functionality to organize imports. This feature will remove any unused imports, sort existing imports by file paths, and sort named imports as well.


Fixing uninitialized properties

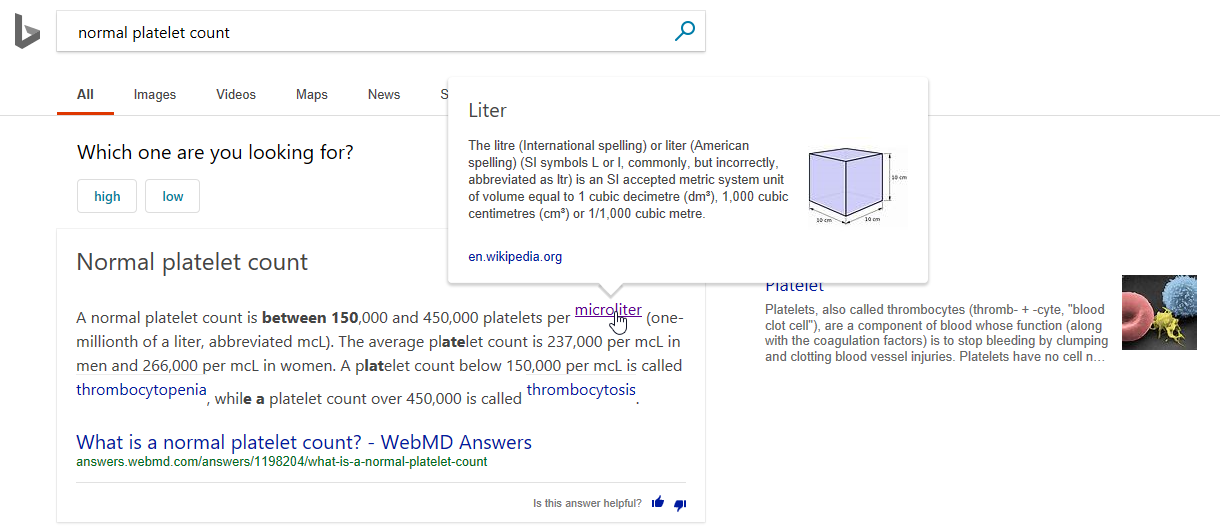

TypeScript 2.7 introduced extra checking for uninitialized properties in classes. Thanks to a pull request by Wenlu Wang, TypeScript 2.8 brings some helpful quick fixes that make it easier to adopt this check in an existing codebase.
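For reference, the check can be satisfied in a few ways. This sketch shows three common ones, roughly the kinds of edits these quick fixes make; the class names are illustrative, and the exact fix titles depend on your editor.

```typescript
// With --strict (or --strictNullChecks plus --strictPropertyInitialization),
// this class would be rejected because 'title' is never assigned:
//
//   class Untitled { title: string; }   // error TS2564
//
// Three common ways to satisfy the check:

// 1. Give the property an initializer.
class WithInitializer {
  title: string = "untitled";
}

// 2. Admit in the type that the property may be undefined.
class WithOptionalValue {
  title: string | undefined;
}

// 3. Use a definite assignment assertion ('!') when code outside the
//    constructor is guaranteed to assign the property before it is read.
class WithDefiniteAssignment {
  title!: string;
  load(value: string): void {
    this.title = value;
  }
}

const loaded = new WithDefiniteAssignment();
loaded.load("ready");
```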


Breaking changes

Unused type parameters are checked under --noUnusedParameters

Unused type parameters were previously reported under --noUnusedLocals, but are now instead reported under --noUnusedParameters.

HTMLObjectElement no longer has an alt attribute

The alt attribute on object elements is not covered by the WHATWG standard, so it is no longer declared on HTMLObjectElement.

What’s next?

We hope that TypeScript 2.8 pushes the envelope further to provide a type system that can truly represent the nature of JavaScript as a language. With that, we believe we can provide you with an experience that continues to make you more productive and happier as you code.

Over the next few weeks, we’ll have a clearer picture of what’s in store for TypeScript 2.9, but as always, you can keep an eye on the TypeScript roadmap to see what we’re working on for our next release. You can also use our nightly releases to try out the future today! For example, generic JSX elements are already available in TypeScript’s recent nightly releases!

Let us know what you think of this release over on Twitter or in the comments below, and feel free to report issues and suggestions by filing a GitHub issue.

Happy Hacking!