Today, we released a new Windows 10 Preview Build of the SDK to be used in conjunction with Windows 10 Insider Preview (Build 17672 or greater). The Preview SDK Build 17672 contains bug fixes and under-development changes to the API surface area.

The Preview SDK can be downloaded from the developer section on Windows Insider.

For feedback and updates to the known issues, please see the developer forum. For new developer feature requests, head over to our Windows Platform UserVoice.

Things to note:

- This build works in conjunction with previously released SDKs and Visual Studio 2017. You can install this SDK and still continue to submit your apps that target the Windows 10 Creators build or earlier to the Store.

- The Windows SDK will now formally only be supported by Visual Studio 2017 and greater. You can download Visual Studio 2017 here.

- This build of the Windows SDK will install on Windows 10 Insider Preview and supported Windows operating systems.

Known Issues

Windows Device Portal

Please note that there is a known issue in this Windows Insider build that prevents the user from enabling Developer Mode through the “For developers” settings page.

Unfortunately, this means that you will not be able to remotely deploy a UWP application to your PC or use Windows Device Portal on this build. There are no known workarounds at the moment. Please skip this flight if you rely on these features.

Missing Contract File

The contract Windows.System.SystemManagementContract is not included in this release. In order to access the following APIs, please use a previous Windows IoT extension SDK with your project.

This bug will be fixed in a future preview build of the SDK.

The following APIs are affected by this bug:

namespace Windows.Services.Cortana {

public sealed class CortanaSettings

}

namespace Windows.System {

public enum AutoUpdateTimeZoneStatus

public static class DateTimeSettings

public enum PowerState

public static class ProcessLauncher

public sealed class ProcessLauncherOptions

public sealed class ProcessLauncherResult

public enum ShutdownKind

public static class ShutdownManager

public struct SystemManagementContract

public static class TimeZoneSettings

}

API Spotlight:

Check out LauncherOptions.GroupingPreference.

namespace Windows.System {

public sealed class FolderLauncherOptions : ILauncherViewOptions {

ViewGrouping GroupingPreference { get; set; }

}

public sealed class LauncherOptions : ILauncherViewOptions {

ViewGrouping GroupingPreference { get; set; }

}

}

This release contains the new LauncherOptions.GroupingPreference property to assist your app in tailoring its behavior for Sets. Watch the presentation here.

What’s New:

MC.EXE

We’ve made some important changes to the C/C++ ETW code generation of mc.exe (Message Compiler):

- The “-mof” parameter is deprecated. This parameter instructs MC.exe to generate ETW code that is compatible with Windows XP and earlier. Support for the “-mof” parameter will be removed in a future version of mc.exe.

- As long as the “-mof” parameter is not used, the generated C/C++ header is now compatible with both kernel-mode and user-mode, regardless of whether “-km” or “-um” was specified on the command line. The header will use the _ETW_KM_ macro to automatically determine whether it is being compiled for kernel-mode or user-mode and will call the appropriate ETW APIs for each mode.

  - The only remaining difference between “-km” and “-um” is that the EventWrite[EventName] macros generated with “-km” have an Activity ID parameter while the EventWrite[EventName] macros generated with “-um” do not have an Activity ID parameter.

- The EventWrite[EventName] macros now default to calling EventWriteTransfer (user mode) or EtwWriteTransfer (kernel mode). Previously, the EventWrite[EventName] macros defaulted to calling EventWrite (user mode) or EtwWrite (kernel mode).

- The generated header now supports several customization macros. For example, you can set the MCGEN_EVENTWRITETRANSFER macro if you need the generated macros to call something other than EventWriteTransfer.

- The manifest supports new attributes.

  - Event “name”: non-localized event name.

  - Event “attributes”: additional key-value metadata for an event such as filename, line number, component name, function name.

  - Event “tags”: 28-bit value with user-defined semantics (per-event).

  - Field “tags”: 28-bit value with user-defined semantics (per-field – can be applied to “data” or “struct” elements).

- You can now define “provider traits” in the manifest (e.g. provider group). If provider traits are used in the manifest, the EventRegister[ProviderName] macro will automatically register them.

- MC will now report an error if a localized message file is missing a string. (Previously MC would silently generate a corrupt message resource.)

- MC can now generate Unicode (utf-8 or utf-16) output with the “-cp utf-8” or “-cp utf-16” parameters.

API Updates and Additions

When targeting new APIs, consider writing your app to be adaptive in order to run correctly on the widest number of Windows 10 devices. Please see Dynamically detecting features with API contracts (10 by 10) for more information.

The following APIs have been added to the platform since the release of build 17134.

namespace Windows.ApplicationModel {

public sealed class AppInstallerFileInfo

public sealed class LimitedAccessFeatureRequestResult

public static class LimitedAccessFeatures

public enum LimitedAccessFeatureStatus

public sealed class Package {

IAsyncOperation<PackageUpdateAvailabilityResult> CheckUpdateAvailabilityAsync();

AppInstallerFileInfo GetAppInstallerFileInfo();

}

public enum PackageUpdateAvailability

public sealed class PackageUpdateAvailabilityResult

}

namespace Windows.ApplicationModel.Calls {

public sealed class VoipCallCoordinator {

IAsyncOperation<VoipPhoneCallResourceReservationStatus> ReserveCallResourcesAsync();

}

}

namespace Windows.ApplicationModel.Store.Preview.InstallControl {

public enum AppInstallationToastNotificationMode

public sealed class AppInstallItem {

AppInstallationToastNotificationMode CompletedInstallToastNotificationMode { get; set; }

AppInstallationToastNotificationMode InstallInProgressToastNotificationMode { get; set; }

bool PinToDesktopAfterInstall { get; set; }

bool PinToStartAfterInstall { get; set; }

bool PinToTaskbarAfterInstall { get; set; }

}

public sealed class AppInstallManager {

bool CanInstallForAllUsers { get; }

}

public sealed class AppInstallOptions {

AppInstallationToastNotificationMode CompletedInstallToastNotificationMode { get; set; }

bool InstallForAllUsers { get; set; }

AppInstallationToastNotificationMode InstallInProgressToastNotificationMode { get; set; }

bool PinToDesktopAfterInstall { get; set; }

bool PinToStartAfterInstall { get; set; }

bool PinToTaskbarAfterInstall { get; set; }

bool StageButDoNotInstall { get; set; }

}

public sealed class AppUpdateOptions {

bool AutomaticallyDownloadAndInstallUpdateIfFound { get; set; }

}

}

namespace Windows.Devices.Enumeration {

public sealed class DeviceInformationPairing {

public static bool TryRegisterForAllInboundPairingRequestsWithProtectionLevel(DevicePairingKinds pairingKindsSupported, DevicePairingProtectionLevel minProtectionLevel);

}

}

namespace Windows.Devices.Lights {

public sealed class LampArray

public enum LampArrayKind

public sealed class LampInfo

public enum LampPurpose : uint

}

namespace Windows.Devices.Sensors {

public sealed class SimpleOrientationSensor {

public static IAsyncOperation<SimpleOrientationSensor> FromIdAsync(string deviceId);

public static string GetDeviceSelector();

}

}

namespace Windows.Devices.SmartCards {

public static class KnownSmartCardAppletIds

public sealed class SmartCardAppletIdGroup {

string Description { get; set; }

IRandomAccessStreamReference Logo { get; set; }

ValueSet Properties { get; }

bool SecureUserAuthenticationRequired { get; set; }

}

public sealed class SmartCardAppletIdGroupRegistration {

string SmartCardReaderId { get; }

IAsyncAction SetPropertiesAsync(ValueSet props);

}

}

namespace Windows.Devices.WiFi {

public enum WiFiPhyKind {

He = 10,

}

}

namespace Windows.Graphics.Capture {

public sealed class GraphicsCaptureItem {

public static GraphicsCaptureItem CreateFromVisual(Visual visual);

}

}

namespace Windows.Graphics.Imaging {

public sealed class BitmapDecoder : IBitmapFrame, IBitmapFrameWithSoftwareBitmap {

public static Guid HeifDecoderId { get; }

public static Guid WebpDecoderId { get; }

}

public sealed class BitmapEncoder {

public static Guid HeifEncoderId { get; }

}

}

namespace Windows.Media.Core {

public sealed class MediaStreamSample {

IDirect3DSurface Direct3D11Surface { get; }

public static MediaStreamSample CreateFromDirect3D11Surface(IDirect3DSurface surface, TimeSpan timestamp);

}

}

namespace Windows.Media.Devices.Core {

public sealed class CameraIntrinsics {

public CameraIntrinsics(Vector2 focalLength, Vector2 principalPoint, Vector3 radialDistortion, Vector2 tangentialDistortion, uint imageWidth, uint imageHeight);

}

}

namespace Windows.Media.MediaProperties {

public sealed class ImageEncodingProperties : IMediaEncodingProperties {

public static ImageEncodingProperties CreateHeif();

}

public static class MediaEncodingSubtypes {

public static string Heif { get; }

}

}

namespace Windows.Media.Streaming.Adaptive {

public enum AdaptiveMediaSourceResourceType {

MediaSegmentIndex = 5,

}

}

namespace Windows.Security.Authentication.Web.Provider {

public sealed class WebAccountProviderInvalidateCacheOperation : IWebAccountProviderBaseReportOperation, IWebAccountProviderOperation

public enum WebAccountProviderOperationKind {

InvalidateCache = 7,

}

public sealed class WebProviderTokenRequest {

string Id { get; }

}

}

namespace Windows.Security.DataProtection {

public enum UserDataAvailability

public sealed class UserDataAvailabilityStateChangedEventArgs

public sealed class UserDataBufferUnprotectResult

public enum UserDataBufferUnprotectStatus

public sealed class UserDataProtectionManager

public sealed class UserDataStorageItemProtectionInfo

public enum UserDataStorageItemProtectionStatus

}

namespace Windows.Services.Cortana {

public sealed class CortanaActionableInsights

public sealed class CortanaActionableInsightsOptions

}

namespace Windows.Services.Store {

public sealed class StoreContext {

IAsyncOperation<StoreRateAndReviewResult> RequestRateAndReviewAppAsync();

}

public sealed class StoreRateAndReviewResult

public enum StoreRateAndReviewStatus

}

namespace Windows.Storage.Provider {

public enum StorageProviderHydrationPolicyModifier : uint {

AutoDehydrationAllowed = (uint)4,

}

}

namespace Windows.System {

public sealed class FolderLauncherOptions : ILauncherViewOptions {

ViewGrouping GroupingPreference { get; set; }

}

public sealed class LauncherOptions : ILauncherViewOptions {

ViewGrouping GroupingPreference { get; set; }

}

}

namespace Windows.System.UserProfile {

public sealed class AssignedAccessSettings

}

namespace Windows.UI.Composition {

public sealed class AnimatablePropertyInfo : CompositionObject

public enum AnimationPropertyAccessMode

public enum AnimationPropertyType

public class CompositionAnimation : CompositionObject, ICompositionAnimationBase {

void SetAnimatableReferenceParameter(string parameterName, IAnimatable source);

}

public enum CompositionBatchTypes : uint {

AllAnimations = (uint)5,

InfiniteAnimation = (uint)4,

}

public sealed class CompositionGeometricClip : CompositionClip

public class CompositionObject : IAnimatable, IClosable {

void GetPropertyInfo(string propertyName, AnimatablePropertyInfo propertyInfo);

}

public sealed class Compositor : IClosable {

CompositionGeometricClip CreateGeometricClip();

}

public interface IAnimatable

}

namespace Windows.UI.Composition.Interactions {

public sealed class InteractionTracker : CompositionObject {

IReference<float> PositionDefaultAnimationDurationInSeconds { get; set; }

IReference<float> ScaleDefaultAnimationDurationInSeconds { get; set; }

int TryUpdatePositionWithDefaultAnimation(Vector3 value);

int TryUpdateScaleWithDefaultAnimation(float value, Vector3 centerPoint);

}

}

namespace Windows.UI.Notifications {

public sealed class ScheduledToastNotification {

public ScheduledToastNotification(DateTime deliveryTime);

IAdaptiveCard AdaptiveCard { get; set; }

}

public sealed class ToastNotification {

public ToastNotification();

IAdaptiveCard AdaptiveCard { get; set; }

}

}

namespace Windows.UI.Shell {

public sealed class TaskbarManager {

IAsyncOperation<bool> IsSecondaryTilePinnedAsync(string tileId);

IAsyncOperation<bool> RequestPinSecondaryTileAsync(SecondaryTile secondaryTile);

IAsyncOperation<bool> TryUnpinSecondaryTileAsync(string tileId);

}

}

namespace Windows.UI.StartScreen {

public sealed class StartScreenManager {

IAsyncOperation<bool> ContainsSecondaryTileAsync(string tileId);

IAsyncOperation<bool> TryRemoveSecondaryTileAsync(string tileId);

}

}

namespace Windows.UI.ViewManagement {

public sealed class ApplicationView {

bool IsTabGroupingSupported { get; }

}

public sealed class ApplicationViewTitleBar {

void SetActiveIconStreamAsync(RandomAccessStreamReference activeIcon);

}

public enum ApplicationViewWindowingMode {

CompactOverlay = 3,

Maximized = 4,

}

public enum ViewGrouping

public sealed class ViewModePreferences {

ViewGrouping GroupingPreference { get; set; }

}

}

namespace Windows.UI.ViewManagement.Core {

public sealed class CoreInputView {

bool TryHide();

bool TryShow();

bool TryShow(CoreInputViewKind type);

}

public enum CoreInputViewKind

}

namespace Windows.UI.Xaml.Controls {

public class NavigationView : ContentControl {

bool IsTopNavigationForcedHidden { get; set; }

NavigationViewOrientation Orientation { get; set; }

UIElement TopNavigationContentOverlayArea { get; set; }

UIElement TopNavigationLeftHeader { get; set; }

UIElement TopNavigationMiddleHeader { get; set; }

UIElement TopNavigationRightHeader { get; set; }

}

public enum NavigationViewOrientation

public sealed class PasswordBox : Control {

bool CanPasteClipboardContent { get; }

public static DependencyProperty CanPasteClipboardContentProperty { get; }

void PasteFromClipboard();

}

public class RichEditBox : Control {

RichEditTextDocument RichEditDocument { get; }

}

public sealed class RichTextBlock : FrameworkElement {

void CopySelectionToClipboard();

}

public class SplitButton : ContentControl

public sealed class SplitButtonClickEventArgs

public enum SplitButtonOrientation

public sealed class TextBlock : FrameworkElement {

void CopySelectionToClipboard();

}

public class TextBox : Control {

bool CanPasteClipboardContent { get; }

public static DependencyProperty CanPasteClipboardContentProperty { get; }

bool CanRedo { get; }

public static DependencyProperty CanRedoProperty { get; }

bool CanUndo { get; }

public static DependencyProperty CanUndoProperty { get; }

void CopySelectionToClipboard();

void CutSelectionToClipboard();

void PasteFromClipboard();

void Redo();

void Undo();

}

public sealed class WebView : FrameworkElement {

event TypedEventHandler<WebView, WebViewWebResourceRequestedEventArgs> WebResourceRequested;

}

public sealed class WebViewWebResourceRequestedEventArgs

}

namespace Windows.UI.Xaml.Controls.Primitives {

public class FlyoutBase : DependencyObject {

FlyoutShowMode ShowMode { get; set; }

public static DependencyProperty ShowModeProperty { get; }

public static DependencyProperty TargetProperty { get; }

void Show(FlyoutShowOptions showOptions);

}

public enum FlyoutPlacementMode {

BottomLeftJustified = 7,

BottomRightJustified = 8,

LeftBottomJustified = 10,

LeftTopJustified = 9,

RightBottomJustified = 12,

RightTopJustified = 11,

TopLeftJustified = 5,

TopRightJustified = 6,

}

public enum FlyoutShowMode

public sealed class FlyoutShowOptions : DependencyObject

}

namespace Windows.UI.Xaml.Hosting {

public sealed class XamlBridge : IClosable

}

namespace Windows.UI.Xaml.Markup {

public sealed class FullXamlMetadataProviderAttribute : Attribute

}

namespace Windows.Web.UI.Interop {

public sealed class WebViewControl : IWebViewControl {

event TypedEventHandler<WebViewControl, object> GotFocus;

event TypedEventHandler<WebViewControl, object> LostFocus;

}

public sealed class WebViewControlProcess {

string Partition { get; }

}

public sealed class WebViewControlProcessOptions {

string Partition { get; set; }

}

}

The post Windows 10 SDK Preview Build 17672 available now! appeared first on Windows Developer Blog.

Buckle up kids, this is nuts and I'm probably doing it wrong. ;) And it's 2am and I wrote this fast. I'll come back tomorrow and fix the spelling.

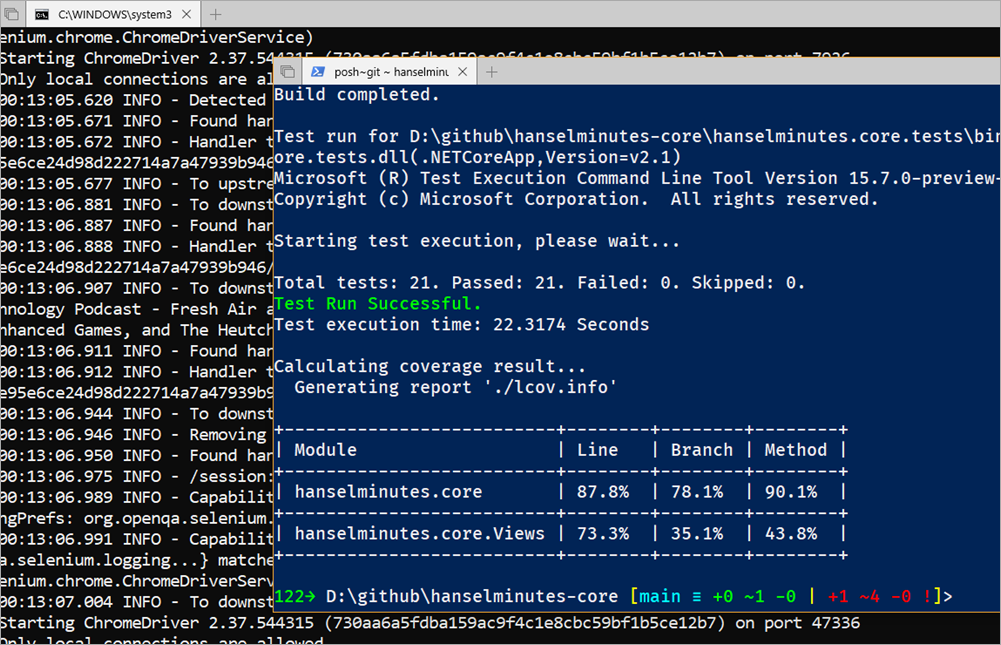


Selenium, to be clear, puts your browser on a puppet's strings. Even Chrome knows it's being controlled! It's using the (soon-to-be standard, but clearly de facto standard) WebDriver protocol. Imagine if your browser had a localhost REST protocol where you could interrogate it and click stuff! I've been using Selenium for over 11 years. You can even


London Midland trains are an important part of our customers’ everyday lives. Passengers rely on our service for their essential journeys; ensuring our trains run on time means we keep our passengers’ days running smoothly. This commitment to great service led us to invest in Office 365 and Surface Hub. We’re using this technology to keep our digital transformation on track—helping to empower employees to reduce downtime, connect better with customers, and ensure safe and reliable operations.
