ASP.NET Core OData now Available

Introduction

The Microsoft OData Team is proud to announce the general availability (GA) of OData (Open Data Protocol) on ASP.NET Core 2.0. It is available as a NuGet package at https://www.nuget.org/packages/Microsoft.AspNetCore.OData, and the current version is 7.0.0.

With this release, customers can create OData v4.0 endpoints and easily use the OData query syntax on multiple platforms, not only on Windows.

This post is a tutorial on how to build and consume an OData service using the ASP.NET Core OData package. Let’s get started.

ASP.NET Core Web Application

For simplicity, we will start by creating a simple ASP.NET Core Web Application called BookStore.

Create Visual Studio Project

In Visual Studio 2017, from the “File” menu, select “New > Project…”.

In the “New Project” dialog, select “.NET Core”, pick the “ASP.NET Core Web Application” template, name it “BookStore”, and set the location, as shown in the following picture:

Click “OK”. Then, in the “New ASP.NET Core Web Application – BookStore” dialog, select “API” and uncheck “Configure for HTTPS” for simplicity, as shown below:

Click “OK” to create an empty ASP.NET Core Web Application project.

Install the NuGet Package

Once the empty application has been created, the first step is to install the ASP.NET Core OData NuGet package from NuGet.org. In Solution Explorer, right-click “Dependencies” in the BookStore project and select “Manage NuGet Packages” to open the NuGet package management dialog. In this dialog, search for and select the “Microsoft.AspNetCore.OData” package, then click the Install button to install it into the web application. See the picture below:

EF Core is also used in this tutorial, so repeat the process to install “Microsoft.EntityFrameworkCore.InMemory” and its dependencies (for simplicity, we use an in-memory data source).

Now, we have the following project configuration:

Add the Model classes

A model is an object representing the data in the application. In this tutorial, we use POCO (Plain Old CLR Object) classes to represent our book store models.

Right-click the BookStore project in Solution Explorer and, from the popup menu, select Add > New Folder. Name the folder Models, then add the following classes to the Models folder:

Where:

- Book and Press serve as entity types.

- Address serves as a complex type.

- Category serves as an enum type.

Build the Edm Model

OData uses the Entity Data Model (EDM) to describe the structure of data. In ASP.NET Core OData, it’s easy to build the EDM model from the CLR types above. So, add the following private static method at the end of the “Startup” class:

Here, we define two entity sets named “Books” and “Presses”.

Register Services through Dependency Injection

Register the OData Services

ASP.NET Core OData requires some services to be registered up front to provide its functionality. The library provides an extension method called “AddOData()” that registers the required OData services through the built-in dependency injection. So, add the following code to the “ConfigureServices” method in the “Startup” class:

Register the OData Endpoint

We also need to add an OData route to register the OData endpoint. We add an OData route named “odata” with the “odata” prefix to the MVC routes, and call “GetEdmModel()” to bind the EDM model to the endpoint. So, change the “Configure()” method in the “Startup” class as follows:

Query the metadata

The OData service is now ready to run and provides basic functionality, such as querying the metadata (the XML representation of the EDM). So, let’s build and run the web application. Once it’s running, we can use any client tool (for example, Postman) to issue the following request (remember to change the port in the URI):

GET http://localhost:5000/odata/$metadata

The response contains the metadata as the following XML:
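The $metadata document is written in the OData v4 CSDL XML format, so any XML parser can read it. The following Python sketch is an addition and not part of the tutorial's C# sample. It lists the entity sets declared in such a document. The sample XML is a hypothetical, heavily trimmed stand-in: the schema namespace `Default`, the container name `Container`, and the type names are assumptions. Only the CSDL namespace URI comes from the OData v4 specification.

```python
import urllib.request
import xml.etree.ElementTree as ET

# Element namespace defined by the OData v4 CSDL (XML) specification.
EDM_NS = "http://docs.oasis-open.org/odata/ns/edm"


def fetch_metadata(service_root: str) -> bytes:
    """Download <service_root>/$metadata (the service must be running)."""
    with urllib.request.urlopen(service_root.rstrip("/") + "/$metadata") as resp:
        return resp.read()


def list_entity_sets(metadata_xml) -> dict:
    """Map each EntitySet name to its EntityType in a $metadata document."""
    root = ET.fromstring(metadata_xml)
    return {
        entity_set.get("Name"): entity_set.get("EntityType")
        for entity_set in root.iter(f"{{{EDM_NS}}}EntitySet")
    }


# Hypothetical, trimmed document; a real response also declares the types.
SAMPLE_METADATA = """<edmx:Edmx Version="4.0" xmlns:edmx="http://docs.oasis-open.org/odata/ns/edmx">
  <edmx:DataServices>
    <Schema Namespace="Default" xmlns="http://docs.oasis-open.org/odata/ns/edm">
      <EntityContainer Name="Container">
        <EntitySet Name="Books" EntityType="BookStore.Models.Book" />
        <EntitySet Name="Presses" EntityType="BookStore.Models.Press" />
      </EntityContainer>
    </Schema>
  </edmx:DataServices>
</edmx:Edmx>"""

print(list_entity_sets(SAMPLE_METADATA))
# Against the running service (adjust the port):
# print(list_entity_sets(fetch_metadata("http://localhost:5000/odata")))
```

Run against the live service, the result should include the two entity sets registered in `GetEdmModel()`.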

Create the Database Context

Now we’re ready to add some real functionality. First, let’s introduce a database context into the web application. The database context is the main class that coordinates Entity Framework Core functionality for a given data model (the CLR classes).

In the “Models” folder, add a new class named “BookStoreContext” with the following contents:

The code in “OnModelCreating” maps “Address” as a complex type.

Register the Database Context

Second, register the database context through the built-in dependency injection during service configuration. So, change the “ConfigureServices” method in the “Startup” class as follows:

Inline Model Data

For simplicity, we build a class to contain the inline model data. The following code adds two books to this container:

Manipulate Resources

Build the Controller

In the “Controllers” folder, rename “ValuesController.cs” to “BooksController.cs” and replace its content with:

In this class, we add a private BookStoreContext instance to act as the database, and derive the controller from the “ODataController” class.

Retrieve the Resources

Add the following methods to the “BooksController”:

Where,

- Get() returns all books.

- Get(int key) returns a single book by its key.

Now we can query all books with “GET http://localhost:5000/odata/Books”. The result should be:

You can also issue a request such as “GET http://localhost:5000/odata/Books(2)” to get the book whose Id equals 2.
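The two requests return differently shaped responses. In OData v4 JSON, a collection such as `Books` is wrapped in a `value` array next to an `@odata.context` annotation. A single entity such as `Books(2)` carries its properties at the top level. The Python sketch below is a client-side helper, not part of the tutorial, and normalizes both shapes into a list of plain dictionaries. The payloads are hypothetical. `entity_url` handles only integer keys, an assumption that matches the `Get(int key)` action.

```python
import json


def entity_url(service_root: str, entity_set: str, key=None) -> str:
    """Build .../Books or .../Books(2); integer keys only in this sketch."""
    url = f"{service_root.rstrip('/')}/{entity_set}"
    return url if key is None else f"{url}({int(key)})"


def entities_from_payload(payload: dict) -> list:
    """Return the entities in an OData v4 JSON response as plain dicts.

    Collections arrive as {"@odata.context": ..., "value": [...]}, while a
    single entity arrives as one object. Annotations (keys starting with "@")
    are dropped. An entity with its own list-valued "value" property would be
    misread as a collection, which is acceptable for a sketch.
    """
    items = payload["value"] if isinstance(payload.get("value"), list) else [payload]
    return [{k: v for k, v in item.items() if not k.startswith("@")} for item in items]


# Hypothetical response bodies, shaped like OData v4 JSON.
collection = json.loads('{"@odata.context": "http://localhost:5000/odata/$metadata#Books",'
                        ' "value": [{"Id": 1, "Title": "Book A"}, {"Id": 2, "Title": "Book B"}]}')
single = json.loads('{"@odata.context": "http://localhost:5000/odata/$metadata#Books/$entity",'
                    ' "Id": 2, "Title": "Book B"}')

print(entity_url("http://localhost:5000/odata", "Books", 2))
print(entities_from_payload(collection))
print(entities_from_payload(single))
```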

Create the Resources

Adding the following lines of code to “BooksController” allows clients to create books in the service:

Note that the [FromBody] attribute is required in ASP.NET Core.

As an example, we can issue a POST request as follows to create a new book:

POST http://localhost:5000/odata/Books

Content-Type: application/json

Content:
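The request body is a JSON object holding the new book's properties. The following Python sketch builds such a request on the client side using only the standard library. The property names and values are hypothetical placeholders, so use the properties your `Book` class actually defines. The sketch only constructs the request. Sending it requires the service to be running.

```python
import json
import urllib.request


def build_create_request(service_root: str, entity_set: str, entity: dict) -> urllib.request.Request:
    """Build (but do not send) a POST that creates `entity` in `entity_set`."""
    return urllib.request.Request(
        url=f"{service_root.rstrip('/')}/{entity_set}",
        data=json.dumps(entity).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical book; adjust the property names to match your model.
new_book = {"ISBN": "000-0-000-00000-0", "Title": "Example Title", "Author": "Example Author", "Price": 42.0}
request = build_create_request("http://localhost:5000/odata", "Books", new_book)
print(request.get_method(), request.full_url)

# With the service running (adjust the port), send it:
# with urllib.request.urlopen(request) as response:
#     print(response.status, response.read().decode("utf-8"))
```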

Query the Resources

Adding the following line of code in Startup.cs enables all OData query options, for example $filter, $orderby, $expand, etc.

Here's a basic $filter example:

GET http://localhost:5000/odata/Books?$filter=Price le 50

The response content is:

It also supports more complex query options, for example:

GET http://localhost:5000/odata/Books?$filter=Price le 50&$expand=Press($select=Name)&$select=ISBN

The response content is:
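When these requests are sent from code instead of typed into a tool, the spaces in `$filter` expressions have to be percent-encoded. The following Python sketch assembles such URLs. The function name and the set of characters left unencoded are assumptions. Keyword names are the system query options without their leading `$`.

```python
from urllib.parse import quote

# Characters left readable in option values, so OData syntax such as
# Press($select=Name), Price,Title or 'text' literals stays intact.
SAFE_CHARS = "$()=,'/"


def odata_query_url(service_root: str, entity_set: str, **options) -> str:
    """Build e.g. .../Books?$filter=Price%20le%2050 from filter="Price le 50"."""
    url = f"{service_root.rstrip('/')}/{entity_set}"
    if not options:
        return url
    query = "&".join(f"${name}={quote(str(value), safe=SAFE_CHARS)}" for name, value in options.items())
    return f"{url}?{query}"


root = "http://localhost:5000/odata"
print(odata_query_url(root, "Books", filter="Price le 50"))
print(odata_query_url(root, "Books", filter="Price le 50", expand="Press($select=Name)", select="ISBN"))
```

`quote` encodes spaces as `%20`, whereas `urlencode` would produce `+` and also encode every `$` in the option names.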

Summary

Thanks to the OData community for their feedback, questions, issues, and contributions on GitHub. Without their help, we couldn't have delivered this version.

We encourage you to download the latest package from NuGet.org and start building amazing OData services running on ASP.NET Core. Enjoy!

Refer to the following links for more information:

- Open Data Protocol (OData) Specification

- ASP.NET Core & EF Core

- OData .NET Open Source (ODL & Web API)

- OData Tutorials & Samples

- This blog's sample project

The whole of WordPress compiled to .NET Core and a NuGet Package with PeachPie

Why? Because it's awesome. Sometimes a project comes along that is impossibly ambitious and it works. I've blogged a little about Peachpie, the open source PHP compiler that runs PHP under .NET Core. It's a project hosted at https://www.peachpie.io.

But...why? Here's why:

- Performance: compiled code is fast and also optimized by the .NET Just-in-Time Compiler for your actual system. Additionally, the .NET performance profiler may be used to resolve bottlenecks.

- C# Extensibility: plugin functionality can be implemented in a separate C# project and/or PHP plugins may use .NET libraries.

- Sourceless distribution: after the compilation, most of the source files are not needed.

- Power of .NET: Peachpie allows the compiled WordPress clone to run in a .NET JIT'ted, secure and manageable environment, updated through Windows Update.

- No need to install PHP: Peachpie is a modern compiler platform and runtime distributed as a dependency to your .NET project. It is downloaded automatically on demand as a NuGet package or it can be even deployed standalone together with the compiled application as its library dependency.

A year ago you could very happily run WordPress (a very NON-trivial PHP application, to be clear) under .NET Core using Peachpie. You would compile your PHP into an assembly and then do something like this in your Startup.cs:

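```csharp
public void Configure(IApplicationBuilder app)
{
    app.UseSession();

    // Hand requests for PHP scripts to the scripts compiled into the "peachweb" assembly.
    app.UsePhp(new PhpRequestOptions(scriptAssemblyName: "peachweb"));

    app.UseDefaultFiles();
    app.UseStaticFiles();
}
```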

And that's awesome. However, I noticed something on their GitHub recently, specifically under https://github.com/iolevel/wpdotnet-sdk. It says:

The solution compiles all of WordPress into a .NET assembly and additionally provides C# wrappers for utilization of compiled sources.

Whoa. Drink that in. The project consists of several parts:

- wordpress contains sources of WordPress that are compiled into a single .NET Core assembly (wordpress.dll). Together with its content files it is packed into a NuGet package PeachPied.WordPress. The project additionally contains the "must-use" plugin peachpie-api.php which exposes the WordPress API to .NET.

- PeachPied.WordPress.Sdk defines abstraction layer providing .NET interfaces over PHP WordPress instance. The interface is implemented and provided by peachpie-api.php.

- PeachPied.WordPress.AspNetCore is an ASP.NET Core request handler that configures the ASP.NET pipeline to pass requests to compiled WordPress scripts. The configuration includes response caching, short URL mapping, various .NET enhancements and the settings of WordPress database.

- app project is the executable demo ASP.NET Core web server making use of compiled WordPress.

They compiled the whole of WordPress into a NuGet Package.

YES.

- The compiled website runs on .NET Core

- You're using ASP.NET Core request handling and you can extend WordPress with C# plugins and themes

Seriously. Go get the .NET Core SDK version 2.1.301 over at https://dot.net and clone their repository locally from https://github.com/iolevel/wpdotnet-sdk.

Make sure you have a copy of MySQL running. I get one started FAST with Docker using this command:

docker run --name=mysql1 -p 3306:3306 -e MYSQL_ROOT_PASSWORD=password -e MYSQL_DATABASE=wordpress mysql --default-authentication-plugin=mysql_native_password

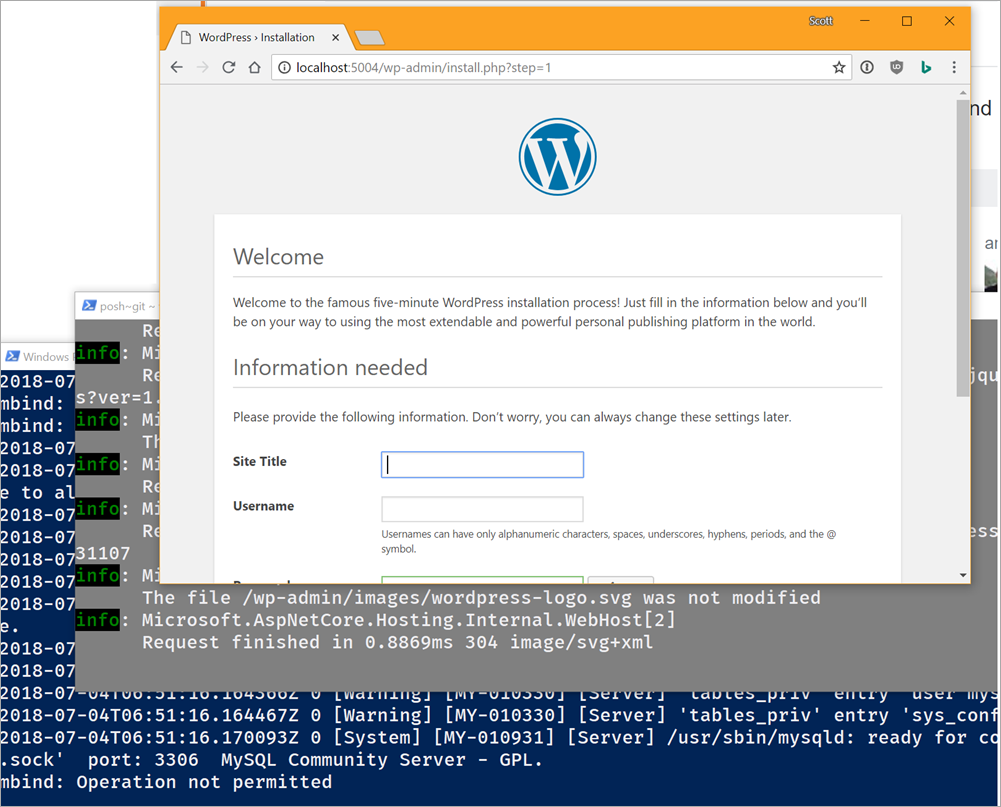

Then just run "dotnet build" at the root of the project, go into the app folder, and run "dotnet run". It will show up on localhost:5004.

NOTE: I needed to include the default authentication plugin to prevent the generic WordPress "Cannot establish database connection." error. I also added the MYSQL_DATABASE environment variable so I could avoid first logging in with the mysql client and creating the database manually with "CREATE DATABASE wordpress."
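The container needs a moment to start, so running `dotnet run` immediately can still hit that database error. The following Python helper is an addition and not part of the wpdotnet-sdk repository. It waits until something accepts TCP connections on port 3306. An open port is necessary but not sufficient evidence that MySQL is ready, so treat this as a convenience, not a health check.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 60.0, interval: float = 0.5) -> bool:
    """Poll until a TCP connection to host:port succeeds or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


# Usage, from the repository root after "dotnet build":
#   import subprocess
#   if wait_for_port("localhost", 3306):
#       subprocess.run(["dotnet", "run"], cwd="app", check=True)
```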

Look at that. I have MySQL in one terminal listening on 3306, and ASP.NET Core 2.1 running on port 5004 hosting freaking WordPress compiled into a single NuGet package.

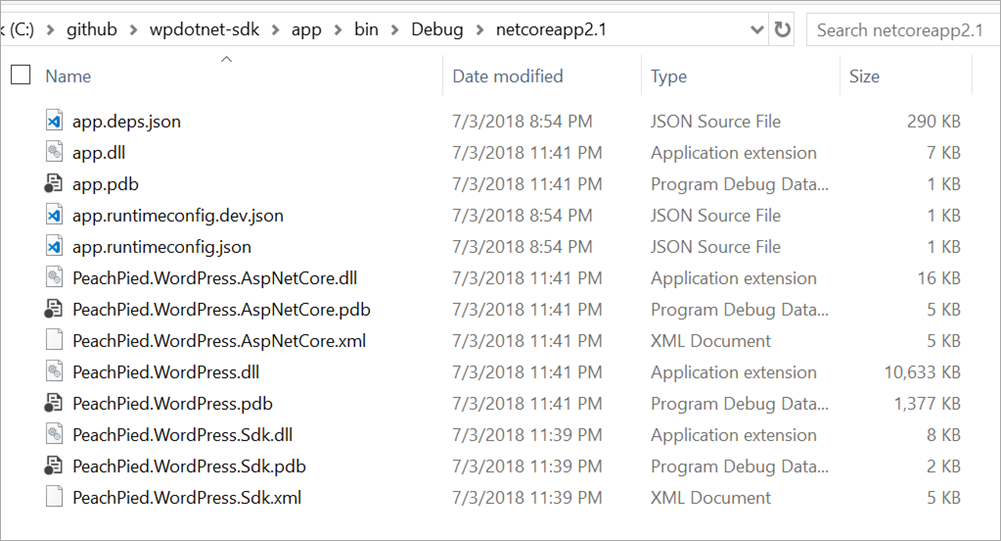

Here's my bin folder:

There are no PHP files, which is a nice security bonus: not only are you running from the one assembly, but there are no text files for any rogue plugins to modify or corrupt.

Here's the ASP.NET Core 2.1 app that hosts it, in full:

```csharp
using System.IO;
using Microsoft.AspNetCore;
using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.Hosting;
using Microsoft.Extensions.Configuration;
using PeachPied.WordPress.AspNetCore;

namespace peachserver
{
    class Program
    {
        static void Main(string[] args)
        {
            // make sure cwd is not app but its parent:
            if (Path.GetFileName(Directory.GetCurrentDirectory()) == "app")
            {
                Directory.SetCurrentDirectory(Path.GetDirectoryName(Directory.GetCurrentDirectory()));
            }

            var host = WebHost.CreateDefaultBuilder(args)
                .UseStartup<Startup>()
                .UseUrls("http://*:5004/")
                .Build();

            host.Run();
        }
    }

    class Startup
    {
        public void Configure(IApplicationBuilder app, IHostingEnvironment env, IConfiguration configuration)
        {
            // settings:
            var wpconfig = new WordPressConfig();
            configuration
                .GetSection("WordPress")
                .Bind(wpconfig);

            if (env.IsDevelopment())
            {
                app.UseDeveloperExceptionPage();
            }

            app.UseWordPress(wpconfig);
            app.UseDefaultFiles();
        }
    }
}
```

I think the app.UseWordPress() is such a nice touch. ;)

I often get emails from .NET developers asking what blog engine they should consider. Today, I think you should look closely at Peachpie and strongly consider running WordPress under .NET Core. It's a wonderful open source project that brings two fantastic ecosystems together! I'm looking forward to exploring this project more and I'd encourage you to check it out and get involved with Peachpie.

© 2018 Scott Hanselman. All rights reserved.

Microsoft Azure launches tamper-proof Azure Immutable Blob Storage for financial services

I’m pleased to announce that Azure Immutable Blob Storage is now in public preview – enabling financial institutions to store and retain data in a non-erasable and non-rewritable format – and at no additional cost. Azure Immutable Blob Storage meets the relevant storage requirements of three key financial industry regulations: the CFTC Rule 1.31(c)-(d), FINRA Rule 4511, and SEC Rule 17a-4. Financial services customers, representing one of the most heavily regulated industries in the world, are subject to complex requirements like the retention of financial transactions and related communication in a non-erasable and non-modifiable state. These strict requirements help to provide effective legal and forensic surveillance of market conduct.

Software providers and partners can now rely on Azure as a one-stop shop cloud solution for records retention and immutable storage with sensitive workloads. Financial institutions can now easily build their own applications taking advantage of these features while remaining compliant. These Write Once Read Many (WORM) policies apply to all tiers of storage (hot, cool, and archive). This industry leading compliance storage offering is now available at no additional cost on top of the base pricing of Azure storage!

To document compliance, Microsoft retained a leading independent assessment firm that specializes in records management and information governance, Cohasset Associates, to evaluate Azure Immutable Blob Storage and its compliance with requirements specific to the financial services industry. Cohasset validated that Azure Immutable Blob Storage, when used to retain time-based Blobs in a WORM state, meets the relevant storage requirements of CFTC Rule 1.31(c)-(d), FINRA Rule 4511, and SEC Rule 17a-4. Microsoft targeted this set of rules, as they represent the most prescriptive guidance globally for records retention for financial institutions. We are pleased to announce the release of Cohasset’s assessment of our immutable storage feature set, available today.

For customers seeking to decommission legacy SAN and other storage infrastructure, as well as take advantage of the economies of scale available in the cloud, Azure Immutable Blob Storage offers the perfect feature set:

- Data can be rendered immutable and cannot be modified or deleted by any user including those with account administrative privileges.

- Administrators can configure policies where data can be created and read, but not updated or deleted – otherwise known as WORM storage.

- The same Azure storage environment can be used for both standard and immutable storage. This means IT no longer needs to manage the complexity of a separate archive storage solution.

- Integration with archive, cool, and hot tiers of storage – allowing it to be used with data accessed frequently or infrequently. Discounts apply to storage accessed infrequently, either in cool standby or fully archived.

- Administrators can create lifecycle management policies to dictate rules for when data automatically moves between tiers. For example, data that has not been modified for two months moves to archive storage.
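The following toy, in-memory Python model makes the WORM semantics and the lifecycle rule concrete. It does not use the Azure Storage API or SDK. The class and function names are hypothetical, "two months" is approximated as 60 days, and only two tiers are modelled. Blobs can be created and read, overwrites are always refused, and deletes are refused until the time-based retention period has elapsed.

```python
from datetime import datetime, timedelta


class WormStore:
    """Toy time-based WORM store (hypothetical; not the Azure Storage API)."""

    def __init__(self, retention: timedelta):
        self.retention = retention
        self._blobs = {}  # name -> (data, created_at)

    def write(self, name: str, data: bytes, now: datetime) -> None:
        # Write once: creating a new blob is allowed, overwriting never is.
        if name in self._blobs:
            raise PermissionError(f"{name} is immutable and cannot be overwritten")
        self._blobs[name] = (data, now)

    def read(self, name: str) -> bytes:
        # Read many: reads are always allowed.
        return self._blobs[name][0]

    def exists(self, name: str) -> bool:
        return name in self._blobs

    def delete(self, name: str, now: datetime) -> None:
        # Deletes are refused for every caller until the retention period ends.
        _, created_at = self._blobs[name]
        expires_at = created_at + self.retention
        if now < expires_at:
            raise PermissionError(f"{name} is under retention until {expires_at}")
        del self._blobs[name]


def tier_for(last_modified: datetime, now: datetime, archive_after: timedelta = timedelta(days=60)) -> str:
    """Lifecycle rule from the example: not modified for ~two months -> archive."""
    return "archive" if now - last_modified > archive_after else "hot"


t0 = datetime(2018, 7, 1)
store = WormStore(retention=timedelta(days=365))
store.write("trade-0001", b"executed 100 @ 42.00", now=t0)
print(store.read("trade-0001"))
print(tier_for(last_modified=t0, now=t0 + timedelta(days=61)))
```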

This feature set is a result of Microsoft learning and partnering with a broad set of stakeholders across the financial services industry ecosystem. Azure is in a unique position to partner with banking and capital markets customers, given our global leadership in engaging with regulators, the industry leading audit rights we provide to customers, and our exclusive cloud compliance program. Microsoft also has a unique portfolio of financial services compliance offerings built into our products:

- The Service Trust Portal provides full unredacted audit reports for the Azure platform, including PCI DSS, SOC 1 Type 2, SOC 2 Type 2, and ISO 27001. It also includes compliance guides for implementing solutions on Azure subject to financial services regulations.

- Compliance Manager provides a cross-cloud view of control state, organized by Microsoft managed controls and institution managed controls. It includes workflow, allowing a compliance officer to assign control implementation or testing processes to any user through Azure Active Directory. These features were built in collaboration with leading financial institutions and regulators.

- Azure Advisor provides best practice guidance for the topics financial institutions care most about: resiliency, security, performance, and cost.

- Azure Security Center provides deeper visibility into the security threats facing your environment and makes those insights actionable with a few clicks.

This set of resources and features makes Azure the best destination for moving financial data into the cloud and helping ensure it meets the strict regulatory requirements imposed on the financial services industry. Get started today! For the full story of how Azure can help banks and insurers meet their regulatory responsibilities, visit The Microsoft Trust Center. Lastly, see our case studies for how customers are using Azure.

Lessons from big box retail

How can retail banks generate curated experiences? Learn from retailers. They use a cloud platform that orchestrates experiences from omni-channels to devices using data collected from various touchpoints. The data is combined and analyzed with Microsoft Azure Machine Learning technology. With these technologies, retailers can now understand the shopping behavior of a customer and offer them the products that are most relevant. Retailers deliver engaging in-store experiences by digitizing the store to deliver a curated experience to the shopper at every point in their shopping journey.

Figure above: Retail Bank branch shown as the hub for digital curated experiences

Imagine this: banks that provide financial “fitting rooms” where customers can “try on” products and services before they commit. That gives the customer the ability to experiment with the recommendations that fit their holistic life, not just their financial life. That feels a lot more personal and custom fitted to me.

Convergence! Wasn’t there a movie with that title? No, sorry, that was actually Divergent. Exciting sci-fi thriller where people are divided based on virtues, but that’s going in the wrong direction for this topic. So, what’s converging? Retail experiences are giving customers new expectations about banking.

Consumer expectations are being shaped by customer experience leaders outside of banking. Digital transformation in the retail banking industry has intense customer focus and all players are looking towards lean operations and delighted customers. Traditional activities will not sustain the same historical levels of revenue. These include direct/indirect marketing, customer requirement analysis and product design, advertising, campaign management and branch lobby leading. Instead, retail banks will need to build a much larger digital footprint through targeted marketing. This is done using big data, advanced analytics for product design, predictive demand modelling, elasticity modelling for pricing, and conjoint analysis for product configuration.

In the past, it was common to extract data to produce propensity models that hypothesize why customers leave. Those days are over. If retail banks really want to know precisely when to offer the next product, or why an experience won or lost the customer’s heart, they will have to pursue a combination of the following: augmented intelligence (via bots/agents, robo-advisors, intelligent automation), fintech partnerships/coopetition, predictive analytics, IoT, big data, and strategies for future possibilities (Virtual Presence, Augmented Reality, Seamless life integration).

So, what do retail banks need to do to innovate and evolve? Spend some time looking at the convergence of retail banking and big box retail. Dissect what the big box retailers are doing. The retail banking experience will be influenced by the big box retailer customer experience. For perspective, here’s how big box retail has evolved.

- In the 80s, Mass Merchandise was all about ready-for-pickup on display in retail stores. Walmart perfected the art of get-it-right-now-don’t-wait. In contrast, retail banking today has many processes that take a week to land. Small loans and credit cards still take days to fulfill.

- Starting in the 90s, in terms of Mass Market E-commerce, Amazon could ship anything from anywhere. The customer also had detailed product information, social proof, and price comparisons at their fingertips. When was the last time your bank provided you with reviews from other consumers who have the product you are interested in?

- In the mid 2000s, Retail 4.0 (Digital Transformation) began, and it then accelerated by blending the physical and digital experiences. Retailers created online-like experiences in stores by leveraging advanced sensors, digital devices, and the computer in your pocket. Great examples are showcased in Microsoft retail stores and Pier 1 Imports.

More and more, digital transformation in retail means custom-made, specially produced, and made-to-order at the point of sale—not just inside the store. And the convergence of these industries will ultimately drive high customer expectations for banks to provide the same.

What big box practices need to be implemented by retail banks? For starters, the retail bank of tomorrow will need the agility to navigate the customer through blended physical and virtual experiences. The customer can be in the branch, online, or on the phone. The experience will be guided using big data analytics and social channels to deliver holistic personal customer experiences based on customer goals—financial and non-financial. Retail banks will effectively blend humans with digital advisors and intelligent agents. And the branch of the future will reflect all these elements in one integrated experience. The experience will provide customer-centricity, cognitive and contextual interactions, innovative personalization, and high levels of trust.

Taking steps to pursue the curated experience is straightforward, but the paths taken to get there will vary widely. That’s why I recommend these steps:

- Develop a curation strategy aligned with the overall business strategy. Read the customer experience orchestration paper to help you get started with this step.

- Democratize artificial intelligence and make it an enterprise-wide capability. Read the Data Management in Banking Overview to help you get started with this step.

- Prioritize the data to be leveraged for analysis and insight.

- Find the right mix of curated experiences.

- Start with a clearly defined proof of business value for those experiences.

Delivering curation at every touch point throughout the financial shopping journey cannot happen without a rich platform like Microsoft Azure to orchestrate the curated experience. To engage with me on additional ideas and recommendations, contact me through LinkedIn and Twitter.

Workaround for Bower Version Deprecation

As of June 25, the version of Bower shipped with Visual Studio was deprecated, resulting in Bower operations failing when run in Visual Studio. If you use Bower, you will see an error something like:

EINVRES Request to https://bower.herokuapp.com/packages/bootstrap failed with 502

This will be fixed in Visual Studio 15.8. In the meantime, you can work around the issue by using a new version of Bower or by adding some configuration to each Bower project.

The Issue

Some time ago, Bower switched their primary registry feed but continued to support both. On June 25th, they turned off the older feed, which the copy of Bower in Visual Studio tries to use by default.

The Fix

There are two options to fix this issue:

1. Update the configuration for each Bower project to explicitly use the new registry: https://registry.bower.io

2. Manually install a newer version of Bower and configure Visual Studio to use it.

Workaround 1: Define the new registry entry in the project’s Bower config file (.bowerrc)

Add a .bowerrc file to the project (or modify the existing .bowerrc) with the following content:

```json
{
  "registry": "https://registry.bower.io"
}
```

With the registry property defined in a .bowerrc file in the project root, Bower operations should run successfully on the older versions of Bower that shipped with Visual Studio 2015 and Visual Studio 2017.
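If many projects need the change, a short script can add the setting without discarding anything already in `.bowerrc`. The following Python sketch assumes the file is plain JSON with no comments and that overwriting any existing `registry` value is acceptable. The function name is hypothetical.

```python
import json
from pathlib import Path

NEW_REGISTRY = "https://registry.bower.io"


def set_bower_registry(project_dir, registry: str = NEW_REGISTRY) -> dict:
    """Create or update <project_dir>/.bowerrc so Bower uses `registry`."""
    rc_path = Path(project_dir) / ".bowerrc"
    config = json.loads(rc_path.read_text(encoding="utf-8")) if rc_path.exists() else {}
    config["registry"] = registry  # other settings (e.g. "directory") are kept
    rc_path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return config


# Usage: set_bower_registry(r"C:\src\MyWebProject")
```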

Workaround 2: Configure Visual Studio to use a newer version of Bower

An alternative solution is to configure Visual Studio to use a newer version of Bower that you have installed as a global tool on your machine. (For instructions on how to install Bower, refer to the guidance on the Bower website.) If you have installed Bower via npm, then the path to the Bower tools will be contained in the system $(PATH) variable. It might look something like this: C:\Users\[username]\AppData\Roaming\npm. If you make this path available to Visual Studio via the External Web Tools options page, then Visual Studio will be able to find and use the newer version.

To configure Visual Studio to use the globally installed Bower tools:

1. Inside Visual Studio, open Tools->Options.

2. Navigate to the External Web Tools options page (under Projects and Solutions->Web Package Management).

3. Select the “$(PATH)” item in the Locations of external tools list box.

4. Repeatedly press the up-arrow button in the top right corner of the Options dialog until the $(PATH) item is at the top of the list.

5. Press OK to confirm the change.

Ordered this way, when Visual Studio is searching for Bower tools, it will search your system path first and should find and use the version you installed, rather than the older version that ships with Visual Studio in the $(VSINSTALLDIR)\Web\External directory.

Note: This change affects path resolution for all external tools. So, if you have Grunt, Gulp, npm or any other external tools on the system path, those tools will be used in preference to any other versions that shipped with VS. If you only want to change the path for Bower, leave the system path where it is and add a new entry at the top of the list that points to the instance of Bower installed locally. It might look something like this: C:\Users\[username]\AppData\Roaming\npm\node_modules\bower\bin
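The effect of the ordering can be modelled as a first-match search. The following Python sketch illustrates that rule and is not Visual Studio's implementation. It expands the `$(PATH)` and `$(VSINSTALLDIR)` entries, the only macros handled here, and returns the first directory that contains the tool. The `bower.cmd` file name used in the test is an assumption about the Windows shim npm installs.

```python
import os
from typing import Optional


def expand_locations(entries, path_value: str, vs_install_dir: str) -> list:
    """Expand the list-box entries into concrete directories, keeping their order."""
    directories = []
    for entry in entries:
        if entry == "$(PATH)":
            # Every directory on the system path, in PATH order.
            directories.extend(p for p in path_value.split(os.pathsep) if p)
        else:
            directories.append(entry.replace("$(VSINSTALLDIR)", vs_install_dir))
    return directories


def resolve_tool(tool_file: str, directories) -> Optional[str]:
    """Return the first <directory>/<tool_file> that exists, searching in order."""
    for directory in directories:
        candidate = os.path.join(directory, tool_file)
        if os.path.isfile(candidate):
            return candidate
    return None
```

Moving `$(PATH)` above the Visual Studio entry changes which `bower` wins. The same reordering also changes the result for every other tool found on the path, as the note above warns.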

We trust this will solve any issues related to the recent outage of the older bower registry. If you have any questions or comments, please leave them below.

Happy coding!

Justin Clareburt, Senior Program Manager, Visual Studio

Justin Clareburt is the Web Tools PM on the Visual Studio team. He has over 20 years of Software Engineering experience and brings to the team his expert knowledge of IDEs and a passion for creating the ultimate development experience.

Follow Justin on Twitter @justcla78

Shared PCH usage sample in Visual Studio

This post was written by Olga Arkhipova

Oftentimes, multiple projects in a Visual Studio solution use the same or very similar precompiled headers. As pch files are usually big and building them takes significant time, this leads to a popular question: can several projects use the same pch file, which would be built just once?

The answer is yes, but it requires a couple of tricks to satisfy cl’s check that the command line used for building the pch is the same as the command line used for building a source file that uses this pch.

Here is a sample solution with 3 projects: one (SharedPCH) builds the pch and the static library, and the other two (ConsoleApplication 1 and 2) use it. You can find the sample source code in our VCSamples GitHub repository.

When the ConsoleApplication projects reference the SharedPCH project, the build automatically links the SharedPCH static lib, but several project properties need to be changed as well:

1. C/C++ Additional Include Directories (/I) should contain the shared stdafx.h directory

2. C/C++ Precompiled Header Output File (/Fp) should be set to the shared pch file (produced by SharedPCH project)

3. If your projects are compiled with /Zi or /ZI (see more info about those switches at the end), the projects that use the shared pch need to copy the .pdb and .idb files produced by the shared pch project to their specific locations so the final .pdb files contain pch symbols.

As those properties need to be changed similarly for all projects, I created the SharedPCH.props and CustomBuildStep.props files and imported them to my projects using the Property Manager tool window.

SharedPch.props helps with #1 and #2 and is imported in all projects. CustomBuildStep.props helps with #3 and is imported into the projects that consume the pch, but not into the one that produces it. If your projects are using /Z7, you don’t need CustomBuildStep.props.

In SharedPch.props, I defined properties for the locations of the shared pch, pdb and idb files:

We wanted to have all build outputs under one root folder, separate from the sources, so I redefined the Output and Intermediate directories. This is not necessary for using a shared pch; it just makes experimentation easier, since you can delete one folder if something goes wrong.

I also adjusted the C/C++ ‘Additional Include Directories’ and ‘Precompiled Header Output File’ properties:

In CustomBuildStep.props, I defined a Custom Build Step that runs before the ClCompile target and copies the shared pch .pdb and .idb files if they are newer than the project’s .pdb and .idb files. Note that we are talking about the compiler’s intermediate pdb file here, not the final one produced by the linker.

If all files in the project are using one pch, that’s all we need to do, since when the pch is changed, all other files need to be recompiled as well, so at the end of the build we’ll have full pdb and idb files.

If your project uses more than one pch or contains files that are not using pch at all, you’ll need to change pdb file location (/Fd) for those files, so that it is not overridden by the shared pch pdb.

I used the command line property editor to define the commands. Each ‘xcopy’ command should be on its own line:

Alternatively, you can put all commands in a script file and just specify it as command line.
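As an example of such a script, here is a Python sketch of the copy step. It copies the shared compiler .pdb and .idb files only when they are newer than the project’s copies. The directory arguments and the `vc141` file names are assumptions. `vc141` is the default `vc$(PlatformToolsetVersion)` name for the Visual Studio 2017 toolset, so substitute the locations defined in SharedPch.props. Missing files are skipped, since only /ZI produces an .idb.

```python
import shutil
from pathlib import Path

# Hypothetical names: default compiler PDB/IDB names for the v141 toolset.
SYMBOL_FILES = ("vc141.pdb", "vc141.idb")


def copy_if_newer(src: Path, dst: Path) -> bool:
    """Copy src over dst when dst is missing or older; return True if copied."""
    if dst.exists() and dst.stat().st_mtime >= src.stat().st_mtime:
        return False
    dst.parent.mkdir(parents=True, exist_ok=True)
    # copy2 also preserves the modification time, so an unchanged source
    # compares as "not newer" on the next build.
    shutil.copy2(src, dst)
    return True


def sync_shared_pch_symbols(shared_int_dir, project_int_dir, names=SYMBOL_FILES) -> list:
    """Copy the shared pch's compiler symbol files into a consuming project's IntDir."""
    shared, project = Path(shared_int_dir), Path(project_int_dir)
    return [
        name
        for name in names
        if (shared / name).exists() and copy_if_newer(shared / name, project / name)
    ]
```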

Background information

/Z7, /ZI and /Zi compiler flags

When /Z7 is used, the debug information (mainly type information) is stored in each OBJ file. This includes types from the header files, which means that there is a lot of duplication in case of shared headers and OBJ size can be huge.

When /Zi or /ZI is used, the debug information is stored in a compiler PDB file. In a project, the source files often use the same PDB file (this is controlled by the /Fd compiler flag; the default value is $(IntDir)vc$(PlatformToolsetVersion).pdb), so the debug information is shared across them.

/ZI will also generate an IDB file to store information related to incremental compilation to support Edit and Continue.

Compiler PDB vs. linker PDB

As mentioned above, the compiler PDB is generated by /Zi or /ZI to store the debug information. Later, the linker generates a linker PDB by combining the information from the compiler PDB with additional debug information gathered during linking. The linker may also remove unreferenced debug information. The name of the linker PDB is controlled by the /PDB linker flag; the default value is $(OutDir)$(TargetName).pdb.

Give us your feedback!

Your feedback is a critical part of ensuring that we can deliver useful information and features. For any questions, reach out to us via Twitter at @visualc or via email at visualcpp@microsoft.com. For any issues or suggestions, please let us know via Help > Send Feedback > Report a Problem in the IDE.

dotnet outdated helps you keep your projects up to date

I've moved my podcast site over to ASP.NET Core 2.1 over the last few months. You might want to follow the saga by checking out some of the recent blog posts.

- Upgrading my podcast site to ASP.NET Core 2.1 in Azure plus some Best Practices

- Major build speed improvements - Try .NET Core 2.1 today

- Using ASP.NET Core 2.1's HttpClientFactory with Refit's REST library

- Penny Pinching in the Cloud: Deploying Containers cheaply to Azure

- Detecting that a .NET Core app is running in a Docker Container and SkippableFacts in XUnit

- Adding Cross-Cutting Memory Caching to an HttpClientFactory in ASP.NET Core with Polly

- The Programmer's Hindsight - Caching with HttpClientFactory and Polly Part 2

- Setting up Application Insights took 10 minutes. It created two days of work for me.

That's just a few of the posts. Be sure to check out the last several months' posts in the calendar view. Anyway, I've been trying lots of new open source tools and libraries like coverlet for .NET Core Code Coverage, and frankly, keeping my project files and dependencies up to date has sucked.

npm has "npm outdated" and Paket has "paket outdated," so why doesn't .NET Core have this too? Certainly at a macro level there are more things to consider, as NuGet would need to find outdated packages for UWP, C++, and a lot of other project types as well. However, if we just focus on .NET Core as an initial/primary use case, Jerrie Pelser has "dotnet outdated" for us and it's fantastic!

Once you've got the .NET Core 2.1 SDK or newer, just install the tool globally with one line:

dotnet tool install --global dotnet-outdated

At this point I'll run "dotnet outdated" on my podcast website. While that's running, let me just point you to https://github.com/jerriep/dotnet-outdated as a lovely example of how to release a tool (no matter how big or small) on GitHub.

- It has an AppVeyor CI link along with a badge showing you that it's passing its build and test suite. Nice.

- It includes both a NuGet link to the released package AND a myGet link and badge to the dailies.

- It's got clear installation and clear usage details.

- Bonus points for screenshots. While screenshots aren't accessible to all, I admit personally that I'm more likely to feel that a project is well-maintained if there are clear screenshots that tell me "what am I gonna get with this tool?"

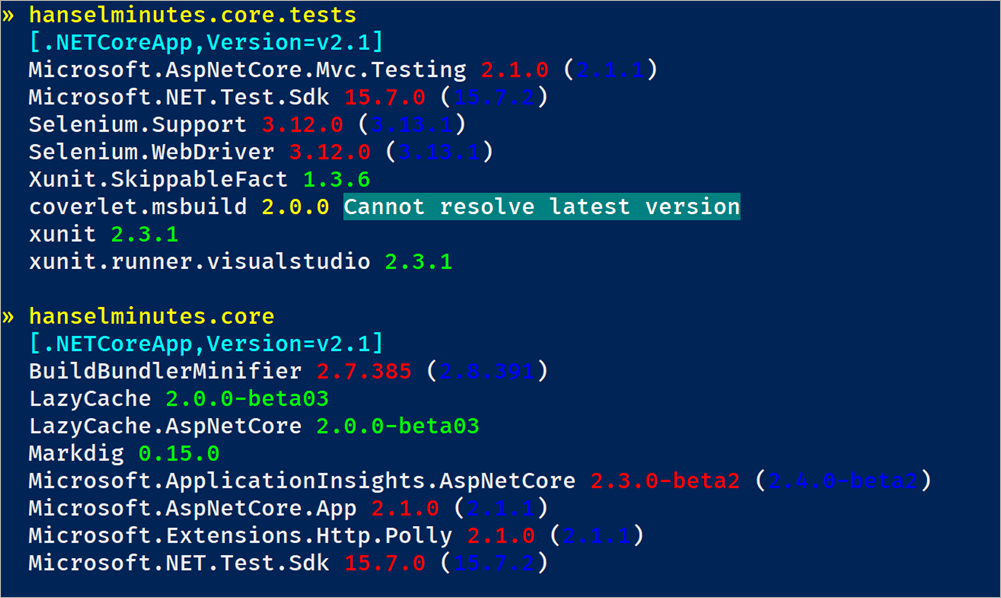

Here's the initial output on my Site and Tests.

After updating the patch versions, here's the output, this time as text. For some reason it's not seeing Coverlet's NuGet so I'm getting a "Cannot resolve latest version" error but I haven't debugged that yet.

```
» hanselminutes.core.tests [.NETCoreApp,Version=v2.1]
  Microsoft.AspNetCore.Mvc.Testing          2.1.1
  Microsoft.NET.Test.Sdk                    15.7.2
  Selenium.Support                          3.13.1
  Selenium.WebDriver                        3.13.1
  Xunit.SkippableFact                       1.3.6
  coverlet.msbuild                          2.0.1   Cannot resolve latest version
  xunit                                     2.3.1
  xunit.runner.visualstudio                 2.3.1

» hanselminutes.core [.NETCoreApp,Version=v2.1]
  BuildBundlerMinifier                      2.8.391
  LazyCache                                 2.0.0-beta03
  LazyCache.AspNetCore                      2.0.0-beta03
  Markdig                                   0.15.0
  Microsoft.ApplicationInsights.AspNetCore  2.4.0-beta2
  Microsoft.AspNetCore.App                  2.1.1
  Microsoft.Extensions.Http.Polly           2.1.1
  Microsoft.NET.Test.Sdk                    15.7.2
```

As with all projects and references, while things aren't *supposed* to break when you update a Major.Minor.Patch/Revision.build...things sometimes do. You should check your references and their associated websites and release notes to confirm that you know what's changed and you know what changes you're bringing in.

Shayne blogged about dotnet-outdated and points out the -vl (version lock) option, which lets you lock to Major or Minor versions. No need to take things you aren't ready to take.
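To make the idea of version locking concrete, here is a small conceptual sketch in C++. It is not dotnet-outdated's actual code; the Version, Lock, allowed, and latest names are hypothetical. With a Major lock, only candidates that keep the current major version qualify; with a Minor lock, both the major and minor versions must match.

```cpp
#include <array>
#include <cstddef>

// A package version in Major.Minor.Patch form (hypothetical type).
struct Version {
    int major;
    int minor;
    int patch;
};

// Lexicographic comparison: true if a is older than b.
constexpr bool older(const Version& a, const Version& b) {
    if (a.major != b.major) return a.major < b.major;
    if (a.minor != b.minor) return a.minor < b.minor;
    return a.patch < b.patch;
}

// Hypothetical lock levels: no lock, lock the major version, lock major.minor.
enum class Lock { None, Major, Minor };

// True if candidate may replace current under the given lock.
constexpr bool allowed(const Version& current, const Version& candidate, Lock lock) {
    switch (lock) {
        case Lock::None:
            return true;
        case Lock::Major:
            return candidate.major == current.major;
        case Lock::Minor:
            return candidate.major == current.major && candidate.minor == current.minor;
    }
    return false;
}

// Newest available version permitted by the lock; stays at current if none is newer.
template <std::size_t N>
constexpr Version latest(const Version& current, const std::array<Version, N>& available,
                         Lock lock) {
    Version best = current;
    for (const Version& v : available) {
        if (allowed(current, v, lock) && older(best, v)) best = v;
    }
    return best;
}
```

For a package at 2.1.1 with 2.1.3, 2.2.0, and 3.0.0 available, this sketch picks 3.0.0 with no lock, 2.2.0 with a Major lock, and 2.1.3 with a Minor lock.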

All in all, a super useful tool that you should install TODAY.

dotnet tool install --global dotnet-outdated

The source is up at https://github.com/jerriep/dotnet-outdated if you want to leave issues or get involved.


© 2018 Scott Hanselman. All rights reserved.

Moving from Hosted XML process to Inheritance process – Private Preview

Top Stories from the Microsoft DevOps Community – 2018.07.06

MSVC Preprocessor Progress towards Conformance

Why rewrite the preprocessor?

Recently, we published a blog post on C++ conformance completion. As mentioned in that post, the preprocessor in MSVC is currently getting an overhaul. We are doing this to improve its language conformance, address some longstanding bugs that were difficult to fix due to its design, and improve its usability and diagnostics. In addition, there are places in the standard where the preprocessor behavior is undefined or unspecified and our traditional behavior diverged from other major compilers. In some of those cases, we want to move closer to the ecosystem to make it easier for cross-platform libraries to come to MSVC.

If there is an old library you use or maintain that depends on the non-conformant traditional behavior of the MSVC preprocessor, you don’t need to worry about these breaking changes as we will still support this behavior. The updated preprocessor is currently under the switch /experimental:preprocessor until it is fully implemented and production-ready, at which point it will be moved to a /Zc: switch which is enabled by default under /permissive- mode.

How do I use it?

The behavior of the traditional preprocessor is maintained and continues to be the default behavior of the compiler. The conformant preprocessor can be enabled by using the /experimental:preprocessor switch on the command line starting with the Visual Studio 2017 15.8 Preview 3 release.

We have introduced a new predefined macro in the compiler called “_MSVC_TRADITIONAL” to indicate whether the traditional preprocessor is being used. This macro is set unconditionally, independent of which preprocessor is invoked: its value is “1” for the traditional preprocessor and “0” for the conformant experimental preprocessor.

```cpp
#if defined(_MSVC_TRADITIONAL) && _MSVC_TRADITIONAL
// Logic using the traditional preprocessor
#else
// Logic using cross-platform compatible preprocessor
#endif
```

What are the behavior changes?

This first experimental release focuses on making macro expansion conformant, to maximize adoption of new libraries by the MSVC compiler. Below is a list of some of the more common breaking changes we ran into when testing the updated preprocessor with real-world projects.

Behavior 1 [macro comments]

The traditional preprocessor is based on character buffers rather than preprocessor tokens. This allows unusual behavior such as this preprocessor comment trick which will not work under the conforming preprocessor:

```cpp
#if DISAPPEAR
#define DISAPPEARING_TYPE /##/
#else
#define DISAPPEARING_TYPE int
#endif

// myVal disappears when DISAPPEARING_TYPE is turned into a comment
// To make this standard compliant, wrap the following line with the appropriate #if/#endif
DISAPPEARING_TYPE myVal;
```
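A minimal sketch of that #if/#endif approach follows. It assumes DISAPPEAR is a configuration macro that defaults to 0 when it is not defined (that default is an assumption for illustration). The declaration is excluded by a directive instead of by a macro that expands to a comment, so it behaves the same under both preprocessors.

```cpp
// Hypothetical default: treat an undefined DISAPPEAR as "keep the declaration".
#ifndef DISAPPEAR
#define DISAPPEAR 0
#endif

// Exclude the declaration with a directive instead of the "//" macro trick.
#if !DISAPPEAR
int myVal = 0;
#endif
```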

Behavior 2 [L#val]

The traditional preprocessor incorrectly combines a string prefix with the result of the # operator:

```cpp
#define DEBUG_INFO(val) L"debug prefix:" L#val
// The L prefix applied to #val (L#val) is the problem.

const wchar_t *info = DEBUG_INFO(hello world);
```

In this case the L prefix is unnecessary because the adjacent string literals get combined after macro expansion anyway. The backward compatible fix is to change the definition to:

```cpp
#define DEBUG_INFO(val) L"debug prefix:" #val
// No prefix on #val: the adjacent literals are still combined into one wide string.
```

This issue is also found in convenience macros that ‘stringize’ the argument to a wide string literal:

```cpp
// The traditional preprocessor creates a single wide string literal token
#define STRING(str) L#str

// Potential fixes:

// Use string concatenation of L"" and #str to add prefix
// This works because adjacent string literals are combined after macro expansion
#define STRING1(str) L""#str

// Add the prefix after #str is stringized with additional macro expansion
#define WIDE(str) L##str
#define STRING2(str) WIDE(#str)

// Use concatenation operator ## to combine the tokens.
// The order of operations for ## and # is unspecified, although all compilers
// I checked perform the # operator before ## in this case.
#define STRING3(str) L## #str
```
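The two fixes whose behavior is fully specified (concatenating with L"", and pasting the prefix after an extra expansion step) can be checked at compile time. This sketch assumes C++17 for constexpr std::wstring_view; WSTRINGIZE1, WSTRINGIZE2, and WIDEN are hypothetical renamings of STRING1, STRING2, and WIDE so the block stands alone.

```cpp
#include <string_view>

// Option 1 (hypothetical name): concatenate an empty wide literal with the
// stringized argument; the adjacent literals form one wide string literal.
#define WSTRINGIZE1(str) L"" #str

// Option 2 (hypothetical names): stringize first, then paste the L prefix
// onto the resulting narrow literal in a second macro.
#define WIDEN(s) L##s
#define WSTRINGIZE2(str) WIDEN(#str)

constexpr std::wstring_view w1 = WSTRINGIZE1(hello world);  // L"hello world"
constexpr std::wstring_view w2 = WSTRINGIZE2(hello world);  // L"hello world"
```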

Behavior 3 [warning on invalid ##]

When the ## operator does not result in a single valid preprocessing token, the behavior is undefined. The traditional preprocessor will silently fail to combine the tokens. The new preprocessor will match the behavior of most other compilers and emit a diagnostic.

```cpp
// The ## is unnecessary and does not result in a single preprocessing token.
#define ADD_STD(x) std::##x

// Declare a std::string
ADD_STD(string) s;
```
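Because std, ::, and the macro argument are already separate tokens, the portable fix is simply to drop the ##. A minimal sketch that should compile the same way under both preprocessors (the names ADD_STD and s are taken from the example above):

```cpp
#include <string>

// No token pasting needed: "std", "::" and x are already separate tokens.
#define ADD_STD(x) std::x

// Declares a std::string named s.
ADD_STD(string) s;
```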

Behavior 4 [comma elision in variadic macros]

Consider the following example:

```cpp
void func(int, int = 2, int = 3);

// This macro replacement list has a comma followed by __VA_ARGS__
#define FUNC(a, ...) func(a, __VA_ARGS__)

int main()
{
    // The following macro is replaced with:
    // func(10,20,30)
    FUNC(10, 20, 30);

    // A conforming preprocessor will replace the following macro with: func(1, );
    // Which will result in a syntax error.
    FUNC(1, );
}
```

All major compilers have a preprocessor extension that helps address this issue. The traditional MSVC preprocessor always removes commas before empty __VA_ARGS__ replacements. In the updated preprocessor, we have decided to more closely follow the behavior of other popular cross-platform compilers. For the comma to be removed, the variadic argument must be missing (not just empty) and it must be marked with a ## operator.

```cpp
#define FUNC2(a, ...) func(a , ## __VA_ARGS__)

int main()
{
    // The variadic argument is missing in the macro being invoked
    // Comma will be removed and replaced with:
    // func(1)
    FUNC2(1);

    // The variadic argument is empty, but not missing (notice the
    // comma in the argument list). The comma will not be removed
    // when the macro is replaced.
    // func(1, )
    FUNC2(1, );
}
```

In the upcoming C++2a standard, this issue has been addressed by adding __VA_OPT__, which the new MSVC preprocessor does not yet implement.
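For comparison, here is a minimal sketch of the C++2a approach. It assumes a compiler that already implements __VA_OPT__ (for example, recent GCC or Clang releases), so it will not build with the experimental preprocessor described here. The constexpr func is a hypothetical stand-in for the declaration above that returns the sum of its arguments, and FUNC3 is likewise a hypothetical name. Unlike the ## comma-elision extension, __VA_OPT__ drops the comma both when the variadic argument is missing and when it is empty.

```cpp
// Hypothetical constexpr stand-in for func(int, int = 2, int = 3) above:
// it returns the sum of its arguments so each expansion can be checked.
constexpr int func(int a, int b = 2, int c = 3) { return a + b + c; }

// __VA_OPT__(,) emits a comma only when __VA_ARGS__ contains tokens, so the
// comma disappears whether the variadic argument is missing or empty.
#define FUNC3(a, ...) func(a __VA_OPT__(,) __VA_ARGS__)

// FUNC3(10, 20, 30) -> func(10 , 20, 30)  == 60
// FUNC3(1)          -> func(1 )           == 6
// FUNC3(1, )        -> func(1 )           == 6
```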

Behavior 5 [macro arguments are ‘unpacked’]

In the traditional preprocessor, if a macro forwards one of its arguments to another dependent macro then the argument does not get “unpacked” when it is substituted. Usually this optimization goes unnoticed, but it can lead to unusual behavior:

```cpp
// Create a string out of the first argument, and the rest of the arguments.
#define TWO_STRINGS( first, ... ) #first, #__VA_ARGS__
#define A( ... ) TWO_STRINGS(__VA_ARGS__)

const char* c[2] = { A(1, 2) };

// Conformant preprocessor results:
// const char* c[2] = { "1", "2" };

// Traditional preprocessor results, all arguments are in the first string:
// const char* c[2] = { "1, 2", };
```

When expanding A(), the traditional preprocessor forwards all of the arguments packaged in __VA_ARGS__ to the first argument of TWO_STRINGS, which leaves the variadic argument of TWO_STRINGS empty. This causes the result of #first to be “1, 2” rather than just “1”. If you are following along closely, then you may be wondering what happened to the result of #__VA_ARGS__ in the traditional preprocessor expansion: if the variadic parameter is empty it should result in an empty string literal “”. Due to a separate issue, the empty string literal token was not generated.
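Under a conformant preprocessor, the expected result can be verified at compile time. This sketch assumes C++17 (for constexpr std::string_view) and a conformant preprocessor such as GCC's, Clang's, or the experimental MSVC one; with the traditional preprocessor, c[0] would instead be "1, 2". Making c constexpr is the only change from the example above.

```cpp
#include <string_view>

// Same macros as above: A forwards all of its arguments to TWO_STRINGS.
#define TWO_STRINGS(first, ...) #first, #__VA_ARGS__
#define A(...) TWO_STRINGS(__VA_ARGS__)

// A conformant preprocessor re-splits the forwarded arguments: { "1", "2" }.
constexpr const char* c[2] = { A(1, 2) };
```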

Behavior 6 [rescanning replacement list for macros]

After a macro is replaced, the resulting tokens are rescanned for additional macro identifiers that need to be replaced. The algorithm used by the traditional preprocessor for doing the rescan is not conformant as shown in this example based on actual code:

```cpp
#define CAT(a,b) a ## b
#define ECHO(...) __VA_ARGS__

// IMPL1 and IMPL2 are implementation details
#define IMPL1(prefix,value) do_thing_one( prefix, value)
#define IMPL2(prefix,value) do_thing_two( prefix, value)

// DO_THING chooses the expansion behavior based on the value passed to macro_switch
#define DO_THING(macro_switch, b) CAT(IMPL, macro_switch) ECHO(( "Hello", b))

DO_THING(1, "World");

// Traditional preprocessor:
// do_thing_one( "Hello", "World");

// Conformant preprocessor:
// IMPL1 ( "Hello","World");
```

Although this example is a bit contrived, we have run into this issue a few times when testing the preprocessor changes against real-world code. To see what is going on, we can break down the expansion. First, DO_THING is expanded:

DO_THING(1, "World") --> CAT(IMPL, 1) ECHO(("Hello", "World"))

Second, CAT is expanded:

CAT(IMPL, 1) --> IMPL ## 1 --> IMPL1

Which puts the tokens into this state:

IMPL1 ECHO(("Hello", "World"))

The preprocessor finds the function-like macro identifier IMPL1, but it is not followed by a “(”, so it is not considered a function-like macro invocation. It moves on to the following tokens and finds the function-like macro ECHO being invoked:

ECHO(("Hello", "World")) --> ("Hello", "World")

IMPL1 is never considered again for expansion, so the full result of the expansions is:

IMPL1("Hello", "World");

The macro can be modified to behave the same under the experimental preprocessor and the traditional preprocessor by adding in another layer of indirection:

```cpp
#define CAT(a,b) a##b
#define ECHO(...) __VA_ARGS__

// IMPL1 and IMPL2 are macro implementation details
#define IMPL1(prefix,value) do_thing_one( prefix, value)
#define IMPL2(prefix,value) do_thing_two( prefix, value)

#define CALL(macroName, args) macroName args
#define DO_THING_FIXED(a,b) CALL( CAT(IMPL, a), ECHO(( "Hello",b)))

DO_THING_FIXED(1, "World");

// macro expanded to:
// do_thing_one( "Hello", "World");
```
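To check the indirection end to end, the sketch below gives do_thing_one and do_thing_two hypothetical constexpr definitions that simply report which one was selected, so the expansion can be verified at compile time under a conformant preprocessor.

```cpp
// Hypothetical constexpr stand-ins that report which implementation was chosen.
constexpr int do_thing_one(const char*, const char*) { return 1; }
constexpr int do_thing_two(const char*, const char*) { return 2; }

#define CAT(a, b) a##b
#define ECHO(...) __VA_ARGS__
#define IMPL1(prefix, value) do_thing_one(prefix, value)
#define IMPL2(prefix, value) do_thing_two(prefix, value)

// CALL places the selected macro name directly in front of its parenthesized
// arguments, so the rescan of CALL's result sees "IMPL1 (...)" as an invocation.
#define CALL(macroName, args) macroName args
#define DO_THING_FIXED(a, b) CALL(CAT(IMPL, a), ECHO(("Hello", b)))

constexpr int chosen = DO_THING_FIXED(1, "World");  // do_thing_one("Hello", "World")
```

Passing 2 instead selects IMPL2, so DO_THING_FIXED(2, "World") evaluates to 2 in this sketch.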

What’s next…

The preprocessor overhaul is not yet complete; we will continue to make changes under the experimental mode and fix bugs from early adopters.

- Some preprocessor directive logic needs completion rather than falling back to the traditional behavior

- Support for _Pragma

- C++20 features

- Additional diagnostic improvements

- New switches to control the output under /E and /P

- Boost blocking bug: logical operators in preprocessor constant expressions are not fully implemented in the new preprocessor, so on #if directives the new preprocessor can fall back to the traditional preprocessor. This is only noticeable when macros that are not compatible with the traditional preprocessor are expanded, which can be the case when building Boost preprocessor slots.

In closing

We’d love for you to download Visual Studio 2017 version 15.8 preview and try out all the new experimental features.

As always, we welcome your feedback. We can be reached via the comments below or via email (visualcpp@microsoft.com). If you encounter other problems with MSVC in Visual Studio 2017 please let us know through Help > Report A Problem in the product, or via Developer Community. Let us know your suggestions through UserVoice. You can also find us on Twitter (@VisualC) and Facebook (msftvisualcpp).

Introducing Dev Spaces for AKS

Today I’m excited to announce the public preview of Dev Spaces for Azure Kubernetes Services (AKS), an easy way to build and debug applications for Kubernetes – only available on Azure. At Microsoft Build, Scott Hanselman showed how you could use Dev Spaces to quickly debug and fix a single application inside a complex microservices environment. In case you missed the event or were distracted by the on-stage theatrics, it’s worth reviewing why Dev Spaces is such a unique product.

Imagine you are a new employee trying to fix a bug in a complex microservices application consisting of dozens of components, each with their own configuration and backing services. To get started, you must configure your local development environment so that it can mimic production, including setting up your IDE, build toolchain, containerized service dependencies, a local Kubernetes environment, mocks for backing services, and more. With all the time involved in setting up your development environment, fixing that first bug could take days.

Or you could use Dev Spaces and AKS.

Set up your dev environment in seconds

With Dev Spaces, all a new developer needs is their IDE and the Azure CLI. Simply create a new Dev Space inside AKS and begin working on any component in the microservice environment safely, without impeding other traffic flows.

azds space select --name gabe

Under the covers, Dev Spaces creates a Kubernetes namespace and populates it with only the microservices that are under active development inside your IDE. With Dev Spaces setting up your environment now takes seconds, not days.

Iterate on code changes rapidly

Now that your environment is set up, it’s time to write some code. At Microsoft we call this process the “inner loop” – while you’re writing code, but before you’ve pushed to version control. With containers and Kubernetes, the process of writing code can be painfully slow as you wait for container images to build, push to a registry, and deploy to your cluster. Dev Spaces includes code synchronization technology to sync your IDE with live containers running inside AKS. Want a fast inner-loop experience? With Dev Spaces, changes in your IDE are pushed up to your AKS cluster in seconds.

Debug your containers remotely

If you’ve ever used a software debugger, you’ll know how empowering it can be to set breakpoints, inspect your call stack, and even modify memory live as you’re coding. Unfortunately, remote debugging with containers is brittle and difficult to configure. In fact, most developers working on container-based microservices are forced to use print line debugging, which is slow and time consuming, especially when your changes depend on a complex set of surrounding services. Thanks to the innovative use of service mesh technology in Dev Spaces, a developer can use a simple hostname prefix to work on any service in isolation.

For example, let’s say I have a service running at contoso.com that must traverse 10 microservices before it returns a result. If I have a Dev Space named `gabe`, I can hit:

gabe.s.contoso.com

With this special URL prefix, traffic will be routed through any services under active development. This means I can set breakpoints in my IDE on service 3 and service 7, while using unmodified instances of the other services, all without interrupting any of the normal traffic flows. How cool is that!? Today we support remote debugging with .NET Core and Node.js, with more languages on the way.
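As a purely conceptual illustration of the hostname-prefix convention (not how Dev Spaces routes traffic internally), here is a small C++17 sketch that extracts the space name from a host of the form <space>.s.<domain>, such as gabe.s.contoso.com. The function name dev_space_from_host is hypothetical, and an empty result simply means the host carries no space prefix.

```cpp
#include <string_view>

// Hypothetical helper: returns the space name from "<space>.s.<domain>",
// or an empty string_view when the host carries no such prefix.
constexpr std::string_view dev_space_from_host(std::string_view host) {
    const auto dot = host.find('.');
    // Require a non-empty first label followed immediately by ".s."
    if (dot == std::string_view::npos || dot == 0 ||
        host.substr(dot, 3) != ".s.") {
        return {};
    }
    return host.substr(0, dot);
}
```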

Now that you know what you can do with Dev Spaces, head over to our documentation to get started. And to see how to use Azure Dev Spaces as part of your development flow with Visual Studio and Visual Studio Code see this blog.

Bootstrap new applications easily

Developers love the agility, reliability, and scalability benefits that Kubernetes provides. They love the community and the open source ecosystem around Kubernetes. The YAML manifests and Dockerfiles required to use Kubernetes? Not so much. Fortunately, Dev Spaces features application scaffolding that makes it easy to bootstrap a new application:

azds prep

This command will detect your application type and lay down a Dockerfile, Helm chart, and other metadata into your source tree. This makes it easy to containerize and package your application for Kubernetes without having to learn the arcane details. Since the scaffolding becomes a part of your source code, you can tweak the configuration as you see fit. You can also modify your scaffolded assets to help transition your app from development to production.

Use open source technologies

Though Dev Spaces is only available on Azure, the scaffolding builds upon the open source Draft project, which provides an inner-loop experience on any Kubernetes cluster. Over time this will mean that any project using Draft can switch to Dev Spaces and vice-versa. As we continue to improve Dev Spaces on Azure, you can expect more innovation in the open source Draft project which will benefit the entire Kubernetes community.

It’s been an exciting month for the Azure team. AKS is now generally available and has grown over 80 percent in the last 30 days. AKS is now available in 12 regions including East US 2 and Japan East, both added since general availability two weeks ago. I spent the last few weeks showing off Dev Spaces to audiences around the globe and the response has been overwhelmingly positive. Dev Spaces makes developing against a complex microservices environment simple. Give it a try. We can’t wait to hear your feedback.

Azure Marketplace new offers June 1–15

We continue to expand the Azure Marketplace ecosystem. From June 1 to 15, 21 new offers successfully met the onboarding criteria and went live. See details of the new offers below:

A10 Lightning ADC with Harmony Controller - SaaS: Gain cloud-native load balancing, advanced traffic management, central policy management, application security, per-application traffic visibility, analytics, and insights.

Actian Vector Analytic Database Enterprise Edition: Actian Vector is a developer-friendly high-performance analytics engine that requires minimal setup, provides automatic tuning to reduce database administration effort, and enables highly responsive end-user BI reporting.

ADFS 4.0 Server Windows 2016: Quickly deploy a new ADFS 2016 server preloaded with the ADFS role, ADFS PowerShell module, and prerequisites. Add the VM to your Active Directory domain and follow the setup GUI to get Active Directory Federation Services up and running.

Build Agent for VSTS: This solution template simplifies the deployment of a private build agent to a single click. You can create a full deployment of private build agents for VSTS on Azure virtual machines using your Azure subscription.

CIS SUSE Linux 12 Benchmark v2.0.0.0 - L1: This instance of SUSE Linux 12 is hardened according to a CIS Benchmark. CIS Benchmarks are consensus-based security configuration guides developed and accepted by government, business, industry, and academia.

hMailServer Email Server 2016: Quickly deploy hMail's open-source mail server on Windows 2016 using a local MySQL installation. It supports the common email protocols (IMAP, SMTP, and POP3), can be used with many web mail systems, and has score-based spam protection.

Neopulse AI Studio™: Design, train, and verify AI models quickly and easily using the NeoPulse Modeling language (NML). NeoPulse AI Studio is a complete framework for training, querying, and managing AI models.

Neopulse Query Runtime™: Deploy models trained with NeoPulse AI Studio so they can be queried as a RESTful web service. NeoPulse Query Runtime (NPQR) provides the functionality to query AI models generated by NeoPulse AI Studio.

NetScaler 12.1 VPX Express - 20 Mbps: NetScaler VPX Express is a free virtual application delivery controller that combines the latest cloud-native features with a simple user experience. NetScaler comes with one-click provisioning to help your IT teams swiftly set it up.

NetScaler ADC: Load Balancer, SSL VPN, WAF & SSO: This ARM template is designed to ensure an easy and consistent way of deploying the NetScaler pair in Active-Passive mode. The template increases reliability and system availability with built-in redundancy.

OutSystems on Microsoft Azure: Deliver innovative apps faster with OutSystems' low-code application platform combined with the advanced services, scale, and global reach of Microsoft Azure.

Resource Central 4.0: With a revamped user interface to make it look and feel consistent with Office 2016, Resource Central 4.0 by Add-On Products has advanced catering menu options, menu labeling, and a floor-plan feature that gives a visual representation of each location.

SoftNAS Cloud Enterprise: SoftNAS Cloud Enterprise is a software-defined, full-featured enterprise cloud NAS filer for primary data using either block (Standard or Premium Disk), object (Hot, Cool, or Cold Blob), or a combination of both types of storage.

SoftNAS Cloud Essentials: SoftNAS Cloud Essentials supports Veeam synthetic full backups to Azure Blob and provides file services for object storage, lowering the overall storage cost of “cool” secondary data for cloud backup storage, disaster recovery, and tape-to-cloud archives.

SoftNAS Cloud Platinum: SoftNAS Cloud Platinum is a fully integrated cloud data platform for companies that want to go digital-first faster with hybrid/multi-cloud deployments while maintaining control over price, performance, security, and reliability.

Squid Proxy Cache Server: Squid is a caching proxy for the web, supporting HTTP, HTTPS, FTP, and more. Squid offers a rich set of traffic optimization options, and this Squid proxy caching server offers speed and high performance.

TensorFlow CPU MKL Notebook: This is an integrated deep-learning software stack with TensorFlow, an open-source software library; Keras, an open-source neural network library; Python, a programming language; and Jupyter Notebook, an interactive notebook.

Vormetric Data Security Manager v6.0.2: The center point for multi-cloud advanced encryption, the Vormetric Data Security Manager (DSM) provisions and manages keys for Vormetric Data Security solutions, including Vormetric Transparent Encryption and Application Encryption.

Windows Server 2019 Datacenter Preview: Windows Server 2019 Datacenter Preview is a server operating system designed to run the apps and infrastructure that power your business. It includes built-in layers of security to help you run traditional and cloud-native apps.

ZeroDown Software Business Continuity As a Service: ZeroDown Software technology provides businesses with continuous access to their company data via the Business Continuity-as-a-Service (BCaaS) architecture, protecting apps and transactions from network interruptions.

ZEROSPAM email Security: Get enterprise-grade, cloud-based email security that stops phishing, spear phishing, ransomware, and zero-day attacks. ZEROSPAM is deployed on a per-domain basis and is fully compatible with Exchange and Office 365.

Supercharging the Git Commit Graph III: Generations and Graph Algorithms

Azure.Source – Volume 39

Now in preview

Azure Event Hubs and Service Bus VNET Service Endpoints in public preview - Azure Event Hubs and Service Bus join the growing list of Azure services enabled for Virtual Network Service Endpoints. Virtual Network (VNet) service endpoints extend your virtual network private address space and the identity of your VNet to the Azure services, over a direct connection. Endpoints allow you to secure your critical Azure service resources to only your virtual networks. Traffic from your VNet to the Azure service always remains on the Microsoft Azure backbone network.

IP filtering for Event Hubs and Service Bus - IP Filter rules are now available in public preview for Service Bus Premium and Event Hubs Standard and Dedicated price plans. For scenarios in which you want Service Bus or Azure Event Hubs to only be accessible from certain well-known sites, IP filter rules enable you to configure when to reject or accept traffic originating from specific IPv4 addresses.

New Azure #CosmosDB Explorer now in public preview - Azure Cosmos DB Explorer (https://cosmos.azure.com) is a full-screen, standalone web-based version of the Azure Cosmos DB Data Explorer found in the Azure portal, which provides a rich and unified developer experience for inserting, querying, and managing Azure Cosmos DB data.

Microsoft Azure launches tamper-proof Azure Immutable Blob Storage for financial services - Financial institutions can now store and retain data in a non-erasable and non-rewritable format – and at no additional cost. Available in public preview, Azure Immutable Blob Storage meets the relevant storage requirements of several key financial industry regulations. Microsoft retained Cohasset Associates, a leading independent assessment firm that specializes in records management and information governance, to evaluate Azure Immutable Blob Storage and its compliance with requirements specific to the financial services industry; the resulting assessment is available from this post.

Now generally available

Azure Databricks provides the best Apache Spark™-based analytics solution for data scientists and engineers - This post announced the general availability of RStudio integration with Azure Databricks, and four additional regions – UK South, UK West, Australia East, and Australia South East. Azure Databricks is an Apache Spark-based analytics platform optimized for the Microsoft Azure cloud services platform. Designed with the founders of Apache Spark, Databricks is integrated with Azure to provide one-click setup, streamlined workflows, and an interactive workspace that enables collaboration between data scientists, data engineers, and business analysts. Azure Databricks integrates with RStudio Server, the popular integrated development environment (IDE) for R. There’s also a webinar coming up next month (Thursday, August 2) on Making R-Based Analytics Easier and More Scalable.

Azure Search – Announcing the general availability of synonyms - Synonyms in Azure Search let it associate equivalent terms that implicitly expand the scope of a query, without the user having to provide the alternate terms. In Azure Search, synonym support is based on synonym maps that you define and upload to your search service. These maps constitute an independent resource, like indexes or data sources, and can be used by any searchable field in any index in your search service. You can add synonym maps to a service with no disruption to existing operations.

Network Performance Monitor is now generally available in UK South region - Network Performance Monitor (NPM) is a suite of capabilities geared towards monitoring the health of your network and of network connectivity to your applications, and providing insights into the performance of your network. Performance Monitor, ExpressRoute Monitor, and Service Endpoint Monitor are monitoring capabilities within NPM.

Also generally available

News and updates

What’s new in Azure IoT Central - Azure IoT Central is a fully managed SaaS (software-as-a-service) solution that makes it easy to connect, monitor and manage your IoT assets at scale. Azure IoT Central simplifies the initial setup of your IoT solution and reduces the management burden, operational costs, and overhead of a typical IoT project. Since entering public preview in December, we implemented a number of new features, capabilities, and usability improvements, including: state measurement, event monitoring template, bulk device import and export, location and map services, and new account management tools.

Monitor Azure Data Factory pipelines using Operations Management Suite - Azure Data Factory (ADF) integration with Azure Monitor allows you to route your data factory metrics to Operations Management Suite (OMS). You can now monitor the health of your data factory pipelines using the Azure Data Factory Analytics OMS pack from the Azure Marketplace. The pack provides a summary of the overall health of your data factory, including options to drill into details and troubleshoot unexpected behavior patterns.

Spoken Language Identification in Video Indexer - Video Indexer now includes automatic Spoken Language Identification (LID), which is based on advanced Deep Learning modeling and works best for medium- to high-quality recordings. LID currently supports eight languages: English, Chinese, French, German, Italian, Japanese, Spanish, and Russian. This post also briefly describes spectrograms, which turn audio into images so that patterns can be detected.
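The Video Indexer post mentions that spectrograms turn audio into images. The Python sketch below is a rough illustration only and is not Video Indexer's actual pipeline. It computes a magnitude spectrogram with a naive short-time Fourier transform. The signal is cut into overlapping frames, each frame is windowed and transformed, and the resulting rows of frequency magnitudes form the "image." The frame size, hop length, Hann window, and test tone are all assumptions chosen for this example.

```python
import cmath
import math


def spectrogram(samples, frame_size=64, hop=32):
    """Magnitude spectrogram via a naive short-time Fourier transform.

    Returns one row per frame (time axis) and one column per frequency bin
    0..frame_size/2 (frequency axis); each value is that bin's magnitude.
    """
    # Hann window tapers each frame to reduce spectral leakage at the edges.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / (frame_size - 1))
              for n in range(frame_size)]
    rows = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = [samples[start + n] * window[n] for n in range(frame_size)]
        row = []
        for k in range(frame_size // 2 + 1):
            # Direct DFT sum for bin k (O(N^2), fine for a small illustration).
            coeff = sum(frame[n] * cmath.exp(-2j * math.pi * k * n / frame_size)
                        for n in range(frame_size))
            row.append(abs(coeff))
        rows.append(row)
    return rows


# A 1 kHz tone sampled at 8 kHz for 0.1 s (hypothetical test signal).
SAMPLE_RATE = 8000
tone = [math.sin(2 * math.pi * 1000 * t / SAMPLE_RATE) for t in range(800)]
spec = spectrogram(tone)
# Bin spacing is 8000 / 64 = 125 Hz, so the energy should sit in bin 8.
peak_bins = {row.index(max(row)) for row in spec}
print(len(spec), len(spec[0]), peak_bins)  # 24 33 {8}
```

A real spoken-language model would feed many such frames, usually on a mel or log scale, into a neural network. This sketch stops at the image itself.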

Additional news and updates

- Azure Security Center update July 1

- New documentation for Power BI Embedded REST APIs

- Apply themes to Power BI Embedded dashboards

- Azure DevTest Labs: Auto-fill virtual machine username and password

- Azure DevTest Labs: Auto-fill virtual machine name

- Increased reference data size in Azure Stream Analytics

- New features for Azure dashboard tiles: resize and edit query

The Azure Podcast

The Azure Podcast: Episode 236 - Where to run my app? - Barry Luijbregts, a very well-known and respected Azure Developer, author and Pluralsight instructor, talks to us about the universal issue in Azure - which service should you use to run your app? (Thanks for the kind words about Azure.Source, Cale & Sujit!)

Technical content and training

Securing the connection between Power BI and Azure SQL Database - Learn how to connect Power BI to Azure SQL Database more securely using virtual networks (VNets), VNet service endpoints, virtual network rules, on-premises data gateways, and network security groups.

How to use Azure Container Registry for a Multi-container Web App? - Learn how to configure Azure Container Registry with Multi-Container Web App (currently in preview), which lets you deploy multiple Docker images to Web App for Containers using either Docker Compose or Kubernetes config files.

AI Show

AI Show | Building an Intelligent Bot: Conference Buddy - Using the example of the Conference Buddy, Wilson will show you the key ingredients needed to develop an intelligent chatbot with Microsoft AI – one that helps the attendees at a conference interact with speakers in a novel way.

Customers and partners

Agile SAP development with SAP Cloud Platform on Azure - SAP Cloud Platform (SAP CP) is SAP's multi-cloud platform-as-a-service offering and is now available for production use in Azure West Europe. SAP CP offers a fully managed platform-as-a-service, operated by SAP, and is the first multi-tenant and fully managed Cloud Foundry PaaS on Azure. Learn how developers can use either the SAP CP cockpit or Cloud Foundry CLI to deploy and manage their applications and services. In addition, developers can connect to Azure Platform Services using the Meta Azure Service Broker or its future replacement, Open Service Broker for Azure.

Events

Azure Backup hosts Ask Me Anything session - The Azure Backup team will host a special Ask Me Anything session on Twitter on Tuesday, July 10, 2018, from 7:30 AM to 11:30 AM PDT. Tweet your questions to @AzureBackup or @AzureSupport with #AzureBackupAMA.

The IoT Show

IoT Show | Provisioning IoT devices at scale - The Demo - Showing is always better than telling. Nicole Berdy joins Olivier on the IoT Show to demonstrate how the Azure IoT Hub Device Provisioning Service can be used to implement zero-touch provisioning of (a lot of) IoT devices.

Industries

Lessons from big box retail - Learn how retail banks can take a lesson from big box retailers to create curated experiences. Retailers already use Azure Machine Learning to orchestrate experiences using data collected from the various touchpoints they have with their customers. This helps them understand their customers' shopping behaviors and provide engaging in-store experiences. These experiences shape the expectations customers bring to other industries, such as banking.

Azure Friday

Azure Friday | Episode 448 - Azure App Service Web Apps - Byron Tardif joins Scott Hanselman to discuss Azure App Service Web Apps, the best way to build and host web applications in the programming language of your choice without managing infrastructure. It offers auto-scaling and high availability, supports both Windows and Linux, and enables automated deployments from GitHub, Visual Studio Team Services, or any Git repo.

Azure Friday | Episode 449 - Unified alerts in Azure Monitor - Kiran Madnani chats with Scott Hanselman about Azure Monitor, where you can set up alerts to monitor the metrics and log data for the entire stack across your infrastructure, application, and Azure platform. Learn about the next generation of alerts in Azure, which provides a new consolidated alerts experience in Azure Monitor. The episode also covers a new metrics-based alerts platform that is faster and is leveraged by other Azure services.

Developer spotlight

Free e-book | Serverless apps: Architecture, patterns, and Azure implementation - This guide by Jeremy Likness focuses on cloud native development of applications that use serverless. The book highlights the benefits and exposes the potential drawbacks of developing serverless apps and provides a survey of serverless architectures. Many examples of how serverless can be used are illustrated along with various serverless design patterns. This guide explains the components of the Azure serverless platform and focuses specifically on implementation of serverless using Azure Functions. You'll learn about triggers and bindings as well as how to implement serverless apps that rely on state using durable functions. Finally, business examples and case studies will help provide context and a frame of reference to determine whether serverless is the right approach for your projects.

A Cloud Guru: Azure This Week | 6 July 2018

A Cloud Guru | Azure This Week - 6 July 2018 - In this episode of Azure This Week, James takes a look at the public preview of static website hosting for Azure Storage and zone-redundant VPN and ExpressRoute gateways, as well as the general availability of Azure IoT Edge.

Power BI Embedded dashboards with Azure Stream Analytics

Azure Stream Analytics is a fully managed “serverless” PaaS service in Azure built for running real-time analytics on fast moving streams of data. Today, a significant portion of Stream Analytics customers use Power BI for real-time dynamic dashboarding. Support for Power BI Embedded has been a repeated ask from many of our customers, and today we are excited to share that it is now generally available.

What is Power BI Embedded?

Power BI Embedded simplifies how ISVs and developers can quickly add stunning visuals, reports, and dashboards to their apps. By enabling easy-to-navigate data exploration in their apps, ISVs help their customers make quick, informed decisions in context. This also enables faster time to market and competitive differentiation for all parties.

Additionally, Power BI Embedded lets developers work within familiar development environments such as Visual Studio and Azure.

Using Azure Stream Analytics with Power BI Embedded

Using Power BI with Azure Stream Analytics allows users of Power BI Embedded dashboards to easily visualize insights from streaming data within the context of the apps they use every day. With Power BI Embedded, users can also embed real-time dashboards right in their organization's web apps.

No changes are required for your existing Stream Analytics jobs to work with Power BI Embedded. For more information, please see our tutorial that provides detailed directions on how you can build and embed dynamic streaming dashboards with Azure Stream Analytics and Power BI.

Keep the feedback and ideas coming

The Azure Stream Analytics team is highly committed to listening to your feedback. We welcome you to join the conversation and make your voice heard via our UserVoice.

Announcing ML.NET 0.3

Two months ago, at //Build 2018, we released ML.NET 0.1, a cross-platform, open source machine learning framework for .NET developers. We’ve gotten great feedback so far and would like to thank the community for your engagement as we continue to develop ML.NET together in the open.

We are happy to announce the latest version: ML.NET 0.3. This release supports exporting models to the ONNX format, enables creating new types of models with Factorization Machines, LightGBM, Ensembles, and LightLDA, and addresses a variety of issues and feedback we received from the community.

The main highlights of ML.NET 0.3 release are explained below.

For your convenience, here’s a short list of links pointing to the internal sections in this blog post:

- Export of ML.NET models to the ONNX-ML format

- Added LightGBM as a learner for binary classification, multiclass classification, and regression

- Added Field-Aware Factorization Machines (FFM) as a learner for binary classification

- Added Ensemble learners enabling multiple learners in one model

- Added LightLDA transform for topic modeling

- Added One-Versus-All (OVA) learner for multiclass classification

Export of ML.NET models to the ONNX-ML format

ONNX is an open and interoperable standard format for representing deep learning and machine learning models which enables developers to save trained models (from any framework) to the ONNX format and run them in a variety of target platforms.

ONNX is developed and supported by a community of partners such as Microsoft, Facebook, AWS, Nvidia, Intel, AMD, and many others.

With this release of ML.NET v0.3 you can export certain ML.NET models to the ONNX-ML format.

ONNX models can be used to infuse machine learning capabilities into platforms like Windows ML, which evaluates ONNX models natively on Windows 10 devices and takes advantage of hardware acceleration, as illustrated in the following image:

The following code snippet shows how you can convert and export an ML.NET model to an ONNX-ML model file:

Here’s the original C# sample code

Added LightGBM as a learner for binary classification, multiclass classification, and regression

This addition wraps LightGBM and exposes it in ML.NET.

LightGBM is a framework that helps you classify something as ‘A’ or ‘B’ (binary classification), classify something across multiple categories (multiclass classification), or predict a value based on historical data (regression).

Note that LightGBM can also be used for ranking (predicting the relevance of objects, such as determining which objects have a higher priority than others), but the ranking evaluator is not yet exposed in ML.NET.

The definition for LightGBM in ‘Machine Learning lingo’ is: A high-performance gradient boosting framework based on decision tree algorithms.
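The Python sketch below makes "gradient boosting based on decision trees" concrete. It is deliberately tiny: least-squares gradient boosting on a single numeric feature, using one-split trees ("stumps"). It is not LightGBM. LightGBM adds many optimizations, such as histogram-based split finding and leaf-wise tree growth, and supports many loss functions. The function names, number of rounds, and learning rate here are assumptions chosen for illustration.

```python
def fit_stump(xs, residuals):
    """Fit a one-split regression tree (a "stump") to the residuals by least squares."""
    best = None
    thresholds = sorted(set(xs))[:-1]  # every split must leave points on both sides
    for threshold in thresholds:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((r - left_mean) ** 2 for r in left)
                 + sum((r - right_mean) ** 2 for r in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    if best is None:  # all x values identical: no split possible, predict the mean
        mean = sum(residuals) / len(residuals)
        return lambda x: mean
    _, threshold, left_value, right_value = best
    return lambda x: left_value if x <= threshold else right_value


def gradient_boost(xs, ys, n_rounds=50, learning_rate=0.1):
    """Least-squares gradient boosting on a single numeric feature."""
    base = sum(ys) / len(ys)  # start from the constant that minimizes squared error
    stumps = []

    def predict(x):
        # Ensemble prediction: base value plus the shrunken sum of all stumps so far.
        return base + learning_rate * sum(stump(x) for stump in stumps)

    for _ in range(n_rounds):
        # For squared error, the negative gradient is just the residual y - prediction.
        residuals = [y - predict(x) for x, y in zip(xs, ys)]
        stumps.append(fit_stump(xs, residuals))
    return predict


xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1, 1, 1, 1, 5, 5, 5, 5]
model = gradient_boost(xs, ys)
print(round(model(2), 2), round(model(7), 2))  # 1.01 4.99
```

Each round fits a small tree to what the ensemble still gets wrong. The learning rate shrinks each tree's contribution, so the model approaches the targets gradually rather than in one step.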

LightGBM is part of Microsoft's DMTK (Distributed Machine Learning Toolkit) project.

The LightGBM repository includes comparison experiments showing good accuracy and speed, so it is a great learner to try out. It has also been used in winning solutions to various ML challenges.

See an example of using the LightGBM learner in ML.NET here.

Added Field-Aware Factorization Machines (FFM) as a learner for binary classification

FFM is another learner for binary classification ("Is this A or B?"). It is especially effective when training on very large, sparse datasets. Because it is a streaming learner, it does not require the entire dataset to fit in memory.

FFM has been used to win various click prediction competitions such as the Criteo Display Advertising Challenge on Kaggle. You can learn more about the winning solution here.

Click-through rate (CTR) prediction is critical in advertising. Models based on Field-aware Factorization Machines (FFMs) outperform previous approaches such as Factorization Machines (FMs) in many CTR-prediction tasks. In addition to click prediction, FFMs are also useful in areas such as recommendations.
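The difference between FFMs and plain factorization machines is easiest to see in the model's scoring function. In an FM, every feature has one latent vector. In an FFM, every feature has one latent vector per field, and for each pair of features the model uses the vector that matches the other feature's field. The Python sketch below computes that score for a single sparse example. The field names, feature IDs, and vector values are hypothetical. Training, which ML.NET's learner performs in a streaming fashion, is omitted.

```python
import math


def ffm_score(features, latent, bias=0.0):
    """Field-aware factorization machine score for one sparse example.

    features: list of (field, feature_id, value) for the non-zero entries only.
    latent:   dict mapping (feature_id, other_field) -> latent vector, i.e. each
              feature keeps a separate vector for every field it may pair with.
    """
    score = bias
    for i in range(len(features)):
        field_i, feat_i, val_i = features[i]
        for j in range(i + 1, len(features)):
            field_j, feat_j, val_j = features[j]
            # Field awareness: feature i uses its vector for j's field, and vice versa.
            # A plain FM would use a single vector per feature instead.
            w_i = latent[(feat_i, field_j)]
            w_j = latent[(feat_j, field_i)]
            score += sum(a * b for a, b in zip(w_i, w_j)) * val_i * val_j
    return score


def ffm_click_probability(features, latent, bias=0.0):
    """Squash the score into a probability with the logistic function."""
    return 1.0 / (1.0 + math.exp(-ffm_score(features, latent, bias)))


# Hypothetical ad impression with three fields and made-up 2-dimensional vectors.
impression = [("publisher", "ESPN", 1.0), ("advertiser", "Nike", 1.0), ("gender", "Male", 1.0)]
vectors = {
    ("ESPN", "advertiser"): [0.5, 0.1], ("ESPN", "gender"): [0.2, 0.0],
    ("Nike", "publisher"): [0.4, 0.3], ("Nike", "gender"): [0.1, 0.2],
    ("Male", "publisher"): [0.3, 0.0], ("Male", "advertiser"): [0.0, 0.5],
}
print(ffm_score(impression, vectors))  # about 0.39 (0.23 + 0.06 + 0.10)
print(ffm_click_probability(impression, vectors))
```

Only the non-zero features of an example are visited, so the scoring cost depends on how many features are active rather than on the total number of possible features. This helps explain why FFMs suit very large, sparse datasets such as ad-click logs.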

Here’s how you add an FFM learner to the pipeline.

For additional sample code using FFM in ML.NET, check here and here.

Added Ensemble learners enabling multiple learners in one model