Top Stories from the Microsoft DevOps Community – 2018.08.24

Because it’s Friday: One Million Integers

This is a visualization of the numbers from 1 to 1,000,000, added in order in blocks of 1,000 and positioned in the 2-D plane according to their prime factors.

The animation was created by John Williamson. The mathematical process begins by representing each integer as a vector of length 80,000: the first value is the number of times "2" is used in its prime factorization, the next for "3", and so on through the primes. Together, those vectors represent one million points in an 80,000-dimensional space, which is then reduced to two dimensions using a dimensionality-reduction algorithm. The interesting structure that results is partly an artifact of the algorithm, but also a result of the fractal nature of the primes on the number line.
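To make the first step concrete, here is a minimal Python sketch that builds the prime-exponent vector for an integer. It is our own illustration, not Williamson's code: it assumes simple trial division and a short list of primes, and it leaves out the dimension-reduction step entirely.

```python
from collections import Counter


def primes_up_to(limit: int) -> list[int]:
    """Return all primes <= limit using a Sieve of Eratosthenes."""
    if limit < 2:
        return []
    is_prime = [True] * (limit + 1)
    is_prime[0] = is_prime[1] = False
    for i in range(2, int(limit ** 0.5) + 1):
        if is_prime[i]:
            # Every multiple of a prime from i*i upward is composite
            is_prime[i * i :: i] = [False] * len(range(i * i, limit + 1, i))
    return [i for i, flag in enumerate(is_prime) if flag]


def prime_exponents(n: int) -> Counter:
    """Map each prime factor of n (n >= 1) to its exponent, via trial division."""
    exponents = Counter()
    p = 2
    while p * p <= n:
        while n % p == 0:
            exponents[p] += 1
            n //= p
        p += 1
    if n > 1:  # whatever is left over is itself a prime factor
        exponents[n] += 1
    return exponents


def exponent_vector(n: int, primes: list[int]) -> list[int]:
    """Entry i is how many times primes[i] appears in the factorization of n."""
    exps = prime_exponents(n)
    return [exps[p] for p in primes]  # a Counter returns 0 for missing primes


primes = primes_up_to(20)           # [2, 3, 5, 7, 11, 13, 17, 19]
print(exponent_vector(12, primes))  # 12 = 2^2 * 3 -> [2, 1, 0, 0, 0, 0, 0, 0]
```

There are 78,498 primes below one million, which is where the roughly 80,000 dimensions come from. Stacking one such vector per integer gives the point cloud that the dimension-reduction step then flattens to 2-D.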

That's all from the blog for this week. Have a great weekend, and we'll be back next week. See you then!

Decoding an SSH Key from PEM to BASE64 to HEX to ASN.1 to prime decimal numbers

I'm reading a new chapter of The Imposter's Handbook: Season 2 that Rob and I are working on. He's digging into the internals of what's exactly in your SSH Key.

I generated a key with no password:

ssh-keygen -t rsa -C scott@myemail.com

Inside the generated file is this text, which we've all seen before but few of us have cracked open.

-----BEGIN RSA PRIVATE KEY-----

MIIEpAIBAAKCAQEAtd8As85sOUjjkjV12ujMIZmhyegXkcmGaTWk319vQB3+cpIh

Wu0mBke8R28jRym9kLQj2RjaO1AdSxsLy4hR2HynY7l6BSbIUrAam/aC/eVzJmg7

qjVijPKRTj7bdG5dYNZYSEiL98t/+XVxoJcXXOEY83c5WcCnyoFv58MG4TGeHi/0

coXKpdGlAqtQUqbp2sG7WCrXIGJJdBvUDIQDQQ0Isn6MK4nKBA10ucJmV+ok7DEP

kyGk03KgAx+Vien9ELvo7P0AN75Nm1W9FiP6gfoNvUXDApKF7du1FTn4r3peLzzj

50y5GcifWYfoRYi7OPhxI4cFYOWleFm1pIS4PwIDAQABAoIBAQCBleuCMkqaZnz/

6GeZGtaX+kd0/ZINpnHG9RoMrosuPDDYoZZymxbE0sgsfdu9ENipCjGgtjyIloTI

xvSYiQEIJ4l9XOK8WO3TPPc4uWSMU7jAXPRmSrN1ikBOaCslwp12KkOs/UP9w1nj

/PKBYiabXyfQEdsjQEpN1/xMPoHgYa5bWHm5tw7aFn6bnUSm1ZPzMquvZEkdXoZx

c5h5P20BvcVz+OJkCLH3SRR6AF7TZYmBEsBB0XvVysOkrIvdudccVqUDrpjzUBc3

L8ktW3FzE+teP7vxi6x/nFuFh6kiCDyoLBhRlBJI/c/PzgTYwWhD/RRxkLuevzH7

TU8JFQ9BAoGBAOIrQKwiAHNw4wnmiinGTu8IW2k32LgI900oYu3ty8jLGL6q1IhE

qjVMjlbJhae58mAMx1Qr8IuHTPSmwedNjPCaVyvjs5QbrZyCVVjx2BAT+wd8pl10

NBXSFQTMbg6rVggKI3tHSE1NSdO8kLjITUiAAjxnnJwIEgPK+ljgmGETAoGBAM3c

ANd/1unn7oOtlfGAUHb642kNgXxH7U+gW3hytWMcYXTeqnZ56a3kNxTMjdVyThlO

qGXmBR845q5j3VlFJc4EubpkXEGDTTPBSmv21YyU0zf5xlSp6fYe+Ru5+hqlRO4n

rsluyMvztDXOiYO/VgVEUEnLGydBb1LwLB+MVR2lAoGAdH7s7/0PmGbUOzxJfF0O

OWdnllnSwnCz2UVtN7rd1c5vL37UvGAKACwvwRpKQuuvobPTVFLRszz88aOXiynR

5/jH3+6IiEh9c3lattbTgOyZx/B3zPlW/spYU0FtixbL2JZIUm6UGmUuGucs8FEU

Jbzx6eVAsMojZVq++tqtAosCgYB0KWHcOIoYQUTozuneda5yBQ6P+AwKCjhSB0W2

SNwryhcAMKl140NGWZHvTaH3QOHrC+SgY1Sekqgw3a9IsWkswKPhFsKsQSAuRTLu

i0Fja5NocaxFl/+qXz3oNGB56qpjzManabkqxSD6f8o/KpeqryqzCUYQN69O2LG9

N53L9QKBgQCZd0K6RFhhdJW+Eh7/aIk8m8Cho4Im5vFOFrn99e4HKYF5BJnoQp4p

1QTLMs2C3hQXdJ49LTLp0xr77zPxNWUpoN4XBwqDWL0t0MYkRZFoCAG7Jy2Pgegv

uOuIr6NHfdgGBgOTeucG+mPtADsLYurEQuUlfkl5hR7LgwF+3q8bHQ==

-----END RSA PRIVATE KEY-----

The private key is an ASN.1 (Abstract Syntax Notation One) data structure, serialized with the binary DER encoding. It's a funky format, but it's basically a packed binary format that supports nested trees of values such as booleans, integers, and so on.

However, ASN.1 is just the binary packed "payload." It's not the "container." Think of envelopes and the letters inside them: the envelope is the PEM (Privacy Enhanced Mail) format. PEM files start with -----BEGIN SOMETHING----- and end with -----END SOMETHING-----. If you're familiar with BASE64, your spidey sense may tell you that the text between those lines is BASE64 encoded. Not everything that's BASE64 turns into a friendly ASCII string, though. This one turns into a bunch of bytes you can view in HEX.

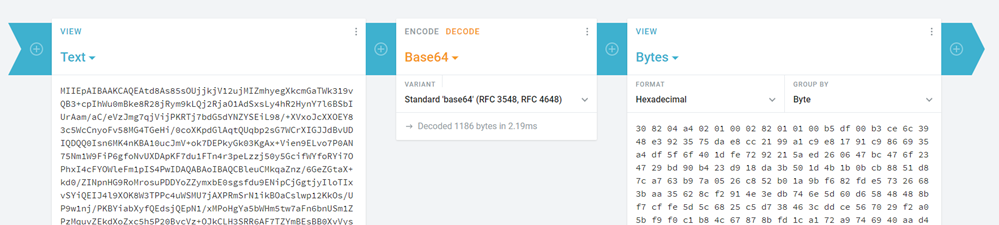

First, we can decode the BASE64 body of the PEM file into HEX. Yes, I know there are lots of ways to do this stuff at the command line, but I like showing and teaching with some of the many encoding/decoding websites and utilities out there. I also love using https://cryptii.com/ for these things, as you can build a visual pipeline.

308204A40201000282010100B5DF00B3CE6C3948E3923575DAE8

CC2199A1C9E81791C9866935A4DF5F6F401DFE7292215AED2606

47BC476F234729BD90B423D918DA3B501D4B1B0BCB8851D87CA7

63B97A0526C852B01A9BF682FDE57326683BAA35628CF2914E3E

DB746E5D60D65848488BF7CB7FF97571A097175CE118F3773959

C0A7CA816FE7C306E1319E1E2FF47285CAA5D1A502AB5052A6E9

DAC1BB582AD7206249741BD40C8403410D08B27E8C2B89CA040D

74B9C26657EA24EC310F9321A4D372A0031F9589E9FD10BBE8EC

FD0037BE4D9B55BD1623FA81FA0DBD45C3029285EDDBB51539F8

AF7A5E2F3CE3E74CB919C89F5987E84588BB38F87123870560E5

snip
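For anyone who would rather script this PEM-to-HEX step, here is a minimal sketch using only Python's standard `base64` module. `pem_to_der` is our own helper name, and the sample envelope holds just the first BASE64 line of the key above, so it decodes only the first 48 bytes of the structure.

```python
import base64


def pem_to_der(pem_text: str) -> bytes:
    """Drop the -----BEGIN/END ...----- armor lines and BASE64-decode the body."""
    body = [
        stripped
        for stripped in (line.strip() for line in pem_text.splitlines())
        if stripped and not stripped.startswith("-----")
    ]
    return base64.b64decode("".join(body))


# Only the first BASE64 line of the key above, in its own (truncated) envelope
sample_pem = """-----BEGIN RSA PRIVATE KEY-----
MIIEpAIBAAKCAQEAtd8As85sOUjjkjV12ujMIZmhyegXkcmGaTWk319vQB3+cpIh
-----END RSA PRIVATE KEY-----"""

der = pem_to_der(sample_pem)
print(der.hex().upper()[:24])  # first 12 bytes, matching the start of the HEX dump above
```

The output begins 308204A40201000282010100, the same bytes that open the HEX dump.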

This ASN.1 JavaScript decoder can take the HEX and parse it for you. Or you can parse that ASN.1 packed format at the *nix command line with OpenSSL and see that there are nine integers inside (I trimmed the long ones for this blog).

openssl asn1parse -in notreal

0:d=0 hl=4 l=1188 cons: SEQUENCE

4:d=1 hl=2 l= 1 prim: INTEGER :00

7:d=1 hl=4 l= 257 prim: INTEGER :B5DF00B3CE6C3948E3923575DAE8CC2199A1C9E81791C9866935A4DF5F6F401DFE7292215

268:d=1 hl=2 l= 3 prim: INTEGER :010001

273:d=1 hl=4 l= 257 prim: INTEGER :8195EB82324A9A667CFFE867991AD697FA4774FD920DA671C6F51A0CAE8B2E3C30D8A1967

534:d=1 hl=3 l= 129 prim: INTEGER :E22B40AC22007370E309E68A29C64EEF085B6937D8B808F74D2862EDEDCBC8CB18BEAAD48

666:d=1 hl=3 l= 129 prim: INTEGER :CDDC00D77FD6E9E7EE83AD95F1805076FAE3690D817C47ED4FA05B7872B5631C6174DEAA7

798:d=1 hl=3 l= 128 prim: INTEGER :747EECEFFD0F9866D43B3C497C5D0E3967679659D2C270B3D9456D37BADDD5CE6F2F7ED4B

929:d=1 hl=3 l= 128 prim: INTEGER :742961DC388A184144E8CEE9DE75AE72050E8FF80C0A0A38520745B648DC2BCA170030A97

1060:d=1 hl=3 l= 129 prim: INTEGER :997742BA4458617495BE121EFF68893C9BC0A1A38226E6F14E16B9FDF5EE072981790499E
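Each line of that output is one DER element: a tag byte, a length, then the value. `hl` is the header length (tag plus length bytes) and `l` is the content length. At offset 0 the bytes 30 82 04 A4 mean tag 0x30 (SEQUENCE), a long-form length stored in the next 2 bytes, and 0x04A4 = 1188 bytes of content, hence `hl=4 l=1188`. The modulus shows `l=257` because DER integers are two's complement, so a 256-byte value whose top bit is set gets a leading 0x00. Here is a minimal Python sketch of that walk, under our own assumptions: the function names are ours, and it only handles a flat SEQUENCE of INTEGERs with single-byte tags. For real keys, use a proper ASN.1 or crypto library.

```python
def read_tlv(data: bytes, offset: int) -> tuple[int, int, int, int]:
    """Return (tag, header_length, content_length, content_offset) at offset."""
    tag = data[offset]
    first = data[offset + 1]
    if first < 0x80:  # short form: the length fits in 7 bits
        return tag, 2, first, offset + 2
    num_bytes = first & 0x7F  # long form: the next num_bytes hold the length
    length = int.from_bytes(data[offset + 2 : offset + 2 + num_bytes], "big")
    header = 2 + num_bytes
    return tag, header, length, offset + header


def parse_rsa_private_key(der: bytes) -> list[int]:
    """Parse a DER SEQUENCE of INTEGERs (the RSAPrivateKey layout) into ints."""
    tag, _, seq_len, pos = read_tlv(der, 0)
    if tag != 0x30:
        raise ValueError("expected a SEQUENCE (tag 0x30)")
    end = pos + seq_len
    values = []
    while pos < end:
        tag, _, length, start = read_tlv(der, pos)
        if tag != 0x02:
            raise ValueError(f"expected an INTEGER (tag 0x02) at offset {pos}")
        # Big-endian two's complement; the 0x00 pad keeps positive values positive
        values.append(int.from_bytes(der[start : start + length], "big", signed=True))
        pos = start + length
    return values


# A tiny hand-built example: SEQUENCE { INTEGER 0, INTEGER 65537 }
print(parse_rsa_private_key(bytes.fromhex("30080201000203010001")))  # [0, 65537]
```

Given the complete decoded key bytes, this walk should yield the nine integers listed above, in the same order.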

Per the PKCS #1 spec, the format is this:

An RSA private key shall have ASN.1 type RSAPrivateKey:

RSAPrivateKey ::= SEQUENCE {

version Version,

modulus INTEGER, -- n

publicExponent INTEGER, -- e

privateExponent INTEGER, -- d

prime1 INTEGER, -- p

prime2 INTEGER, -- q

exponent1 INTEGER, -- d mod (p-1)

exponent2 INTEGER, -- d mod (q-1)

coefficient INTEGER -- (inverse of q) mod p

}

I found the description of how RSA works in this blog post very helpful, as it uses small numbers as examples. The variable names here, like p, q, and n, are agreed upon and standard.

The fields of type RSAPrivateKey have the following meanings:

o version is the version number, for compatibility with future revisions of this document. It shall be 0 for this version of the document.

o modulus is the modulus n.

o publicExponent is the public exponent e.

o privateExponent is the private exponent d.

o prime1 is the prime factor p of n.

o prime2 is the prime factor q of n.

o exponent1 is d mod (p-1).

o exponent2 is d mod (q-1).

o coefficient is the Chinese Remainder Theorem coefficient q^(-1) mod p.
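To see how all nine fields fit together, here is a toy RSA key in Python built from the well-known textbook values p = 61, q = 53, e = 17. It's a sketch for illustration only: real keys use primes of roughly 1,024 bits, and we derive d from Euler's totient, whereas many implementations use the Carmichael function and get a different but equally valid d. The CRT decryption follows the recombination step described in PKCS #1, which is why exponent1, exponent2, and coefficient are stored at all.

```python
# Toy RSA with tiny primes, for illustration only
p, q = 61, 53
n = p * q                    # modulus
phi = (p - 1) * (q - 1)      # Euler's totient of n
e = 17                       # public exponent, coprime with phi
d = pow(e, -1, phi)          # private exponent: e*d = 1 (mod phi); Python 3.8+

# The three extra CRT fields stored in RSAPrivateKey
exponent1 = d % (p - 1)      # d mod (p-1)
exponent2 = d % (q - 1)      # d mod (q-1)
coefficient = pow(q, -1, p)  # (inverse of q) mod p


def encrypt(m: int) -> int:
    return pow(m, e, n)


def decrypt_plain(c: int) -> int:
    return pow(c, d, n)


def decrypt_crt(c: int) -> int:
    """Decrypt with two half-size exponentiations, then recombine via the CRT."""
    m1 = pow(c, exponent1, p)
    m2 = pow(c, exponent2, q)
    h = (coefficient * (m1 - m2)) % p
    return m2 + h * q


c = encrypt(65)
print(n, d, exponent1, exponent2, coefficient)  # 3233 2753 53 49 38
print(c, decrypt_plain(c), decrypt_crt(c))      # 2790 65 65
```

The CRT path gives the same answer while working modulo p and q separately, which is much faster for real key sizes.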

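Let's look at one of those numbers. The value below is the integer at offset 798 in the asn1parse output, which by the spec's field order is exponent1 (d mod (p-1)); the prime p itself is the integer at offset 534. It's super long in hexadecimal.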

747EECEFFD0F9866D43B3C497C5D0E3967679659D2C270B3D945

6D37BADDD5CE6F2F7ED4BC600A002C2FC11A4A42EBAFA1B3D354

52D1B33CFCF1A3978B29D1E7F8C7DFEE8888487D73795AB6D6D3

80EC99C7F077CCF956FECA5853416D8B16CBD89648526E941A65

2E1AE72CF0511425BCF1E9E540B0CA23655ABEFADAAD028B

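Converted to decimal, that 128-byte (1,024-bit) hexadecimal value is a 308-digit number. The long-ass number below is bigger still: it's 617 digits long, and its leading digits line up with the 2048-bit modulus n (B5DF... in hex), so it appears to be n rather than the value above.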

22959099950256034890559187556292927784453557983859951626187028542267181746291385208056952622270636003785108992159340113537813968453561739504619062411001131648757071588488220532539782545200321908111599592636973146194058056564924259042296638315976224316360033845852318938823607436658351875086984433074463158236223344828240703648004620467488645622229309082546037826549150096614213390716798147672946512459617730148266423496997160777227482475009932013242738610000405747911162773880928277363924192388244705316312909258695267385559719781821111399096063487484121831441128099512811105145553708218511125708027791532622990325823
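If you want to check digit counts like these yourself, Python's arbitrary-precision integers make the hex-to-decimal conversion a one-liner. This sketch uses the hex value shown above (offset 798 in the asn1parse output), joined back into a single string.

```python
# The hex value shown above, joined into one string
hex_value = (
    "747EECEFFD0F9866D43B3C497C5D0E3967679659D2C270B3D945"
    "6D37BADDD5CE6F2F7ED4BC600A002C2FC11A4A42EBAFA1B3D354"
    "52D1B33CFCF1A3978B29D1E7F8C7DFEE8888487D73795AB6D6D3"
    "80EC99C7F077CCF956FECA5853416D8B16CBD89648526E941A65"
    "2E1AE72CF0511425BCF1E9E540B0CA23655ABEFADAAD028B"
)

value = int(hex_value, 16)  # parse base-16 text into a Python big integer
decimal_text = str(value)   # base-10 representation

print(len(hex_value) // 2, "bytes")     # 128 bytes
print(value.bit_length(), "bits")       # 1023: the top byte 0x74 leaves the high bit unset
print(len(decimal_text), "decimal digits")  # 308
```

Running the same three lines on the modulus hex would report 2,048 bits and 617 digits.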

Checking whether a number this size is (probably) prime is actually quick; the brutally hard part is factoring a modulus like n back into p and q. There's a great Integer Factorization Calculator that uses WebAssembly and your own local CPU to try such things. Expect to wait a long time, sometimes until the heat death of the universe. ;)
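To show why primality checking is the easy half, here is a sketch of the Miller-Rabin test, a standard probabilistic primality test. It is not necessarily what the Integer Factorization Calculator does internally, and it gives strong probabilistic evidence rather than a formal proof. Even so, it answers in a fraction of a second for 300-digit numbers, while factoring a 2048-bit n is out of reach.

```python
import random


def is_probable_prime(n: int, rounds: int = 20) -> bool:
    """Miller-Rabin test: False means composite; True means prime with
    error probability at most 4**-rounds."""
    if n < 2:
        return False
    for sp in (2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37):
        if n % sp == 0:
            return n == sp
    # Write n - 1 as d * 2**s with d odd
    d, s = n - 1, 0
    while d % 2 == 0:
        d //= 2
        s += 1
    for _ in range(rounds):
        a = random.randrange(2, n - 1)
        x = pow(a, d, n)
        if x in (1, n - 1):
            continue
        for _ in range(s - 1):
            x = pow(x, 2, n)
            if x == n - 1:
                break
        else:
            return False  # a is a witness: n is definitely composite
    return True


print(is_probable_prime(2**127 - 1))  # a known Mersenne prime
print(is_probable_prime(61 * 53))     # composite
```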

Rob and I are finding it really cool to dig just below the surface of common things we look at all the time. I have often opened a key file in a text editor but never drawn a straight and complete line through decoding, unpacking, and decoding again, all the way to a mathematical formula. I feel like I'm filling some major gaps in my knowledge!


© 2018 Scott Hanselman. All rights reserved.

Accelerating Artificial Intelligence (AI) in healthcare using Microsoft Azure blueprints

Artificial Intelligence holds major potential for healthcare, from predicting patient length of stay to diagnostic imaging, anti-fraud, and many more use cases. To be successful in using AI, healthcare needs solutions, not projects. Learn how you can close the gap to your AI in healthcare solution by accelerating your initiative using Microsoft Azure blueprints.

To rapidly acquire new capabilities and implement new solutions, healthcare IT and developers can now take advantage of industry-specific Azure Blueprints. Blueprints include resources such as example code, test data, security, and compliance support. These are packages that include reference architectures, guidance, how-to guides and other documentation, and may also include executable code and sample test data built around a key use case of interest to healthcare organizations. Blueprints also contain components to support privacy, security, and compliance initiatives, including threat models, security controls, responsibility matrices, and compliance audit reports.

Using these blueprints, your healthcare IT team can quickly implement a new type of solution in your secure Azure cloud. With a blueprint, you get 50 percent to 90 percent of the end solution, so you can devote your efforts and resources to customizing it.

You can learn more by watching the Accelerating Artificial Intelligence (AI) in Healthcare Using Microsoft Azure Blueprints Webcast. This session is intended for healthcare provider, payer, pharmaceutical, and life sciences organizations. Key roles include senior technical decision makers, IT managers, cloud architects, and developers.

Key take-aways:

- Opportunities for AI in healthcare

- Key decision points for AI in healthcare solutions including architecture, cost, resources, process

- AI in healthcare blueprints available for Microsoft Azure, and how to get started

- Opportunities to work with Microsoft partners to accelerate your AI in healthcare initiative using the blueprint

Register or watch Accelerating Artificial Intelligence (AI) in Healthcare Using Microsoft Azure Blueprints -- Part 1: Getting Started (AZR311PAL) on demand.

Monitoring environmental conditions near underwater datacenters using Deep Learning

This blog post is co-authored by Xiaoyong Zhu, Nile Wilson (Microsoft AI CTO Office), Ben Cutler (Project Natick), and Lucas Joppa (Microsoft Chief Environmental Officer).

At Microsoft, we put our cloud and artificial intelligence (AI) tools in the hands of those working to solve global environmental challenges, through programs such as AI for Earth. We also use these same tools to understand our own interaction with the environment, such as the work being done in concert with Project Natick.

Project Natick seeks to understand the benefits and difficulties in deploying subsea datacenters worldwide; it is the world's first deployed underwater datacenter and it was designed with an emphasis on sustainability. Phase 2 extends the research accomplished in Phase 1 by deploying a full-scale datacenter module in the North Sea, powered by renewable energy. Project Natick uses AI to monitor the servers and other equipment for signs of failure and to identify any correlations between the environment and server longevity.

Because Project Natick operates like a standard land datacenter, the computers inside can be used for machine learning to provide AI to other applications, just as in any other Microsoft datacenter. We are also using AI to monitor the surrounding aquatic environment, as a first step to understanding what impact, if any, the datacenter may have.

Figure 1. Project Natick datacenter pre-submergence

Monitoring marine life using object detection

The Project Natick datacenter is equipped with various sensors to monitor server conditions and the environment, including two underwater cameras, which are available as live video streams (check out the livestream on the Project Natick homepage). These cameras allow us to monitor the surrounding environment from two fixed locations outside the datacenter in real time.

Figure 2. Live camera feed from Project Natick datacenter (Source: Project Natick homepage)

We want to count the marine life seen by the cameras. Manually counting the marine life in each frame in the video stream requires a significant amount of effort. To solve this, we can leverage object detection to automate the monitoring and counting of marine life.

Figure 3. From the live camera feed, we observe a variety of aquatic life, including (left) a ray, (middle) a fish, and (right) an arrow worm

In each frame, we count the number of marine creatures. We model this as an object detection problem. Object detection combines the task of classification with localization, and outputs both a category and a set of coordinates representing the bounding box for each object detected in the image. This is illustrated in Figure 4.

Figure 4. Object detection tasks in computer vision. Left: input image; right: object detection with bounding boxes
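Once a detector returns a label, a confidence score, and a box for each object, the per-frame count is a simple filter-and-tally. This sketch assumes a hypothetical `Detection` record and a 0.5 score threshold of our own choosing; the normalized (ymin, xmin, ymax, xmax) box layout mirrors a convention common in the TensorFlow Object Detection API, but nothing here is taken from the project's code.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Detection:
    """One detected object (hypothetical record): label, confidence, and box.

    The box uses normalized (ymin, xmin, ymax, xmax) coordinates in [0, 1].
    """
    label: str
    score: float
    box: tuple[float, float, float, float]


def count_per_class(detections: list[Detection], min_score: float = 0.5) -> Counter:
    """Count detections per label, ignoring low-confidence boxes."""
    return Counter(d.label for d in detections if d.score >= min_score)


frame = [
    Detection("fish", 0.91, (0.10, 0.20, 0.30, 0.40)),
    Detection("fish", 0.62, (0.50, 0.55, 0.70, 0.80)),
    Detection("arrow_worm", 0.77, (0.05, 0.60, 0.12, 0.95)),
    Detection("fish", 0.20, (0.80, 0.10, 0.90, 0.25)),  # below threshold, dropped
]
print(count_per_class(frame))  # Counter({'fish': 2, 'arrow_worm': 1})
```

The threshold trades missed animals against false positives, so in practice it would be tuned on labelled frames.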

How to choose an object detection model

Over the past few years, many exciting deep learning approaches for object detection have emerged. Two-stage models such as Faster R-CNN first propose regions that likely contain an object, then classify each proposed region and refine its bounding box. One-stage models such as You Only Look Once (YOLO), Single Shot MultiBox Detector (SSD), and RetinaNet (trained with focal loss) skip the region proposal stage and instead consider a fixed set of boxes, which usually makes them faster than two-stage detectors.

To achieve real-time object detection for videos, we need to balance speed and accuracy. From the video stream, we observed that only a few types of aquatic life come within view: fish, arrow worms, and rays. This small number of animal categories allows us to choose a relatively lightweight object detection model that can run on CPUs.

By comparing the speed and accuracy of different deep learning model architectures, we chose to use SSD with MobileNet as our network architecture.

Figure 5. Object detection approaches (tradeoffs between accuracy and inference time). Marker shapes indicate meta-architecture and colors indicate feature extractor. Each (meta-architecture, feature extractor) pair corresponds to multiple points on this plot due to changing input sizes, stride, etc. (source)

An AI Oriented Architecture for environmental monitoring

AI Oriented Architecture (AOA) is a blueprint for enterprises to use AI to accelerate digital transformation. An AOA enables organizations to map a business solution to the set of AI tools, services and infrastructure that will enable them to realize the business solution. In this example, we use an AI Oriented Architecture to design a deep learning solution for environmental monitoring.

Figure 6 shows an AI Oriented Architecture for monitoring marine life near the underwater datacenter. We first save the streamed video file from Azure Media Service using OpenCV, then label the video frames using VoTT and put the labelled data in Azure Blob Storage. Once the dataset is labelled and placed in Azure Blob Storage, we start training an object detection model using Azure. After we have trained the model, we deploy it to the Natick datacenter, so the model can run inference on the input stream directly. The result is then either posted to Power BI or displayed directly in the UI for intuitive debugging. Each of these steps is discussed in the following sections.

Figure 6. AI Oriented Architecture for detecting underwater wildlife

The key ingredients of the environment monitoring solution include the following:

- Dataset
  - Labelling data using the Visual Object Tagging Tool (VoTT)
  - Azure Blob Storage to store the dataset for easy access during training and evaluation
- Tools
  - TensorFlow Object Detection API
- Azure
  - Azure GPU cluster, or the CPU cluster available in the Project Natick datacenter
- Development and deployment tools
  - Visual Studio Tools for AI, an extension to develop and debug models in the integrated development environment (IDE)

Labelling data for object detection

The first step is to label the video files. The video streams are publicly available (here and here) and can be saved to local mp4 files using OpenCV. We then label the mp4 video files using VoTT, an open source tool developed by Microsoft and commonly used for labelling objects in videos.

For this problem, we limit the scope to fish and arrow worms. We labelled 200 images in total for these two classes, and the labelled data is available in the GitHub repository.

Figure 7. Sample image of the labelled file

Training an object detection model using Azure

We used TensorFlow to perform object detection. TensorFlow comes with the TensorFlow Object Detection API which has some built-in network architectures, making it easy to train a neural network to recognize underwater animals.

Because the dataset is small (200 images in total for arrow worms and fish), the challenge is to train an accurate enough object detector from limited labelled data while avoiding overfitting. We train the object detector for 1,000 steps, initialized from COCO weights, with strong data augmentation such as flipping, color adjustment (hue, brightness, contrast, and saturation), and bounding box jittering, so that the model generalizes well under most conditions.
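Geometric augmentations have to transform the labels along with the pixels. As an illustration, here is what a horizontal flip and a box jitter do to a normalized (ymin, xmin, ymax, xmax) box. These helpers are our own sketch of the idea, not the TensorFlow Object Detection API's preprocessing code, which the project's configuration would use instead.

```python
import random

# Normalized box (ymin, xmin, ymax, xmax), each coordinate in [0, 1]
Box = tuple[float, float, float, float]


def flip_box_horizontally(box: Box) -> Box:
    """Mirror a box left-to-right to match a horizontally flipped image."""
    ymin, xmin, ymax, xmax = box
    return (ymin, 1.0 - xmax, ymax, 1.0 - xmin)


def _clip(value: float) -> float:
    return min(max(value, 0.0), 1.0)


def jitter_box(box: Box, ratio: float, rng: random.Random) -> Box:
    """Move each edge by up to ratio * (box height or width), then clip to the image."""
    ymin, xmin, ymax, xmax = box
    height, width = ymax - ymin, xmax - xmin
    ymin = _clip(ymin + rng.uniform(-ratio, ratio) * height)
    ymax = _clip(ymax + rng.uniform(-ratio, ratio) * height)
    xmin = _clip(xmin + rng.uniform(-ratio, ratio) * width)
    xmax = _clip(xmax + rng.uniform(-ratio, ratio) * width)
    # Re-order in case a large jitter made the edges cross
    return (min(ymin, ymax), min(xmin, xmax), max(ymin, ymax), max(xmin, xmax))


rng = random.Random(0)  # seeded so the example is repeatable
box = (0.10, 0.20, 0.30, 0.40)
print(flip_box_horizontally(box))
print(jitter_box(box, ratio=0.05, rng=rng))
```

Color adjustments, by contrast, leave the boxes untouched, which is why they are safe to apply freely.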

We also use more anchor ratios, in particular 0.25:1 and 4:1, in addition to the regularly used anchor ratios (such as 2:1), to make sure the model can capture most of the animals. This is especially useful to capture arrow worms since they are long and narrow in shape.
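A common convention for SSD-style anchors is width = scale × √ratio and height = scale / √ratio, where ratio is width:height. That keeps every anchor's area at scale² while changing its shape. This sketch assumes that convention and a made-up scale of 0.2 to show why the 0.25:1 and 4:1 ratios produce the long, thin boxes that suit arrow worms.

```python
import math


def anchor_shape(scale: float, aspect_ratio: float) -> tuple[float, float]:
    """Width and height of an anchor with area scale**2 and the given width:height ratio."""
    root = math.sqrt(aspect_ratio)
    return scale * root, scale / root


for ar in (0.25, 0.5, 1.0, 2.0, 4.0):
    w, h = anchor_shape(0.2, ar)
    print(f"{ar:>5}: width={w:.3f} height={h:.3f}")
```

At 4:1 the anchor is 0.4 wide and 0.1 tall, and at 0.25:1 the reverse, so both horizontal and vertical worms get a well-matched anchor.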

The code and object detection configurations are available in this GitHub repository.

Figure 8. Training loss for the underwater creature detector

Deploying the model to the Project Natick datacenter

Another question we asked was: can we deploy the model to the Natick datacenter to monitor the wildlife teeming around it?

We chose to use CPUs to process the input videos and tested locally to make sure this works well. However, the default pre-built TensorFlow binary is not compiled with optimizations such as AVX or FMA that fully utilize modern CPUs. To better utilize the CPUs, we built the TensorFlow binary from source, turning on all the optimizations for Intel CPUs by following Intel's documentation. With all the optimizations, processing speed increased by 50 percent, from around two frames per second to three frames per second. The build command looks like this:

bazel build --config=mkl -c opt --copt=-mavx --copt=-mavx2 --copt=-mfma --copt=-mavx512f --copt=-mavx512pf --copt=-mavx512cd --copt=-mavx512er --copt="-DEIGEN_USE_VML" //tensorflow/tools/pip_package:build_pip_package
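A binary built with these flags may crash on a CPU that lacks one of the instruction sets, so it can be worth checking the target machine first. This Linux-only sketch, with a helper name of our own, reads the `flags` line of /proc/cpuinfo and reports which of the build command's instruction sets are missing. To our knowledge, avx512pf and avx512er shipped only on Xeon Phi processors, so many mainstream Xeons will report those two as missing.

```python
def missing_cpu_flags(cpuinfo_text: str, wanted: list[str]) -> list[str]:
    """Return the wanted flags that don't appear on any 'flags' line of /proc/cpuinfo text."""
    available: set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            available.update(value.split())
    return [flag for flag in wanted if flag not in available]


# /proc/cpuinfo names for the -m options in the bazel command above
WANTED = ["avx", "avx2", "fma", "avx512f", "avx512pf", "avx512cd", "avx512er"]

if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            print("missing:", missing_cpu_flags(f.read(), WANTED))
    except FileNotFoundError:
        print("/proc/cpuinfo not found (this check is Linux-only)")
```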

Real-time environmental monitoring with Power BI

Environmental and aquatic scientists may benefit from a more intuitive way to monitor statistics around the underwater datacenter, using Power BI's visualizations to quickly gain insight into what is going on.

Power BI has a notion of real-time datasets, which accept streamed data and update dashboards in real time. Posting data to a Power BI dashboard through the REST API takes only a few lines of code:

import json
import urllib.request
from datetime import datetime, timezone

# REST API endpoint, given to you when you create an API streaming dataset.
# It will be of the format:
# https://api.powerbi.com/beta/<tenant id>/datasets/<dataset id>/rows?key=<key id>
REST_API_URL = ' *** Your Push API URL goes here *** '

# fish_count and arrow_worm_count come from the object detector for the current frame.
# Timestamp as an ISO 8601 string in UTC
now = datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")

# Data that we're sending to the Power BI REST API, serialized as a JSON array of rows
data = json.dumps([{
    "timestamp": now,
    "fish_count": fish_count,
    "arrow_worm_count": arrow_worm_count,
}]).encode("utf-8")

req = urllib.request.Request(REST_API_URL, data=data, headers={"Content-Type": "application/json"})
response = urllib.request.urlopen(req)

Because the animals may move quickly, we need to balance capturing data from many frames in quick succession, sending it to the Power BI dashboard, and the compute resources this consumes. We chose to push the analyzed data (for example, fish count) to Power BI three times per second to achieve this balance.

Figure 9. Power BI dashboard running together with the video frame. Bottom: GIF showing the E2E result
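The post doesn't say how the three-pushes-per-second limit was enforced. One simple option, sketched here under our own assumptions, is a throttle checked once per processed frame: the detector keeps running on every frame, but only some results are sent. The `Throttle` class is hypothetical, and so is any `post_to_power_bi` function you would call when it returns True.

```python
import time
from typing import Optional


class Throttle:
    """Let an action through at most `rate_per_second` times per second."""

    def __init__(self, rate_per_second: float) -> None:
        self.min_interval = 1.0 / rate_per_second
        self.last_sent: Optional[float] = None

    def ready(self, now: Optional[float] = None) -> bool:
        """Return True, and remember the time, if enough time has passed since the last send."""
        if now is None:
            now = time.monotonic()
        if self.last_sent is None or now - self.last_sent >= self.min_interval:
            self.last_sent = now
            return True
        return False


# Simulated frame timestamps (seconds) at 10 frames per second
throttle = Throttle(rate_per_second=3)
frames = (0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9)
sent = [t for t in frames if throttle.ready(t)]
print(sent)  # [0.0, 0.4, 0.8]
```

In a live loop you would call `throttle.ready()` with no argument so it uses the monotonic clock, which isn't affected by system clock adjustments.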

Summary

Monitoring environmental impact is an important topic, and AI can help make this process more scalable and automated. In this post, we explained how we developed a deep learning solution for environmental monitoring near the underwater datacenter. We showed how to ingest and store the data and how to train a detector for the marine life seen by the cameras. The model is then deployed to the machines in the datacenter to monitor the marine life. We also explored how to analyze the video streams and use Power BI's streaming APIs to monitor the marine life over time.

If you have questions or comments, please leave a message on our GitHub repository.

Azure Marketplace new offers: July 16-31

We continue to expand the Azure Marketplace ecosystem. From July 16 to 31, 50 new offers successfully met the onboarding criteria and went live. See details of the new offers below:

Virtual Machines

Active Directory Domain Controller 2016: This 2016 virtual machine comes preloaded with the Active Directory Domain Services role, DNS server role, and remote administration tools for AD, DNS, and the required PowerShell modules.

Akamai Enterprise Application Access: Try a new and convenient alternative to traditional remote-access technologies, such as VPNs, RDP, and proxies. A cloud architecture closes inbound firewall ports while providing authenticated users access to only their specific apps.

Amazon S3 API for Azure Blob / Multicloud Storage: Distribute and migrate data between multiple clouds with transparent S3-to-Azure API translation. Flexify.IO enables cloud-agnostic and multi-cloud deployments by combining storage spaces into a single virtual namespace.

beekeeper fully automated patch management: beekeeper by Infront Consulting Group Ltd. is an innovative solution that automates patch management. Utilizing its workload engine, beekeeper automates pre- and post-patching tasks.

BlueCat DNS Edge Service Point for Azure: Edge reduces your attack surface, detects malicious behavior, reduces the time needed to remediate breaches, and adds visibility and control to corporate networks under siege from an explosion of malware attacks that exploit DNS.

centos 7 with joomla: CentOS 7 with PHP and Joomla preinstalled.

Corda Enterprise Single Node: Corda Enterprise by R3 is a commercial distribution of Corda software optimized to meet the demands of modern-day business. It includes a blockchain application firewall and cross-distribution and cross-version wire compatibility.

Digital transformation platform: Looking for a platform to help you navigate digital transformation while retaining familiar Microsoft technologies? Mavim offers infinitely scalable software that facilitates the creation of a dynamic, virtual representation of your organization.

Fusio open source API: Fusio by tunnelbiz.com is an open-source API management platform to help you build and manage REST APIs. Fusio provides you with the tools to quickly build an API from different data sources and create customized responses.

GigaSECURE Cloud 5.4.00 - Hourly (100 pack): GigaSECURE Cloud by Gigamon Inc. delivers intelligent network traffic visibility for workloads running in Azure and enables increased security, operational efficiency, and scale across virtual networks (VNets).

GoAnywhere MFT for Linux: HelpSystems' GoAnywhere MFT is a flexible managed file-transfer solution that automates and secures file transfers using a centralized, enterprise-level approach with support for all popular file-transfer and encryption protocols.

Grafana Certified by Bitnami: Grafana is an open-source analytics and monitoring dashboard for more than 40 data sources, including Graphite, Elasticsearch, Prometheus, MySQL, and PostgreSQL.

H2O Driverless AI: This artificial intelligence platform automates some of the most difficult data science and machine learning workflows, such as feature engineering, model validation, model tuning, model selection, and model deployment.

Intellicus BI Server (25 Users): Intellicus BI Server is an enterprise reporting and business intelligence platform with drag-and-drop interactive capability to pull KPIs to personalized dashboards and monitor the business parameters on a browser or a mobile device.

Intellicus BI Server (50 Users): Intellicus BI Server is an enterprise reporting and business intelligence platform with drag-and-drop interactive capability to pull KPIs to personalized dashboards and monitor the business parameters on a browser or a mobile device.

Microsoft Web Application Proxy - WAP 2016 Server: Deploy a Microsoft Web Application Proxy 2016 server preloaded with the ADFS WAP role and ADFS WAP PowerShell modules alongside the prerequisites for you to build an ADFS farm or add to an existing one.

Project Tools Product Suite: Profecia IT (Pty) Ltd.'s Project Tools Product Suite is an automated reporting tool that collects and captures data via the mobile application, then completes your report automatically while your data is being captured.

Qualys Virtual Firewall Appliance: The Qualys Virtual Firewall Appliance provides a continuous view of security events and compliance, putting a spotlight on your Azure infrastructure. It acts as an extension to the Qualys Cloud Platform.

Ripple Development & Training Suit(Techlatest.net): If you're looking to get started with Ripple blockchain development and want to have an out-of-box environment up and running in minutes, this virtual machine is for you.

RSA NetWitness Suite 11.1: RSA NetWitness 11.1 strengthens the RSA NetWitness Evolved SIEM offering through deeper and extended visibility and threat intelligence to help increase productivity.

Secured Nginx on Ubuntu 16.04 LTS: Nginx can be deployed to serve dynamic HTTP content on the network using FastCGI, SCGI handlers for scripts, WSGI application servers, or Phusion Passenger modules, and it can serve as a software load balancer.

secured-mariadb-on-ubuntu-16-04: MariaDB turns data into structured information in a wide array of applications, ranging from banking to websites. It is an enhanced, drop-in replacement for MySQL.

SharePoint Term Extractor: DIQA Projektmanagement GmbH's SharePoint Term Extractor attaches to one or more SharePoint libraries and automatically extracts relevant terms for contained documents in common file formats. You can then use the terms to tag documents.

SoftEther VPN Server Windows 2016: Quickly deploy SoftEther VPN server on Windows 2016. SoftEther VPN is an alternative to OpenVPN and Microsoft's VPN servers, and it supports Microsoft SSTP VPN for Windows.

ThingsBoard Professional Edition with Cassandra: The Professional Edition is a closed-source version of the ThingsBoard IoT platform. It offers several value-add features, including platform integrations, device and asset groups, a scheduler, and advanced white-labeling.

Web Applications

Archive2Azure - Intelligent Information Management: Archive2Azure is an Azure-powered platform for modernizing your data estate, delivering long-term, secure, and cost-effective retention and management of organizational data.

Barracuda WAF-as-a-Service: This cloud-delivered solution enables anyone to protect web apps against the OWASP’s top 10 threats, distributed denial of service attacks, zero-day attacks, and more in just minutes. Barracuda’s security can be configured in just five steps.

fedora linux: Fedora Linux server by tunnelbiz.com is an operating system with the latest open-source technology.

Gieom: Gieom helps define controls in your manual processes to manage your business risks. It supplies information about each risk factor, assesses its impact on a scale of 1-10, lists the probability of it happening, and provides a mitigation plan.

Kyligence Enterprise: Powered by Apache Kylin, Kyligence Enterprise empowers business analysts to architect business intelligence on Hadoop with industry-standard data warehouse and BI methodology.

Vormetric Data Security Manager v6.1.0: The Vormetric Data Security Manager (DSM) from Thales eSecurity serves as the center point for multi-cloud advanced encryption. You control encryption keys and policies.

Consulting Services

|

Advanced Azure DevOps: 3 Day Workshop: In this hands-on course from Opsgility LLC, students will learn about automation and configuration management for Azure Infrastructure-as-a-Service and Platform-as-a-Service using the Azure Resource Manager. |

|

Azure Data Security Healthcheck: 2-Wk Workshop: The Data Security Healthcheck by Coeo Ltd. will identify and review data assets and end points, as well as potential attack vectors and vulnerabilities, recommending strategies and solutions to mitigate these risks. |

|

Azure EDI Cloud Migration & Integration: 5-Day PoC: EDI in the cloud is supported by Azure Integration Services, requiring no on-premises footprint, servers, or dedicated administrative staff. This proof of concept lays the foundation for B2B apps using EDI. |

|

Azure Enterprise Architecture: 1-Day Workshop: This on-site technical workshop by igroup will gather requirements and focus on what infrastructure and/or services are required to deploy an existing software application. |

|

Azure Machine Learning (ML): 2 Day Workshop: This course by Opsgility LLC explores Azure Machine Learning for students who are either new to machine learning or new to Microsoft Azure. This course is designed for data professionals and data scientists. |

|

Azure Migration & Integration: FREE 1-Day Briefing: TwoConnect's Azure Integration Services (AIS) migration briefing helps guide you in your effort to develop a plan to migrate your on-premises apps and integrations to the cloud. |

|

Azure Migration: 7-Day Assessment: Get an overview of your infrastructure environment, including dependencies and performance. Sentia will provide a recommended model of operations, securing a seamless migration and avoiding excess costs. |

|

Big Data and Machine Learning: 2 Day Workshop: This Azure Big Data and machine learning bootcamp by Opsgility LLC is designed to give students a clear architectural understanding of the application of Big Data patterns in Azure. |

|

BizTalk to Azure Integration: 3-Day Assessment: Migrating BizTalk apps to Azure Integration Services (AIS) and other Azure services is a multi-step process that begins with an expert analysis of existing apps.

BizTalk VM to Azure VM: 2-Day Assessment: Moving virtual machines from on-premises to Azure can lower costs associated with BizTalk Server infrastructure. TwoConnect's BizTalk VM to Azure VM assessment can create a migration plan for your VM situation.

Carbonite Endpoint Protection - Azure 1000 Bundle: Our team will deploy our Platform-as-a-Service offering onto a customer's Azure Enterprise Agreement, which will allow the customer to back up endpoints to Azure. Our team will also provide training.

Cognitive and AI: 2 Day Workshop: This course by Opsgility LLC introduces you to Microsoft Cognitive Services and its features, including search, audio, computer vision, and language processing services. This course is designed for IT developers.

DevOps in Azure: 2 Day Workshop: In this hands-on course by Opsgility LLC, students are introduced to fundamental DevOps concepts, as well as core technologies and services in Azure. This course is designed for IT professionals, developers, and DevOps engineers.

InsurHUB for IFRS 17: 4-Hour Workshop: BDO’s team of experts will detail how the cloud-based solution InsurHUB can help your organization address IFRS 17 compliance using Microsoft Azure. IFRS 17 governs financial reporting for insurance contracts and applies to insurance companies in Canada.

Migrate to Azure Stack - Windows 1Tb (4-Wks): Our team will ensure that every aspect of your Windows migration project is a success. We use Carbonite Migrate technology for efficient migration of Windows workloads to Azure Stack with near-zero downtime.

NetX- Veritas NetBackup: 2-Day Assessment: NetX has developed a Veritas NetBackup assessment service that evaluates the health of a NetBackup environment and includes an upgrade path to the latest version, as well as cloud readiness.

On-Demand Training and Lab: 4-Wk Workshop: This training in the SkillMeUp series by Opsgility LLC provides on-demand courses and live lab environments for an immersive learning experience. SkillMeUp caters to nontechnical learners as well as to IT pros.

Public Service to Azure API: 3-Day Assessment: Host your public-facing services on Azure to bring tremendous benefits in availability, security, and cost. TwoConnect's assessment includes a service review, a back-end review, and a migration plan.

SaaS Integration with Azure Logic Apps: 3-Day POC: Azure Integration Services (AIS) makes it easy to integrate with any Software-as-a-Service app. This proof of concept by TwoConnect will enable you to show the benefits of AIS and other Azure services.

Sharing a self-hosted Integration Runtime infrastructure with multiple Data Factories

The Integration Runtime (IR) is the compute infrastructure used by Azure Data Factory to provide data integration capabilities across different network environments. If you need to perform data integration and orchestration securely in a private network environment, which does not have a direct line-of-sight from the public cloud environment, you can install a self-hosted IR on premises behind your corporate firewall, or inside a virtual private network.

Until now, by design, you had to create at least one such compute infrastructure in every data factory that needed hybrid and on-premises data integration capabilities. This meant that if ten data factories were used by different project teams to access on-premises data stores and orchestrate inside a VNet, you had to create ten self-hosted IR infrastructures, adding cost and management overhead for IT teams.

With the new capability of self-hosted IR sharing, you can share the same self-hosted IR infrastructure across data factories. This lets you reuse the same highly available and scalable self-hosted IR infrastructure from different data factories within the same Azure Active Directory tenant. We are introducing a new concept, the Linked self-hosted IR, which references another self-hosted IR infrastructure. It does not change the way you currently author pipelines in Data Factory and works the same way as a self-hosted IR does. Once you have created a Linked self-hosted IR, you can use it in your Linked Services just as you would use a self-hosted IR.

New self-hosted IR terminology (sub-types):

- Shared IR – The original self-hosted IR, which runs on physical infrastructure. By default, a self-hosted IR has no sub-type; once sharing is enabled on it, it carries the sub-type Shared, denoting that it is shared with other data factories.

- Linked IR – The IR which references another Shared IR. This is a logical IR and uses the infrastructure of another self-hosted IR (shared).
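To make the Shared/Linked relationship concrete, here is a minimal Python sketch that models it in memory. The class names, the `share_with` method, and the tenant check are hypothetical illustrations of the rules described above (sharing stays within one Azure Active Directory tenant, and a Linked IR owns no compute but runs on the Shared IR's infrastructure). This is not the Data Factory SDK or REST API.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class SelfHostedIR:
    """Hypothetical model of a self-hosted IR (not an Azure SDK type)."""
    name: str
    factory: str
    tenant_id: str
    sub_type: Optional[str] = None  # no sub-type until sharing is enabled
    linked_factories: List[str] = field(default_factory=list)

    def share_with(self, factory: str, tenant_id: str) -> "LinkedIR":
        # Sharing is only allowed within the same Azure AD tenant.
        if tenant_id != self.tenant_id:
            raise PermissionError("Linked IRs must be in the same AAD tenant")
        self.sub_type = "Shared"
        self.linked_factories.append(factory)
        return LinkedIR(name=f"{self.name}-linked", factory=factory, target=self)


@dataclass
class LinkedIR:
    """Logical IR: owns no compute and delegates to the shared IR's nodes."""
    name: str
    factory: str
    target: SelfHostedIR

    @property
    def sub_type(self) -> str:
        return "Linked"

    def compute_host(self) -> str:
        # Activities that reference this IR actually run on the shared IR.
        return f"{self.target.factory}/{self.target.name}"


if __name__ == "__main__":
    shared = SelfHostedIR("OnPremIR", factory="adf-central", tenant_id="contoso")
    linked = shared.share_with("adf-team-a", tenant_id="contoso")
    print(shared.sub_type, linked.sub_type, linked.compute_host())
```

The point of the design is that the ten project factories from the earlier example would each hold a lightweight `LinkedIR`, while only one `SelfHostedIR` carries the physical nodes.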

High-level architecture outlining the Self-hosted IR sharing mechanism across data factories:

Authoring/creating a Linked self-hosted IR

Refer to the step-by-step guide for sharing a self-hosted IR with multiple data factories.

References:

- Create a self-hosted IR infrastructure using Azure Resource Manager template on Azure VM

- Create a highly available and scalable self-hosted IR cluster

- Tutorial – Copy data from an on-premises SQL Server database to Azure Blob storage using self-hosted IR

- Security considerations for hybrid data movement involving self-hosted IR

Azure.Source – Volume 46

Now in preview

Reduce your exposure to brute force attacks from the virtual machine blade - One way to reduce exposure to an attack is to limit the amount of time that a port on your virtual machine is open. Ports only need to be open for a limited amount of time for you to perform management or maintenance tasks. Just-In-Time VM Access helps you control the time that the ports on your virtual machines are open. It leverages network security group (NSG) rules to enforce a secure configuration and access pattern.
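The core idea of Just-In-Time access is a default-deny port that opens only for a bounded window. The Python sketch below illustrates that idea under stated assumptions: `JitPolicy`, `JitAllowRule`, the per-source-IP matching, and capping each request at a maximum duration are hypothetical stand-ins for the temporary NSG rules JIT creates; this is not the Security Center API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List


@dataclass(frozen=True)
class JitAllowRule:
    """Hypothetical temporary allow rule, similar in spirit to a JIT NSG rule."""
    port: int
    source_ip: str
    expires_at: datetime


class JitPolicy:
    def __init__(self, allowed_ports: List[int], max_duration: timedelta):
        self.allowed_ports = set(allowed_ports)
        self.max_duration = max_duration
        self.rules: List[JitAllowRule] = []

    def request_access(self, port: int, source_ip: str, duration: timedelta,
                       now: datetime) -> JitAllowRule:
        if port not in self.allowed_ports:
            raise ValueError(f"port {port} is not JIT-enabled")
        # Cap the requested window at the policy's maximum duration.
        window = min(duration, self.max_duration)
        rule = JitAllowRule(port, source_ip, now + window)
        self.rules.append(rule)
        return rule

    def is_open(self, port: int, source_ip: str, now: datetime) -> bool:
        # Default deny: traffic passes only via an unexpired matching rule.
        return any(r.port == port and r.source_ip == source_ip and now < r.expires_at
                   for r in self.rules)


if __name__ == "__main__":
    t0 = datetime(2018, 8, 24, 9, 0, tzinfo=timezone.utc)
    policy = JitPolicy(allowed_ports=[22, 3389], max_duration=timedelta(hours=3))
    policy.request_access(22, "203.0.113.10", timedelta(hours=8), now=t0)
    print(policy.is_open(22, "203.0.113.10", t0 + timedelta(hours=1)))  # True
    print(policy.is_open(22, "203.0.113.10", t0 + timedelta(hours=4)))  # False: capped at 3 hours
```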

Respond to threats faster with Security Center’s Confidence Score - Azure Security Center provides you with visibility across all your resources running in Azure and alerts you to potential or detected issues. Security Center can help your team triage and prioritize alerts with a new capability called Confidence Score. The Confidence Score automatically investigates alerts by applying industry best practices, intelligent algorithms, and processes used by analysts to determine whether a threat is legitimate and provides you with meaningful insights.

Tuesdays with Corey

Tuesdays With Corey | Awesome Demo of Azure Migrate tool - Corey Sanders, Corporate VP - Microsoft Azure Compute team, sat down with Abhishek Hemrajani, Principal PM Manager on the Azure Compute team, to talk about the Azure Migrate utility.

Now generally available

DPDK (Data Plane Development Kit) for Linux VMs now generally available - Data Plane Development Kit (DPDK) for Azure Linux Virtual Machines (VMs) offers a fast user-space packet processing framework that lets performance-intensive applications bypass the VM’s kernel network stack. DPDK provides a key performance differentiator in driving network function virtualization implementations. Customers in Azure can now use DPDK capabilities in Linux VMs running multiple Linux OS distributions (Ubuntu, RHEL, CentOS, and SLES) to achieve higher packets per second (PPS).

Azure SQL Data Warehouse Gen2 now generally available in France and Australia - Azure SQL Data Warehouse (Azure SQL DW) is a fast, flexible, and secure analytics platform offering you a SQL-based view across data. It is elastic, enabling you to provision a cloud data warehouse and scale to terabytes in minutes. Azure SQL DW Gen2 brings the best of Microsoft software and hardware innovations to dramatically improve query performance and concurrency. Compute Optimized Gen2 tier is now available in 22 regions.

Azure Friday

Azure Friday | Azure Stack - An extension of Azure - Ultan Kinahan joins Scott Hanselman to discuss how Azure Stack is an Azure-consistent cloud platform that you can place where you need it, regardless of connectivity. It brings several key advantages to your cloud strategy: edge and disconnected capabilities, a way to address governance and sovereignty concerns, and the ability to run PaaS services on premises.

News and updates

Multi-member consortium support with Azure Blockchain Workbench 1.3.0 - Continuing a monthly release cadence, Azure Blockchain Workbench 1.3.0 is now available. This release offers faster and more reliable deployment, better transaction reliability, ability to deploy Workbench in a multi-member Ethereum PoA consortium, simpler pre-deployment script for AAD, and sample code and a tool for working with the Workbench API.

Hardening the security of Azure IoT Edge - The Azure IoT Edge security manager is a well-bounded security core for protecting the IoT Edge device and all its components by abstracting the secure silicon hardware. It is the focal point for security hardening and provides a technology integration point for original equipment manufacturers (OEMs). In essence, the IoT Edge security manager comprises software that works in conjunction with secure silicon hardware, where available and enabled, to help deliver the highest security assurances possible.

Public Environment Repository in Labs - We're releasing a public repository of Azure Resource Manager templates for each lab. You can use the repository to create environments without having to connect to an external GitHub source by yourself. The repository includes frequently used templates such as Azure Web Apps, Service Fabric clusters, and developer SharePoint farm environments.

Speech Services August 2018 update - Announces the release of another update to the Cognitive Services Speech SDK (version 0.6.0). This release adds support for Java on Windows 10 (x64) and Linux (x64) and extends .NET Standard 2.0 support to the Linux platform. The samples section of the SDK has been updated with samples showcasing the newly supported languages. There are also changes that impact the Speech Devices SDK.

Cross-subscription disaster recovery for Azure virtual machines - Announces cross-subscription disaster recovery (DR) support for Azure virtual machines using Azure Site Recovery (ASR). You can now configure DR for Azure IaaS applications to a different subscription within the same Azure Active Directory tenant. You can replicate your virtual machines across subscriptions to a different Azure region of your choice within a geographical cluster, which helps you meet the business continuity and disaster recovery requirements for your IaaS applications without altering the subscription topology of your Azure environment. Disaster recovery between Azure regions is available in all Azure regions where ASR is available.

Additional news and updates

- Managed instance general purpose name changes

- Migrate projects to the Inheritance process model: VSTS Sprint 139 Update

- Azure IoT Edge 1.0.1 release

- Data Factory supports service principal and MSI authentication for Azure Blob connectors

Several updates support improved experiences with Azure HDInsight, including:

- Azure HDInsight is now available in South India

- A_V2 series VMs are available in Azure HDInsight

- Kafka 1.1 support on Azure HDInsight

- G-series VMs are available in Azure HDInsight

The Azure Podcast

The Azure Podcast: Episode 243 - Azure File Sync - Sibonay Koo, a PM in the Azure Files team, talks to us about a new service that just went GA: Azure File Sync. She gives us use cases for the new service as well as tips and tricks for getting the most out of it.

Azure tips & tricks

How to access Cloud Shell from within Microsoft docs | Azure Tips and Tricks

How to start, restart, stop or delete multiple VMs | Azure Tips and Tricks

Technical content and training

Migrate Windows Server 2008 to Azure with Azure Site Recovery - Azure Site Recovery lets you easily migrate your Windows Server 2008 machines including the operating system, data and applications on it to Azure. All you need to do is perform a few basic setup steps, create storage accounts in your Azure subscription, and then get started with Azure Site Recovery by replicating servers to your storage accounts. Azure Site Recovery orchestrates the replication of data and lets you migrate replicating servers to Azure when you are ready. You can use Azure Site Recovery to migrate your servers running on VMware virtual machines, Hyper-V or physical servers.

Azure Block Blob Storage Backup - Azure Blob Storage is Microsoft's massively scalable cloud object store. Blob Storage is ideal for storing any unstructured data such as images, documents, and other file types. Although Blob Storage supports replication out of the box, it's important to understand that replication does not protect against application errors, because an accidental delete or corruption is replicated as well. In this blog post, Hemant Kathuria outlines a backup solution that you can use to perform weekly full and daily incremental backups of storage accounts containing block blobs, capturing create, replace, and delete operations. The solution also walks through storage account recovery should it be required.
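The weekly-full/daily-incremental pattern can be sketched as a small selection routine. This is a minimal illustration, not the solution from the linked post: it assumes Sunday as the full-backup day, a hypothetical inventory that maps blob names to last-modified times, and delete detection by diffing the current inventory against the names recorded at the previous backup.

```python
from datetime import date, datetime
from typing import Dict, Iterable, List, Optional

# Assumption: full backups run on Sunday (date.weekday(): Monday=0 ... Sunday=6).
FULL_BACKUP_WEEKDAY = 6


def backup_type(day: date) -> str:
    """Weekly full backup on one fixed weekday, incremental on all others."""
    return "full" if day.weekday() == FULL_BACKUP_WEEKDAY else "incremental"


def blobs_to_copy(blobs: Dict[str, datetime], day: date,
                  last_backup: Optional[datetime]) -> List[str]:
    """Pick blob names to copy.

    `blobs` maps blob name -> last-modified time (hypothetical inventory).
    A full backup copies everything; an incremental one copies only blobs
    created or replaced since the previous backup.
    """
    if backup_type(day) == "full" or last_backup is None:
        return sorted(blobs)
    return sorted(name for name, modified in blobs.items() if modified > last_backup)


def deleted_since(previous: Iterable[str], blobs: Dict[str, datetime]) -> List[str]:
    """Names present at the previous backup but missing now (deletes to record)."""
    return sorted(set(previous).difference(blobs))
```

Last-modified times alone cannot reveal deletions, which is why the sketch keeps a separate inventory diff for them.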

Logic Apps, Flow connectors will make Automating Video Indexer simpler than ever - Video Indexer recently released a new and improved Video Indexer V2 API. This RESTful API supports both server-to-server and client-to-server communication and enables Video Indexer users to integrate video and audio insights easily into their application logic, unlocking new experiences and monetization opportunities. To make the integration even easier, we also added new Logic Apps and Flow connectors that are compatible with the new API. Learn how to get started quickly using Microsoft Flow templates that use the new connectors to automate the extraction of insights from videos.

WebJobs in Azure with .NET Core 2.1 - A WebJob is a program running in the background of an App Service. It runs in the same context as your web app at no additional cost. Creating a WebJob in .NET Core isn't hard, but you have to know some tricks, especially if you want to use some .NET Core goodies like logging and DI. In this post, Sander Rossel shows how to build a WebJob using .NET Core 2.1 and release it to Azure using Visual Studio, the Azure portal, and VSTS.

Azure Machine Learning in plain English - Data scientist and author Siraj Raval recently released a 12-minute video overview of Azure Machine Learning. The video included in this post begins with an overview of cloud computing and Microsoft Azure in general, before getting into the details of some specific Azure services for machine learning.

//DevTalk : App Service – SSL Settings Revamp - The App Service SSL settings experience is one of the most used features in App Service. Based on customer feedback, we made several changes to improve the overall experience of managing certificates in Azure App Service. Check out this post to learn about improvements to editing SSL bindings, private certificate details, uploading certificates, and adding public certificates.

Azure Content Spotlight – API Management Suite – VSTS extension v2.0 - This spotlight is for a VSTS extension: the API Management Suite. The extension brings API Management into the release lifecycle, allowing you to perform many of the activities supported in the Azure portal. Also included is a security checker to ensure all released endpoints are secure. The source is available on GitHub.

Operationalize Your Deep Learning Models with Azure CLI 2.0 - Francesca Lazzeri, AI & Machine Learning Scientist at Microsoft, shows how to deploy your deep learning models on a Kubernetes cluster as a web service using Azure Command-Line Interface (Azure CLI) 2.0. This approach simplifies the model deployment process by using a set of Azure ML CLI commands.

AI Show

AI Show | Sketch2Code - Learn how this application transforms any hand-drawn design into HTML code with AI by detecting design patterns, understanding handwritten text, understanding structure, and finally building HTML.

Customers and partners

Azure Marketplace consulting offers: May - The Azure Marketplace is the premier destination for all your Azure software and consulting needs. In May, twenty-six consulting offers successfully met the onboarding criteria and went live, including: App Migration to Azure 10-Day Workshop from Imaginet and Cloud Governance: 3-Wk Assessment from Cloudneeti.

The IoT Show

Internet of Things Show | Azure IoT for Smart Spaces - Meet Lyrana Hughes, a developer in the Azure IoT team, who gives us an early look at the spatial intelligence capabilities she is working on. Lyrana and her team are working with partners in the world of smart building and smart spaces to develop this new set of capabilities within Azure IoT.

Internet of Things Show | Microsoft Smart IoT Campus - The Microsoft Campus in Redmond, WA is (obviously) a smart campus that leverages IoT in many ways. The IoT Show managed to get a pass to visit one of the labs where the Applied Innovation team of the Microsoft Digital Group tests and develops new IoT technologies for Smart Buildings. In this episode, Jeremy Richmond, from McDonald-Miller, demos a solution developed with the Applied Innovation team and used for the maintenance of the HVAC systems across Microsoft's Campus, integrating IoT sensor information and devices with Azure IoT and Dynamics 365 Connected Field Service.

Industries

Driving industry transformation with Azure – Getting started – Edition 1 - Through dedicated industry resources from Microsoft and premier partners, new solutions, content, and guidance are released almost daily. This first post in what promises to be a monthly series aggregates a series of the newest resources focused on how Azure can address common challenges and drive new opportunities in a variety of industries.

Expanded Azure Blueprint for FFIEC compliant workloads - Announces the general availability of the expanded Blueprint for Federal Financial Institution Examination Council (FFIEC) regulated workloads. As more financial services customers move to the Azure cloud platform, this expanded Blueprint explains how to deploy four different reference architectures in a secure and compliant way. It also takes the guesswork out of figuring out what security controls Microsoft implements on your behalf when you build on Azure, and how to implement the customer-responsible security controls.

A Cloud Guru's Azure This Week

A Cloud Guru’s Azure This Week - 24 August - This time on Azure This Week, Lars talks about three new features on Azure: VNet service endpoints for MySQL and PostgreSQL, Azure HDInsight Apache Phoenix now supporting Zeppelin and Azure API management extension for VSTS. Also, participate in the AI challenge from Microsoft to win great prizes.

Keeping shelves stocked and consumers happy (and shopping!)

Being a working parent of two young children, I try to limit how much time I spend on household errands. I am one of those shoppers who has become conditioned to shopping based on convenience and ease. The products I want and need must be available to me through every channel I shop in, always! There is nothing more frustrating than shopping online only to have my order cancelled because it can’t be fulfilled, or shopping in store and finding the shelf empty. Sometimes I can delay my purchase. But if not, I either look for an alternative product or take my business to another retailer. Either way, I’m taking my dollars elsewhere, and both the local retailer and the consumer brand lose a sale, and potentially a long-term relationship if out-of-stocks become a regular occurrence.

Advanced analytics can solve out-of-stock challenges

An improved SKU assortment that uses advanced analytics to more closely monitor, predict, and prescribe a course of action for out-of-stocks may prevent this experience. Today, we can solve for this and similar scenarios by developing algorithms that provide predictive insights to optimize available SKUs, tailoring assortments by store to ensure products are stocked to meet consumer demand.

An advanced analytics solution must be able to handle millions of SKUs and segment data into detailed comparisons. The outcome is the ability to maximize sales at every store by tuning product assortments using advanced analytics and visualization tools to enable insights. Doing this successfully could result in an estimated 5-10 percent sales increase.
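As a rough illustration of the two outputs described above (flagging likely out-of-stocks and tailoring assortments per store), here is a minimal Python sketch. It deliberately uses simple heuristics rather than machine learning: a days-of-cover check against an assumed replenishment lead time, and ranking SKUs by sales velocity within each store. `StoreSku` and its fields are hypothetical, and the trailing average stands in for a real demand forecast; this is not Neal Analytics' method.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class StoreSku:
    store: str
    sku: str
    on_hand: int            # units currently in stock
    avg_daily_sales: float  # trailing average; a stand-in for a real forecast


def at_risk(rows: List[StoreSku], lead_time_days: float) -> List[Tuple[str, str]]:
    """Flag (store, sku) pairs likely to sell out before replenishment arrives."""
    flagged = []
    for r in rows:
        if r.avg_daily_sales <= 0:
            continue  # no demand signal, so selling cannot empty the shelf
        days_of_cover = r.on_hand / r.avg_daily_sales
        if days_of_cover < lead_time_days:
            flagged.append((r.store, r.sku))
    return flagged


def tailored_assortment(rows: List[StoreSku], slots_per_store: int) -> Dict[str, List[str]]:
    """Keep each store's fastest-selling SKUs, up to its shelf capacity."""
    by_store: Dict[str, List[StoreSku]] = defaultdict(list)
    for r in rows:
        by_store[r.store].append(r)
    result: Dict[str, List[str]] = {}
    for store, items in by_store.items():
        ranked = sorted(items, key=lambda item: item.avg_daily_sales, reverse=True)
        result[store] = [item.sku for item in ranked[:slots_per_store]]
    return result
```

A production solution handling millions of SKUs would replace the trailing average with a per-store forecast and run this logic at scale, but the decision structure stays the same.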

Azure offers several out-of-the-box services that can be used to build a scalable analytics solution that is cost-effective and comprehensive. And if developing a solution is not a viable option, Microsoft works with partners such as Neal Analytics, who have proven capabilities solving this business challenge at scale.

Assortment Optimization Solution

How machine learning is applied to the out-of-stock example

To determine how to solve out-of-stocks with a SKU assortment optimization solution, read the Inventory optimization through SKU Assortment + machine learning use case. This document provides options for solving the out-of-stock challenge and a solution overview leveraging Azure Services, including an approach by Neal Analytics, Microsoft 2017 Global Partner of the Year for business analytics.

I post regularly about new developments on social media. If you would like to follow me, you can find me on LinkedIn and Twitter.

Microsoft Azure Data welcomes attendees to VLDB 2018

Hello VLDB attendees!

Welcome to Rio de Janeiro. I wanted to take this opportunity to share with you some of the exciting work in data that’s going on in the Azure Data team at Microsoft, and to invite you to take a closer look.

Microsoft has long been a leader in database management with SQL Server, recognized by Gartner as the top DBMS for three years in a row. The emergence of the cloud and the edge as the new frontiers for computing, and thus for data management, is an exciting direction. Data is now dispersed within and beyond the enterprise: on-premises, in the cloud, and on edge devices. We must enable intelligent analysis, transactions, and responsible governance for all data everywhere, from the moment it is created to the moment it is deleted, through the entire life cycle of ingestion, updates, exploration, data prep, analysis, serving, and archival.

These trends require us to fundamentally re-think data management. Transactional replication can span continents. Data is not just relational. Interactive, real-time, and streaming applications with enterprise level SLAs are becoming common. Machine learning is a foundational analytic task and must be supported while ensuring that all data governance policies are enforced, taking full advantage of advances in deep learning and hardware acceleration via FPGAs and GPUs. Microsoft is a very data-driven company, and to support product teams such as Bing, Office, Skype, Windows, and Xbox, the Azure Data team operates some of the world’s largest data services for our internal customers. This grounds our thinking about how data management is changing, through close collaborations with some of the most sophisticated application developers on the planet. Of course, even in a company like Microsoft, the majority of data owners and users are domain experts, not database experts. This means that even though the underlying complexity of data management is growing rapidly, we need to greatly simplify how users deal with their data. Indeed, a large cloud database service contains millions of customer databases, and requires us to handle many of the tasks previously handled by database administrators.

We are excited about the opportunity to re-imagine data management. We are making broad and deep investments in SQL Server and open source technologies, in Azure data services that leverage them, as well as in new built-for-cloud technologies. We have an ambitious vision of the future of data management that embraces Data Lakes and NoSQL, on-premises, in the cloud, and on edge devices. There has never been a better time to be part of database systems innovation at Microsoft, and we invite you to explore the opportunities to be part of our team.

This is an exciting time in our industry with many companies competing for talent and customers, and as you consider options, we want to highlight how Microsoft is differentiated in several respects. First, we have a unique combination of a world-class data management ecosystem in SQL Server and a leading public cloud in Azure. Second, our culture puts customers first with a commitment to bringing them the best of open source technologies alongside the best of Microsoft. Third, we have a deep commitment to innovation. Product teams collaborate closely with research and advanced development groups at Cloud Information Services Lab, Gray Systems Lab, and Microsoft Research to go farther faster, and to maintain strong ties with the research community.

In this blog, I’ve listed the many services and ongoing work in the Azure Data group at Microsoft, together with links that will give you a closer look. I hope you will find these of interest.

Have a great VLDB conference!

Rohan Kumar

Corporate Vice President, Azure Data

________________________________________

Azure Data blog posts

The Microsoft data platform continues momentum for cloud-scale innovation and migration

Azure data blog posts by Rohan Kumar

SQL Server

Industry-leading database management system, now available on Linux/Docker and Windows, on-premises and in the cloud.

Azure Cosmos DB: The industry’s first globally-distributed, multi-model database service

Azure Cosmos DB is the first globally-distributed data service that lets you elastically scale throughput and storage across any number of geographical regions while guaranteeing low latency, high availability, and consistency – backed by the most comprehensive SLAs in the industry. Azure Cosmos DB is built to power today’s IoT and mobile apps, and tomorrow’s AI-hungry future.

It is the first cloud database to natively support a multitude of data models and popular query APIs, is built on a novel database engine capable of ingesting sustained volumes of data, and provides blazing-fast queries – all without having to deal with schema or index management. And it is the first cloud database to offer five well-defined consistency models, so you can choose just the right one for your app.
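The five consistency levels are Strong, Bounded Staleness, Session, Consistent Prefix, and Eventual; weaker levels generally trade consistency for lower latency and higher availability. The sketch below orders them and picks the weakest level that meets a set of requirements. The level names are real; the `weakest_sufficient` helper and its flags are hypothetical, and treating the levels as a strict spectrum (each level providing every guarantee of the weaker ones) is a simplification.

```python
from enum import IntEnum


class Consistency(IntEnum):
    """Azure Cosmos DB's five consistency levels, weakest (0) to strongest (4)."""
    EVENTUAL = 0
    CONSISTENT_PREFIX = 1
    SESSION = 2
    BOUNDED_STALENESS = 3
    STRONG = 4


def weakest_sufficient(*, ordered_reads: bool = False, read_your_writes: bool = False,
                       bounded_lag: bool = False, linearizable: bool = False) -> Consistency:
    """Return the weakest level meeting every requirement (simplified spectrum)."""
    if linearizable:
        return Consistency.STRONG             # reads always see the latest write
    if bounded_lag:
        return Consistency.BOUNDED_STALENESS  # reads lag by at most K versions or T time
    if read_your_writes:
        return Consistency.SESSION            # guarantee holds within one client session
    if ordered_reads:
        return Consistency.CONSISTENT_PREFIX  # never see writes out of order
    return Consistency.EVENTUAL
```

Choosing the weakest sufficient level, rather than defaulting to Strong, is what lets an app pick "just the right one" as described above.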

Azure Data Lake Analytics - An on-demand analytics job service to power intelligent action

Easily develop and run massively parallel data transformation and processing programs in U-SQL, R, Python, and .NET over petabytes of data. With no infrastructure to manage, you can process data on demand, scale instantly, and only pay per job. There is no infrastructure to worry about because there are no servers, virtual machines, or clusters to wait for, manage, or tune. Instantly scale the processing power for any job.

Azure Data Catalog – Find, understand, and govern data

Companies collectively own exabytes of data across hundreds of billions of streams, files, tables, reports, spreadsheets, VMs, etc. But for the most part, companies don't know where their data is, what's in it, how it's being used, or if it's secure. Our job is to fix that. We are building an automated infrastructure to find and classify any form of data anywhere it lives, from on-premises to cloud, and to figure out what the data contains and how it should be managed and protected. Our global-scale infrastructure will track all of this data and provide a search engine to make the data discoverable and easily reusable. From AI to distributed systems, we are using cutting-edge technologies to make data useful.

Azure Data Factory – Managed, hybrid data integration service at scale

Create, schedule, and manage your data integration at scale. Work with data wherever it lives, in the cloud or on-premises, with enterprise-grade security. Accelerate your data integration projects by taking advantage of over 70 available data source connectors. Use the graphical user interface to build and manage your data pipelines. Transform raw data into finished, shaped data ready for consumption by business intelligence tools or custom applications. Easily lift your SQL Server Integration Services (SSIS) packages to Azure, and let Azure Data Factory manage your resources so you can increase productivity and lower total cost of ownership (TCO).

Azure HDInsight – Managed cluster service for the full spectrum of Hadoop and Spark

Azure HDInsight is a fully-managed cluster service that makes it easy, fast, and cost-effective to process massive amounts of data. Use popular open-source frameworks such as Hadoop, Spark, Hive, LLAP, Kafka, Storm, ML Services, and more. Azure HDInsight enables a broad range of scenarios such as ETL, Data Warehousing, Machine Learning, IoT, and more. A cost-effective service that is powerful and reliable. Pay only for what you use. Create clusters on demand, then scale them up or down. Decoupled compute and storage provide better performance and flexibility.

Azure SQL Database - The intelligent relational cloud database service

The world’s first intelligent database service that learns and adapts with your application, enabling you to dynamically maximize performance with very little effort on your part. Working around the clock to learn, profile, and detect anomalous database activities, Threat Detection identifies potential threats to the database, and like an airplane’s flight data recorder, Query Store collects detailed historical information about all queries, greatly simplifying performance forensics by reducing the time to diagnose and resolve issues.

Adaptive query processing support for Azure SQL Database

Improved Automatic Tuning boosts your Azure SQL Database performance

Query Store: A flight data recorder for your database

Azure SQL Data Warehouse - Fast, flexible, and secure analytics platform

A fast, fully managed, elastic, petabyte-scale cloud data warehouse. Azure SQL Data Warehouse (SQL DW) lets you independently scale compute and storage, while pausing and resuming your data warehouse within minutes through a distributed processing architecture designed for the cloud. Seamlessly create your hub for analytics along with native connectivity with data integration and visualization services, all while using your existing SQL and BI skills. Through PolyBase, Azure SQL Data Warehouse brings data lake data into the warehouse with support for rich queries over files and unstructured data.

Blazing fast data warehousing with Azure SQL Data Warehouse

Azure SQL Database Threat Detection, your built-in security expert

Azure SQL Data Warehouse now generally available in all Azure regions worldwide

Azure Stream Analytics - A serverless, real-time analytics service to power intelligent action

Easily develop and run massively parallel real-time analytics on multiple IoT or non-IoT streams of data using a simple SQL-like language. Use custom code for advanced scenarios. With no infrastructure to manage, you can process data on-demand, scale instantly, and only pay per job. Azure Stream Analytics seamlessly integrates with Azure IoT Hub and Azure IoT Suite. Azure Stream Analytics is also available on Azure IoT Edge enabling near-real-time analytical intelligence closer to IoT devices so that they can unlock the full value of device-generated data.

Gray Systems Lab (GSL) and Cloud and Information Services Lab (CISL)

The Azure Data Office of the CTO is the home of the Gray Systems Lab (GSL) and the Cloud and Information Services Lab (CISL). These are applied research groups, employing scientists and engineers focusing on everything data, from SQL Server in the cloud to massive-scale distributed systems for Big Data, to hardware-accelerated data processing to self-healing streaming solutions. The labs were founded in 2008 and 2012 respectively, have since produced 70+ papers in top database and system conferences, have filed numerous patents, and are continuously engaged in deep academic collaborations, through internships and sponsorship of academic projects. This research focus is matched with an equally strong commitment to open-source (with several committers in Apache projects who together contributed over 500K LOC to open-source), and continuous and deep engagement with product teams.

Strong product partnerships provide an invaluable path for innovation to affect the real world—the labs’ innovations today govern hundreds of thousands of servers in our Big Data infrastructure, and ship with several cloud and on-premises products. Microsoft’s cloud-first strategy gives researchers in our group a unique vantage point, where PBs of telemetry data and first-party workloads make every step of our innovation process principled and data-driven. The labs have locations in Microsoft’s Redmond and Silicon Valley campuses, as well as in the UW-Madison campus. To hear more, please contact Carlo Curino at carlo.curino@microsoft.com or Alan Halverson at alanhal@microsoft.com.

Turn your whiteboard sketches to working code in seconds with Sketch2Code

The user interface design process involves a lot of creativity, and it often starts on a whiteboard where designers share ideas. Once a design is drawn, it is usually captured in a photograph and manually translated into a working HTML wireframe that can be tried in a web browser. This takes effort and slows down the design process. What if a design could be refactored on the whiteboard and the browser reflected the changes instantly? By the end of the session, the designer, developer, and customer would have a validated prototype. Introducing Sketch2Code, a web-based solution that uses AI to transform a picture of a handwritten user interface design into valid HTML markup.

Let’s look in more detail at how Sketch2Code transforms a handwritten image into HTML.

- First, the user uploads an image through the website.

- A custom vision model predicts what HTML elements are present in the image and their location.

- A handwritten text recognition service reads the text inside the predicted elements.

- A layout algorithm uses the spatial information from all the bounding boxes of the predicted elements to generate a grid structure that accommodates all.

- An HTML generation engine uses all of this information to generate HTML markup that reflects the result.
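The layout and HTML generation steps above can be sketched in a few lines of Python. This is not the Sketch2Code implementation: the `Element` type, the element kinds (`button`, `textbox`, `label`), the row-grouping rule (boxes whose vertical spans overlap share a row), and the generated markup are all hypothetical stand-ins for what the custom vision and text recognition models would return.

```python
from dataclasses import dataclass
from html import escape


@dataclass
class Element:
    kind: str       # predicted element type (hypothetical labels)
    x: int          # bounding box, in pixels
    y: int
    w: int
    h: int
    text: str = ""  # text read by the handwriting recognizer


def group_rows(elements: list[Element]) -> list[list[Element]]:
    """Layout step: boxes whose vertical spans overlap go in the same row."""
    rows: list[list[Element]] = []
    for el in sorted(elements, key=lambda e: e.y):
        if rows:
            row = rows[-1]
            bottom = max(e.y + e.h for e in row)
            if el.y < bottom:  # starts before the current row ends
                row.append(el)
                continue
        rows.append([el])
    # Within a row, order elements left to right.
    return [sorted(row, key=lambda e: e.x) for row in rows]


RENDERERS = {
    "button": lambda t: f"<button>{escape(t)}</button>",
    "textbox": lambda t: f'<input type="text" placeholder="{escape(t)}">',
    "label": lambda t: f"<span>{escape(t)}</span>",
}


def to_html(elements: list[Element]) -> str:
    """HTML generation step: one div per row, one tag per predicted element."""
    lines = ['<div class="container">']
    for row in group_rows(elements):
        cells = "".join(RENDERERS[e.kind](e.text) for e in row)
        lines.append(f'  <div class="row">{cells}</div>')
    lines.append("</div>")
    return "\n".join(lines)


sketch = [
    Element("label", 10, 10, 100, 20, "Name"),
    Element("textbox", 10, 100, 150, 30, "Your name"),
    Element("button", 200, 105, 80, 30, "Send"),
]
print(to_html(sketch))
```

Here the text box and the button overlap vertically, so they land in the same row, ordered left to right. A real layout algorithm would also need to handle columns, nesting, and alignment.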

Below is the application workflow:

The Sketch2Code uses the following elements:

- A Microsoft Custom Vision Model: This model has been trained on images of different handwritten designs, tagged with the most common HTML elements, such as buttons, text boxes, and images.

- A Microsoft Computer Vision Service: Used to identify the text written inside a design element.

- An Azure Blob Storage: All steps involved in the HTML generation process are stored, including the original image, prediction results and layout grouping information.

- An Azure Function: Serves as the backend entry point that coordinates the generation process by interacting with all the services.

- An Azure website: A user front end for uploading a new design and viewing the generated HTML results.

The above elements form the architecture as follows:

You can find the code, solution development process, and all other details on GitHub. Sketch2Code is developed in collaboration with Kabel and Spike Techniques.

We hope this post helps you get started with AI and motivates you to become an AI developer.

Tara

ASP.NET Core 2.2.0-preview1: Endpoint Routing

Endpoint Routing in 2.2

What is it?

We’re making a big investment in routing starting in 2.2 to make it interoperate more seamlessly with middleware. For 2.2, this will start with a few changes to the routing model and some minor features. In 3.0, the plan is to introduce a model where routing and middleware operate together naturally. This post will focus on the 2.2 improvements; we’ll discuss 3.0 further in the future.

So, without further ado, here are some changes coming to routing in 2.2.

How to use it?

The new routing features will be on by default for 2.2 applications using MVC. UseMvc and related methods with the 2.2 compatibility version will enable the new ‘Endpoint Routing’ feature set. Existing conventional routes (using MapRoute) or attribute routes will be mapped into the new system.

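using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.Mvc;
using Microsoft.Extensions.DependencyInjection;

public void ConfigureServices(IServiceCollection services)
{
    // ...
    services.AddMvc().SetCompatibilityVersion(CompatibilityVersion.Version_2_2);
}

public void Configure(IApplicationBuilder app)
{
    // ...
    app.UseMvc();
}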

If you need to specifically revert the new routing features, this can be done by setting an option. We’ve tried to make the new endpoint routing system as backwards compatible as is feasible. Please log issues at https://github.com/aspnet/Routing if you encounter problems.

We don’t plan to provide an experience in 2.2 for using the new features without MVC – our focus for right now is to make sure we can successfully shift MVC applications to the new infrastructure.

Link Generator Service

We’re introducing a new singleton service that will support generating a URL. This new service can be used from middleware, and does not require an HttpContext. For right now the set of things you can link to is limited to MVC actions, but this will expand in 3.0.

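using System.Threading.Tasks;
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.Routing;

public class MyMiddleware
{
    private readonly LinkGenerator _linkGenerator;

    public MyMiddleware(RequestDelegate next, LinkGenerator linkGenerator)
    {
        _linkGenerator = linkGenerator;
    }

    public async Task Invoke(HttpContext httpContext)
    {
        // Generate a URL for the Store/ListProducts MVC action; no IUrlHelper needed.
        var url = _linkGenerator.GenerateLink(new { controller = "Store", action = "ListProducts" });

        httpContext.Response.ContentType = "text/plain";
        await httpContext.Response.WriteAsync($"Go to {url} to see some cool stuff.");
    }
}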

This looks a lot like MVC’s link generation support (IUrlHelper) for now — but it’s usable anywhere in your application. We plan to expand the set of things that are possible during 2.2.

Performance Improvements

One of the biggest reasons for us to revisit routing in 2.2 is to improve the performance of routing and MVC’s action selection.

We still have more work to do, but the results so far are promising:

This chart shows the trend of Requests per Second (RPS) for our MVC implementation of the TechEmpower plaintext benchmark. We’ve improved the RPS of this benchmark by about 16%, from 445kRPS to 515kRPS.

To test more involved scenarios, we’ve used the Swagger/OpenAPI files published by Azure and GitHub to build code-generated routing benchmarks. The Azure API benchmark has 5160 distinct HTTP endpoints, and the GitHub benchmark has 243. The new routing system is significantly faster at processing the route tables of these real-world APIs than anything we’ve had before. In particular, the MVC features that select actions, such as matching on HTTP methods and [Consumes(...)], are significantly less costly.

Improvements to link generation behavior for attribute routing

We’re also using this opportunity to revisit some of the behaviors that users find confusing when using attribute routing. Razor Pages is based on MVC’s attribute routing infrastructure and so many new users have become familiar with these problems lately. Inside the team, we refer to this feature as ‘route value invalidation’.

Without getting into too many details, conventional routing always invalidates extra route values when linking to another action. Attribute routing didn’t have this behavior in the past. This can lead to mistakes when linking to another action that uses the same route parameter names. Now both forms of routing invalidate values when linking to another action.
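To make the difference concrete, here is a deliberately simplified Python model of route value invalidation. The function `link_values` and its rule are assumptions for illustration, not ASP.NET Core’s actual algorithm: ambient values from the current request are reused only when the link targets the same controller and action; otherwise only the ambient controller and action may fill gaps, and other values such as `id` are dropped.

```python
REQUIRED_KEYS = ("controller", "action")


def link_values(ambient: dict[str, str], explicit: dict[str, str]) -> dict[str, str]:
    """Combine ambient (current request) and explicit (link) route values.

    Simplified, hypothetical rule: ambient values are reused only when the link
    targets the same controller and action as the current request.
    """
    same_target = all(
        explicit.get(k, ambient.get(k)) == ambient.get(k) for k in REQUIRED_KEYS
    )
    merged = dict(explicit)
    # Same action: every ambient value may fill a gap. Different action:
    # only controller/action may, so stale values such as 'id' are dropped.
    keys = ambient.keys() if same_target else [k for k in REQUIRED_KEYS if k in ambient]
    for key in keys:
        merged.setdefault(key, ambient[key])
    return merged


current = {"controller": "Products", "action": "Edit", "id": "5"}
print(link_values(current, {"action": "Details"}))
# {'action': 'Details', 'controller': 'Products'}
```

Linking from the Edit page to Details no longer carries over `id=5` by accident, which is the kind of mistake described above.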

A conceptual understanding

The new routing system is called ‘Endpoint Routing’ because it represents the route table as a set of Endpoints that can be selected by the routing system. If you’ve ever thought about how attribute routing might work in MVC, the above description should not be surprising. The new part is that a bunch of concerns traditionally handled by MVC have been pushed down to a very low level of the routing system. Endpoint routing now processes HTTP methods, [Consumes(...)], versioning, and other policies that used to be part of MVC’s action selection process.

In contrast, the existing routing system models the application as a list of ‘Routes’ that need to be processed in order. What each route does is a black box to the routing system: you have to run the route to see if it will match.

To make MVC’s conventional routing work, we flatten the list of actions multiplied by the number of routes into a list of endpoints. This flattening allows us to process all of MVC’s requirements very efficiently inside the routing system.
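A rough Python sketch of that flattening step, assuming simple templates with `{controller}` and `{action}` placeholders. The `Action` and `Endpoint` types and the substitution rule are illustrative assumptions; real conventional routes also carry defaults and constraints, which this ignores.

```python
from itertools import product
from typing import NamedTuple


class Action(NamedTuple):
    controller: str
    action: str


class Endpoint(NamedTuple):
    template: str  # route pattern with controller/action filled in
    action: Action


def flatten(routes: list[str], actions: list[Action]) -> list[Endpoint]:
    """Expand every (route, action) pair into one endpoint (hypothetical sketch)."""
    endpoints = []
    for route, act in product(routes, actions):
        template = (route.replace("{controller}", act.controller)
                         .replace("{action}", act.action))
        endpoints.append(Endpoint(template, act))
    return endpoints


routes = ["{controller}/{action}/{id?}", "api/{controller}/{action}"]
actions = [Action("Home", "Index"), Action("Store", "ListProducts")]
for ep in flatten(routes, actions):
    print(ep.template)
```

Two routes and two actions yield four endpoints, each pinned to a single action, so the router no longer has to run a route to find out which action it might reach.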

The list of endpoints gets compiled into a tree that’s easy for us to walk efficiently. This is similar to what attribute routing does today but using a different algorithm and much lower complexity. Since routing builds a graph based on the endpoints, this means that the complexity of the tree scales very directly with your usage of features. We’re confident that we can scale up this design nicely while retaining the pay-for-play characteristics.
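The idea of compiling endpoints into a tree that is cheap to walk can be illustrated with a small segment trie in Python. This is a hypothetical sketch, not the data structure ASP.NET Core uses: literal segments are tried before parameters (with backtracking), literal matching is case-insensitive, and, as a simplification, each parameter position keeps the first parameter name it sees.

```python
from typing import Optional


class Node:
    """One path-segment position in the tree."""

    def __init__(self) -> None:
        self.literals: dict[str, "Node"] = {}  # literal segment -> child
        self.param: Optional["Node"] = None     # child for a {parameter}
        self.param_name: Optional[str] = None
        self.endpoint: Optional[str] = None     # endpoint ending here, if any


def build_tree(endpoints: dict[str, str]) -> Node:
    """Compile templates such as 'products/{id}' into a segment tree."""
    root = Node()
    for template, name in endpoints.items():
        node = root
        for seg in template.strip("/").split("/"):
            if seg.startswith("{") and seg.endswith("}"):
                if node.param is None:
                    node.param = Node()
                    node.param_name = seg[1:-1]
                node = node.param
            else:
                node = node.literals.setdefault(seg.lower(), Node())
        node.endpoint = name
    return root


def match(root: Node, path: str) -> Optional[tuple[str, dict[str, str]]]:
    """Walk the tree, trying literals before parameters; backtrack on failure."""

    def walk(node: Node, segs: list[str], values: dict[str, str]):
        if not segs:
            return (node.endpoint, values) if node.endpoint else None
        head, rest = segs[0], segs[1:]
        child = node.literals.get(head.lower())
        if child is not None:
            found = walk(child, rest, values)
            if found:
                return found
        if node.param is not None:
            return walk(node.param, rest, {**values, node.param_name: head})
        return None

    return walk(root, path.strip("/").split("/"), {})


root = build_tree({"products": "List", "products/{id}": "Details",
                   "products/featured": "Featured"})
print(match(root, "/products/42"))  # ('Details', {'id': '42'})
```

The cost of a lookup depends on the depth of the path and on how many templates share a prefix, rather than on trying every route in order.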

New round-tripping route parameter syntax

We are introducing a new catch-all parameter syntax {**myparametername}. During link generation, the routing system will encode all the content in the value captured by this parameter except the forward slashes.

Note that the old parameter syntax {*myparametername} will continue to work as it did before. Examples:

- For a route defined using the old parameter syntax, /search/{*page}, a call to Url.Action(new { page = "admin/products" }) would generate the link /search/admin%2Fproducts (notice that the forward slash is encoded).

- For a route defined using the new parameter syntax, /search/{**page}, a call to Url.Action(new { page = "admin/products" }) would generate the link /search/admin/products.
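The encoding difference between the two catch-all forms can be approximated in Python with `urllib.parse.quote`. `generate_link` is a hypothetical helper, and Python’s percent-encoding rules only approximate ASP.NET Core’s encoder; the point is that `{*page}` encodes the slash while `{**page}` keeps it.

```python
from urllib.parse import quote


def generate_link(template: str, values: dict[str, str]) -> str:
    """Fill a route template from values (hypothetical sketch).

    '{*name}'  percent-encodes everything, including '/'.
    '{**name}' encodes everything except '/', so the value round-trips
               as multiple path segments.
    """
    out = []
    for seg in template.strip("/").split("/"):
        if seg.startswith("{**") and seg.endswith("}"):
            out.append(quote(values[seg[3:-1]], safe="/"))
        elif seg.startswith("{*") and seg.endswith("}"):
            out.append(quote(values[seg[2:-1]], safe=""))
        elif seg.startswith("{") and seg.endswith("}"):
            out.append(quote(values[seg[1:-1]], safe=""))
        else:
            out.append(seg)
    return "/" + "/".join(out)


print(generate_link("/search/{*page}", {"page": "admin/products"}))   # /search/admin%2Fproducts
print(generate_link("/search/{**page}", {"page": "admin/products"}))  # /search/admin/products
```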

What is coming next?

Expect more refinement and polish on the LinkGenerator API in the next preview. We want to make sure that this new API will support a variety of scenarios for the foreseeable future.

How can you help?

There are a few areas where you can provide useful feedback during this preview. We’re interested in any thoughts you have, of course, but these are a few specific things we’d like opinions on. The best place to provide feedback is by opening issues at https://github.com/aspnet/Routing.

What are you using IRouter for? The ‘Endpoint Routing’ system doesn’t support IRouter-based extensibility, including inheriting from Route. We want to know what you’re using IRouter for today so we can figure out how to accomplish those things in the future.

What are you using IActionConstraint for? ‘Endpoint Routing’ supports IActionConstraint-based extensibility from MVC, but we’re trying to find better ways to accomplish these tasks.

What are your ‘most wanted’ issues from Routing? We’ve revisited a bunch of old closed bugs and brought back a few for reconsideration. If you feel like there are missing details or bugs in routing that we should consider please let us know.

Caveats and notes

We’ve worked to make the new routing system backwards compatible where possible. If you run into issues we’d love for you to report them at https://github.com/aspnet/Routing.

DataTokens are not supported in 2.2.0-preview1. We plan to address this for the next preview.

By nature, the new routing system does not support IRouter-based extensibility.

Generating links inside MVC to conventionally routed actions that don’t yet exist will fail (resulting in the empty string). This is in contrast to the current MVC behavior where linking usually succeeds even if the action being linked hasn’t been defined yet.

We know that the performance of link generation will be bad in 2.2.0-preview1. We worked hard to get the API definitions in so that you could try it out, and ignored performance. Expect the performance of URL generation to improve significantly for the next preview.

Endpoint routing does not support WebApiCompatShim. You must use the 2.1 compatibility switch to continue using the compat shim.

TypeScript and Babel 7

Over a year ago, we set out to find the biggest difficulties users were running into with TypeScript, and we found that a common theme among Babel users was that getting TypeScript set up was just too hard. The reasons often varied, but for a lot of developers, rewiring a build that’s already working can be a daunting task.

Babel is a fantastic tool with a vibrant ecosystem that serves millions of developers by transforming the latest JavaScript features to older runtimes and browsers; but it doesn’t do type-checking, which our team believes can bring that experience to another level. While TypeScript itself can do both, we wanted to make it easier to get that experience without forcing users to switch from Babel.

That’s why over the past year we’ve collaborated with the Babel team, and today we’re happy to jointly announce that Babel 7 now ships with TypeScript support!

How do I use it?