This week at Microsoft Ignite 2018, we are excited to announce eight new features in Azure Stream Analytics (ASA). These new features include:

- Support for query extensibility with C# custom code in ASA jobs running on Azure IoT Edge.

- Custom de-serializers in ASA jobs running on Azure IoT Edge.

- Live data testing in Visual Studio.

- High throughput output to SQL.

- ML based Anomaly Detection on IoT Edge.

- Managed Identities for Azure Resources (formerly MSI) based authentication for egress to Azure Data Lake Storage Gen 1.

- Blob output partitioning by custom date/time formats.

- User defined custom re-partition count.

The features that are generally available and the ones in public preview will start rolling out imminently. For early access to private preview features, please use our sign-up form.

Also, if you are attending the Microsoft Ignite conference this week, please attend our session BRK3199 to learn more about these features and see several of them in action.

General availability features

Parallel write operations to Azure SQL

Azure Stream Analytics now supports high-performance, efficient write operations to Azure SQL DB and Azure SQL Data Warehouse, helping customers achieve four to five times higher throughput than was previously possible. To achieve fully parallel topologies, ASA will transition SQL writes from serial to parallel operations while also allowing batch size customization. Read "Understand outputs from Azure Stream Analytics" for more details.
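To make the idea of parallel, batched writes concrete, here is a minimal Python sketch. It is not how the service is implemented: `write_partitions`, `batches`, and the `write_batch` callback are hypothetical names, and the callback stands in for whatever actually inserts a batch of rows into SQL.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List, Sequence


def batches(rows: Sequence[dict], batch_size: int) -> Iterable[List[dict]]:
    """Yield consecutive slices of rows, each at most batch_size long."""
    for start in range(0, len(rows), batch_size):
        yield list(rows[start:start + batch_size])


def write_partitions(
    partitions: Sequence[Sequence[dict]],
    write_batch: Callable[[List[dict]], None],
    batch_size: int,
) -> int:
    """Write every partition on its own worker thread, one batch at a time.

    write_batch is a hypothetical sink (for example, a bulk insert).
    Returns the total number of rows handed to the sink.
    """
    def write_one(rows: Sequence[dict]) -> int:
        count = 0
        for batch in batches(rows, batch_size):
            write_batch(batch)
            count += len(batch)
        return count

    # One worker per partition, so partitions no longer wait on each other.
    with ThreadPoolExecutor(max_workers=max(1, len(partitions))) as pool:
        return sum(pool.map(write_one, partitions))
```

In this sketch, a larger `batch_size` means fewer round trips per partition, while the number of partitions sets how many writers run concurrently.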

Configuring high-throughput write operations to SQL

Public previews

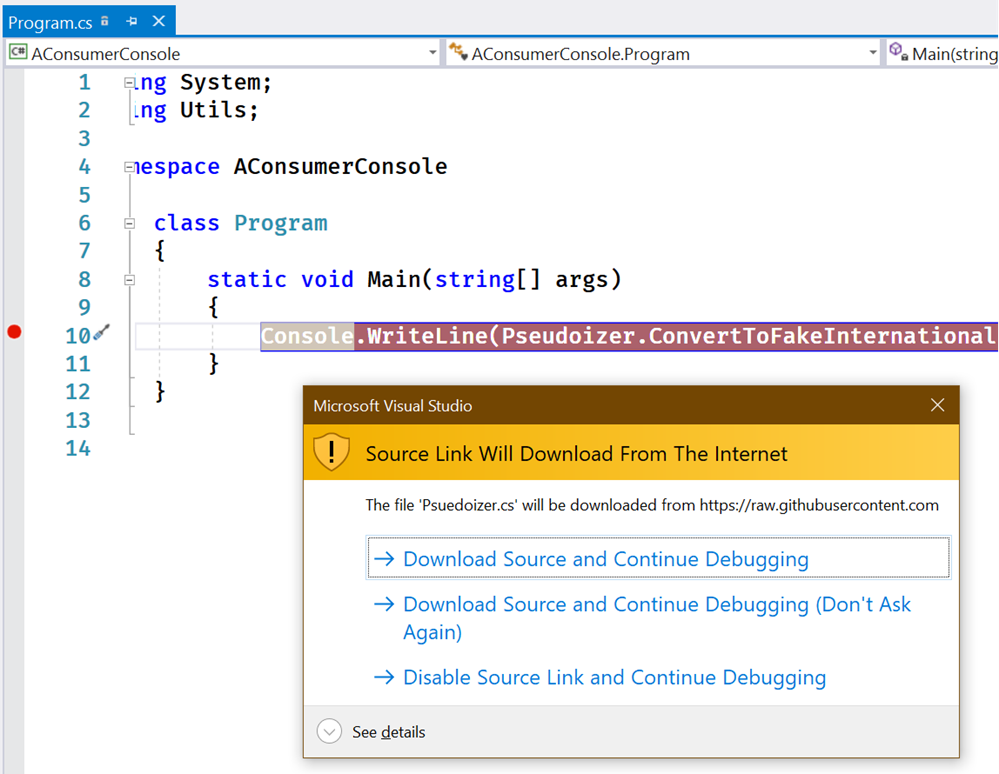

Query extensibility with C# UDF on Azure IoT Edge

Azure Stream Analytics offers a SQL-like query language for performing transformations and computations over streams of events. Though there are many powerful built-in functions in the currently supported SQL language, there are instances where a SQL-like language doesn't provide enough flexibility or tooling to tackle complex scenarios.

Developers creating Stream Analytics modules for Azure IoT Edge can now write custom C# functions and invoke them directly in the query through User Defined Functions (UDFs). This enables scenarios such as complex math calculations, importing custom ML models using ML.NET, and programming custom data imputation logic. A full-fidelity authoring experience for these functions is available in Visual Studio. You can install the latest version of Azure Stream Analytics tools for Visual Studio.

Find more details about this feature in our documentation.

Definition of the C# UDF in Visual Studio

Calling the C# UDF from ASA Query

Output partitioning to Azure Blob Storage by custom date and time formats

Azure Stream Analytics users can now partition output to Azure Blob storage based on custom date and time formats.

This feature greatly improves downstream data-processing workflows by allowing fine-grained control over the blob output, especially for scenarios such as dashboarding and reporting. In addition, partitioning by custom date and time formats enables stronger alignment with downstream Hive-supported formats and conventions when the output is consumed by services such as Azure HDInsight or Azure Databricks. Read "Understand outputs from Azure Stream Analytics" for more details.
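As an illustration of what a custom date and time path pattern produces, the Python sketch below expands tokens of the form `{datetime:yyyy}` into a blob path. The token syntax is assumed here rather than taken from this post, the helper `expand_path_pattern` is hypothetical, and only a small subset of specifiers is handled.

```python
import re
from datetime import datetime, timezone

# Map .NET-style specifiers to strftime codes.
# Assumption: this subset is for illustration only, not the full supported list.
_SPECIFIERS = {
    "yyyy": "%Y",
    "MM": "%m",
    "dd": "%d",
    "HH": "%H",
    "mm": "%M",
    "ss": "%S",
}

_TOKEN = re.compile(r"\{datetime:([A-Za-z]+)\}")


def expand_path_pattern(pattern: str, event_time: datetime) -> str:
    """Replace each {datetime:<specifier>} token with the formatted time."""
    def replace(match: re.Match) -> str:
        spec = match.group(1)
        if spec not in _SPECIFIERS:
            raise ValueError(f"unsupported specifier: {spec}")
        return event_time.strftime(_SPECIFIERS[spec])

    return _TOKEN.sub(replace, pattern)


if __name__ == "__main__":
    ts = datetime(2018, 9, 24, 7, 5, 0, tzinfo=timezone.utc)
    # Hive-style layout that downstream engines can treat as partitions.
    print(expand_path_pattern(
        "logs/year={datetime:yyyy}/month={datetime:MM}/day={datetime:dd}", ts))
```

Run as a script, this prints `logs/year=2018/month=09/day=24`, which matches the `key=value` directory convention that Hive-style readers expect.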

Partition by custom date or time on Azure portal

The ability to partition output to Azure Blob storage by a custom field or attribute continues to be in private preview.

Setting partition by custom attribute on Azure portal

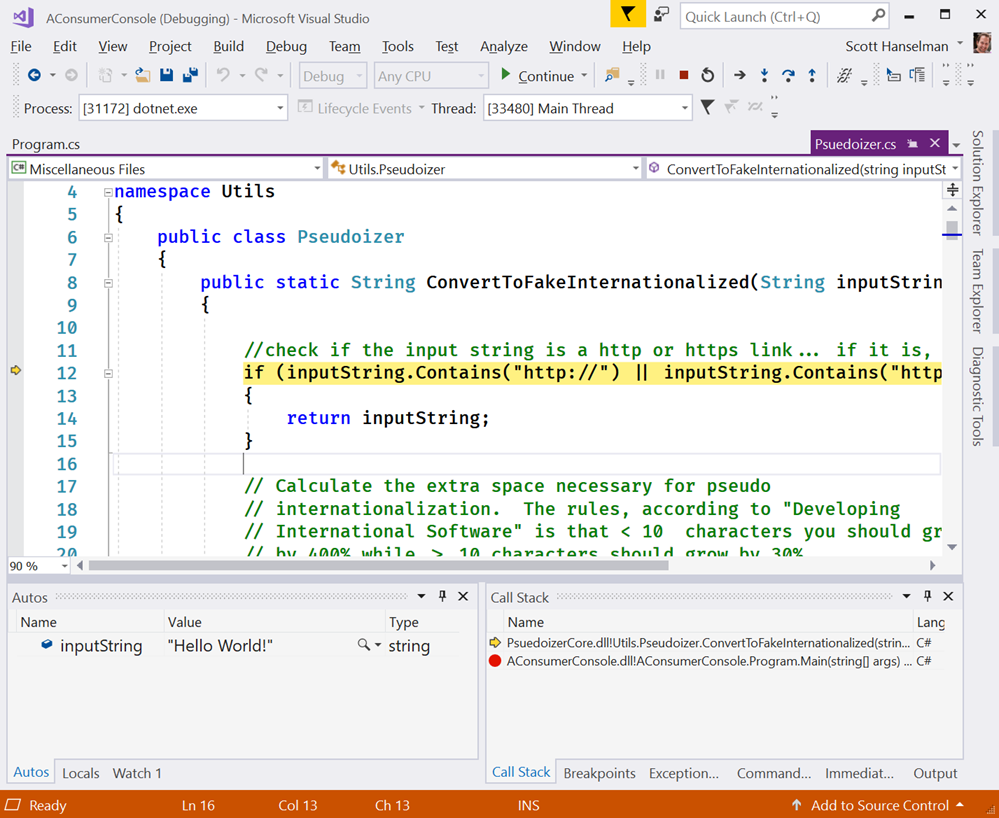

Live data testing in Visual Studio

Available immediately, Visual Studio tooling for Azure Stream Analytics further enhances the local testing capability to help users test their queries against live data or event streams from cloud sources such as Azure Event Hubs or IoT Hub. This includes full support for Stream Analytics time policies in the local, simulated Visual Studio IDE environment.

This significantly shortens development cycles, as developers no longer need to start and stop their jobs to run test cycles. This feature also provides a fluent experience for checking the live output data while the query is running. You can install the latest version of Azure Stream Analytics tools for Visual Studio.

Live Data Testing in Visual Studio IDE

User defined custom re-partition count

We are extending our SQL language to optionally enable users to specify the number of partitions of a stream when performing repartitioning. This will enable better performance tuning for scenarios where the partition key can't be changed due to upstream constraints, where the output has a fixed number of partitions, or where partitioned processing is needed to scale out to a larger processing load. Once repartitioned, each partition is processed independently of the others.

With this new language feature, query developers can simply add the newly introduced keyword INTO after the PARTITION BY clause. For example, the query below reads from the input stream (regardless of whether it is naturally partitioned), repartitions the stream into 10 partitions based on the DeviceID dimension, and flushes the data to the output.

SELECT * INTO [output] FROM [input] PARTITION BY DeviceID INTO 10
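Conceptually, repartitioning by a key into a fixed number of partitions can be pictured as hashing the key and taking the result modulo the partition count. The Python sketch below shows that idea only. The function names are hypothetical, and CRC32 is a stand-in for whatever hash the service actually uses, which this post does not describe.

```python
import zlib
from collections import defaultdict
from typing import Dict, Iterable, List


def partition_for(key: str, partition_count: int) -> int:
    """Map a key to a partition index in [0, partition_count).

    CRC32 is a stand-in stable hash chosen for illustration only.
    """
    return zlib.crc32(key.encode("utf-8")) % partition_count


def repartition(events: Iterable[dict], key_field: str,
                partition_count: int) -> Dict[int, List[dict]]:
    """Group events into buckets by the hash of key_field."""
    partitions: Dict[int, List[dict]] = defaultdict(list)
    for event in events:
        index = partition_for(str(event[key_field]), partition_count)
        partitions[index].append(event)
    return partitions


if __name__ == "__main__":
    events = [{"DeviceID": f"dev-{i % 25}", "temp": 20 + i} for i in range(100)]
    parts = repartition(events, "DeviceID", 10)
    # Every event for a given DeviceID lands in the same partition,
    # so each partition can be processed independently of the others.
    for index in sorted(parts):
        print(index, len(parts[index]))
```

Because the mapping depends only on the key, all events for one device stay together, which is what allows each partition to be processed in isolation.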

Private previews – Sign up for previews

Built-in models for Anomaly Detection on Azure IoT Edge and cloud

By providing ready-to-use ML models right within our SQL-like language, we empower every developer to easily add Anomaly Detection capabilities to their ASA jobs, without requiring them to develop and train their own ML models. In effect, this reduces the complexity of building ML models to a single function call.

Currently, this feature is available for private preview in the cloud, and we are happy to announce that these ML functions for built-in Anomaly Detection are also being made available for ASA modules running on the Azure IoT Edge runtime. This will help customers who demand sub-second latencies, or who operate in scenarios where connectivity to the cloud is unreliable or expensive.

In this latest round of enhancements, we have been able to reduce the number of functions from five to two while still detecting all five kinds of anomalies: spike, dip, slow positive increase, slow negative decrease, and bi-level change. Also, our tests are showing a remarkable five to ten times improvement in performance.

Sedgwick is a global provider of technology-enabled risk, benefits, and integrated business solutions that has been engaged with us as an early adopter of this feature.

“Sedgwick has been working directly with Stream Analytics engineering team to explore and operationalize compelling scenarios for Anomaly Detection using built-in functions in the Stream Analytics Query language. We are convinced this feature holds a lot of potential for our current and future scenarios.”

– Krishna Nagalapadi, Software Architect, Sedgwick Labs.

Custom de-serializers in Stream Analytics module on Azure IoT Edge

Today, Azure Stream Analytics supports input events in JSON, CSV, or AVRO data formats out of the box. However, millions of IoT devices are optimized to generate data in other formats that encode structured data more efficiently while remaining extensible.

Going forward, IoT devices sending data in any format, be it Parquet, Protobuf, XML, or any binary format, can leverage the power of Azure Stream Analytics. Developers can now implement custom de-serializers in C#, which can then be used to de-serialize events received by Azure Stream Analytics.

Configuring input with a custom serialization format

Managed identities for Azure resources (formerly MSI) based authentication for egress to Azure Data Lake Storage Gen 1

Users of Azure Stream Analytics will now be able to operationalize their real-time pipelines with MSI based authentication while writing to Azure Data Lake Storage Gen 1.

Previously, users depended on Azure Active Directory based authentication for this purpose, which had several limitations that MSI based authentication addresses. First, users will now be able to automate their Stream Analytics pipelines through PowerShell. Second, long-running jobs can continue without being interrupted periodically for sign-in renewals. Finally, this makes the user experience consistent across almost all ingress and egress services that are integrated out-of-the-box with Stream Analytics.

Configuring MSI based authentication to Data Lake Storage

Feedback

Engage with us and get previews of new features by following our Twitter handle @AzureStreaming.

The Azure Stream Analytics team is highly committed to listening to your feedback and letting the user voice dictate our future investments. Please join the conversation and make your voice heard via our UserVoice.