About BITS and downloading and uploading files

Programs nowadays often need to download files and data from the internet – maybe they need new content, new configurations, or the latest updates. The Windows Background Intelligent Transfer Service (BITS) is an easy way for programs to ask Windows to download files from or upload files to a remote HTTP or SMB file server. BITS will handle problems like network outages, expensive networks (when your user is on a cell plan and is roaming), and more.

In this blog post I’ll show how you can easily use BITS from a C# or other .NET language program. To use BITS from C++ using its native COM interface, see the GitHub sample at https://github.com/Microsoft/Windows-classic-samples/tree/master/Samples/BacgroundIntelligenceTransferServicePolicy. Your program can create new downloads from and uploads to HTTP web servers and SMB file servers. Your program can also monitor BITS downloads and uploads. If your program runs as admin, it can monitor all the BITS traffic on a machine; if it runs as a standard user, it can monitor only that user's BITS traffic.

The companion program from this blog post, the BITS Manager program, is available both as source code on GitHub at https://github.com/Microsoft/BITS-Manager and as a ready-to-run executable at https://github.com/Microsoft/BITS-Manager/releases.

![The BITS Manager program]()

What all can BITS do?

The most common use of BITS is to download files from the internet. But dig beneath the covers and you’ll find that the “Intelligent” in BITS is well earned!

BITS is careful about the user’s experience

Many downloads (and uploads) need to make forward progress but also want to be nice to the user and not interfere with the user’s other work. BITS works to make sure that background downloads and uploads don’t happen on costed networks and don’t hurt the user’s foreground experience. It does this by looking at both the computer’s available network bandwidth and information about the local network. For uploads, BITS enables LEDBAT (Low Extra Delay Background Transport) when it’s available; LEDBAT is a congestion control algorithm built into newer versions of Windows and Windows Server. You can also set different priorities for your transfers so that the more important downloads and uploads happen first.

BITS gives you control over transfer operations. For each download or upload you can specify its cost requirements (for example, whether it may transfer on expensive roaming networks) and its priority. You can make a transfer a foreground priority transfer so that it happens right away, or a low priority transfer so that it is extra nice to your user. See https://docs.microsoft.com/en-us/windows/desktop/Bits/best-practices-when-using-bits for BITS best practices.

BITS can be managed by an IT department

An enterprise IT department might have a preference about how much bandwidth to allocate to background transfers at different times of the day or might want to control how long a transfer is allowed to take. BITS has rich Group Policy and MDM policies for just these scenarios. See https://docs.microsoft.com/en-us/windows/client-management/mdm/policy-csp-bits for more details on controlling BITS with MDM and https://docs.microsoft.com/en-us/windows/desktop/Bits/group-policies for the available Group Policies.

Quick start: download a file from the web

To download a file from the web using the BITS Manager sample program, select Jobs > Quick File Download. The file download dialog pops up:

![Quick file download in the BITS Manager sample program.]()

Type in a URL to download. The Local file field is automatically filled in with a suggested file name taken from the last segment of the URL’s path. When you click OK, a BITS job is created, the remote URL and local file are added to the job, and the job is resumed. The job then downloads automatically when conditions allow and is moved to a final state at the end.
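The sample’s dialog code may do this differently, but the idea of deriving a local name from the URL can be sketched as a small, self-contained C# program. SuggestLocalFileName and the download.bin fallback are hypothetical; the sketch only assumes that the last path segment is a reasonable file name.

```csharp
using System;
using System.IO;

// Hypothetical helper: suggest a local file name from the last segment of the
// URL's path, similar in spirit to the Quick File Download dialog.
string SuggestLocalFileName(string url, string folder)
{
    var uri = new Uri(url);
    // For "https://example.com/files/report.pdf", Segments is
    // { "/", "files/", "report.pdf" }; the query string is not included.
    string name = Uri.UnescapeDataString(uri.Segments[uri.Segments.Length - 1]).TrimEnd('/');
    if (name.Length == 0)
    {
        name = "download.bin"; // No usable path segment; arbitrary fallback name.
    }
    // Replace characters that aren't allowed in file names on this OS.
    foreach (char c in Path.GetInvalidFileNameChars())
    {
        name = name.Replace(c, '_');
    }
    return Path.Combine(folder, name);
}

Console.WriteLine(Path.GetFileName(
    SuggestLocalFileName("https://example.com/files/report.pdf?v=2", Path.GetTempPath())));
// report.pdf
```

The result is only a suggestion; as in the dialog, the user should still be able to edit it before the job is created.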

To have your program download a file with BITS, you need to create a BITS manager, create a job, add the URL and the local file names, and then resume the job. Then you need to wait until the file is done transferring and then complete the job. These steps are shown in this snippet.

In addition to this code, the version 1.5 BITSReference DLL has been added to the C# project’s references, and a using directive that maps the BITS alias to BITSReference1_5 has been added to the C# file.

Sample Code

```csharp
private BITS.IBackgroundCopyJob DownloadFile(string URL, string filename)
{
    // The _mgr value is set like this: _mgr = new BITS.BackgroundCopyManager1_5();
    if (_mgr == null)
    {
        return null;
    }

    BITS.GUID jobGuid;
    BITS.IBackgroundCopyJob job;
    _mgr.CreateJob("Quick download", BITS.BG_JOB_TYPE.BG_JOB_TYPE_DOWNLOAD,
        out jobGuid, out job);

    try
    {
        job.AddFile(URL, filename);
    }
    catch (System.Runtime.InteropServices.COMException ex)
    {
        MessageBox.Show(
            String.Format(Properties.Resources.ErrorBitsException,
                ex.HResult,
                ex.Message),
            Properties.Resources.ErrorTitle
        );
        job.Cancel();
        return job;
    }
    catch (System.UnauthorizedAccessException)
    {
        MessageBox.Show(Properties.Resources.ErrorUnauthorizedAccessMessage,
            Properties.Resources.ErrorUnauthorizedAccessTitle);
        job.Cancel();
        return job;
    }
    catch (System.ArgumentException ex)
    {
        MessageBox.Show(
            String.Format(Properties.Resources.ErrorMessage, ex.Message),
            Properties.Resources.ErrorTitle
        );
        job.Cancel();
        return job;
    }

    try
    {
        SetJobProperties(job); // Set job properties as needed

        // Notify flags are bit flags; combine them with a bitwise OR.
        job.SetNotifyFlags(
            (UInt32)BitsNotifyFlags.JOB_TRANSFERRED
            | (UInt32)BitsNotifyFlags.JOB_ERROR);
        job.SetNotifyInterface(this);
        // Will call JobTransferred, JobError, JobModification based on notify flags

        job.Resume();
    }
    catch (System.Runtime.InteropServices.COMException ex)
    {
        MessageBox.Show(
            String.Format(
                Properties.Resources.ErrorBitsException,
                ex.HResult,
                ex.Message),
            Properties.Resources.ErrorTitle
        );
        job.Cancel();
    }

    // Unless there was an error, the job is now running. We can exit
    // and it will continue automatically.
    return job; // Return the job that was created
}

public void JobTransferred(BITS.IBackgroundCopyJob pJob)
{
    pJob.Complete();
}

public void JobError(BITS.IBackgroundCopyJob pJob, BITS.IBackgroundCopyError pError)
{
    pJob.Cancel();
}

public void JobModification(BITS.IBackgroundCopyJob pJob, uint dwReserved)
{
    // JobModification has to exist to satisfy the interface. But unless
    // the call to SetNotifyInterface includes the BG_NOTIFY_JOB_MODIFICATION flag,
    // this method won't be called.
}
```

You have to resume the job at the start because all jobs start off suspended, and you have to complete the job so that BITS removes it from its internal database of jobs. The full life cycle of a BITS job is explained at https://docs.microsoft.com/en-us/windows/desktop/Bits/life-cycle-of-a-bits-job.
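To make that life cycle concrete, here is a minimal, self-contained C# sketch (not part of the sample) that maps each job state, named after the BG_JOB_STATE_* values, to what your program typically does next. NextAction is a hypothetical helper, and the returned strings are descriptions, not API calls.

```csharp
using System;

// Illustrative summary of the BITS job life cycle: given a job state name
// (as in BG_JOB_STATE_*), what should the program do next?
string NextAction(string state) => state switch
{
    "SUSPENDED" => "Resume(): every new job starts suspended",
    "QUEUED" or "CONNECTING" or "TRANSFERRING" => "Wait: BITS is working on the transfer",
    "TRANSIENT_ERROR" => "Wait: BITS retries automatically",
    "TRANSFERRED" => "Complete(): makes the files available under their local names",
    "ERROR" => "GetError(), then fix the problem and Resume(), or Cancel()",
    "ACKNOWLEDGED" or "CANCELLED" => "Nothing: the job is in a final state",
    _ => throw new ArgumentOutOfRangeException(nameof(state), state, "Not a BG_JOB_STATE name"),
};

foreach (var s in new[] { "SUSPENDED", "TRANSFERRING", "TRANSFERRED", "ACKNOWLEDGED" })
{
    Console.WriteLine($"{s,-13} -> {NextAction(s)}");
}
```

The two calls your program can't skip are the ones at either end: Resume() to get a new job moving, and Complete() (or Cancel()) to move it into a final state.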

Using BITS from C#

Connecting COM-oriented BITS and .NET

In this sample, the .NET code uses .NET wrappers for the BITS COM interfaces. The wrappers are in the generated BITSReference DLL files. The BITSReference DLL files are created using the MIDL and TLBIMP tools on the BITS IDL (Interface Definition Language) files. The IDL files, MIDL and TLBIMP are all part of the Windows SDK. The steps are fully defined in the BITS documentation at https://docs.microsoft.com/en-us/windows/desktop/Bits/bits-dot-net.

Because the wrapper classes are generated automatically, you can recreate them at any time to pick up the latest updates to the BITS APIs without depending on a third-party wrapper library.

The sample uses several different versions of the BITSReference DLL files. As the numbers increase, more features are available. The 1_5 version is suitable for running on Windows 7 SP1; the 5_0 version is usable in all versions of Windows 10.

If you don’t want to build your own reference DLL file, you can use the ones that were used to build the sample. They are copied over when you install the sample program and will be in the same directory as the sample EXE.

Add the BITSReference DLLs as references

In Visual Studio 2017, in the Solution Explorer:

- Right-click References and click “Add Reference …”

- In the Reference Manager dialog that pops up, click the “Browse…” button on the bottom-right of the dialog.

- In the “Select the files to reference…” file picker that pops up, navigate to the DLL (BITSReference1_5.dll) and click “Add.” The file picker dialog will close.

- In the “Reference Manager” dialog box, the DLL should be added to the list of possible references and will be checked. Click OK to add the reference.

Keep adding until you’ve added all the reference DLLs that you’ll be using.

![Adding the BITSReference DLLs as references]()

Add using directives

In your code it’s best to add a set of using directives to your C# file. Once you do this, switching to a new version of BITS becomes much easier. The sample has four different using directives for different versions of BITS. BITS 1.5 is usable even on Windows 7 machines and has many of the basic BITS features, so that’s a good starting point for your code. The BITS What’s New documentation at https://docs.microsoft.com/en-us/windows/desktop/Bits/what-s-new contains a list of the changes to BITS. In the BITS Manager sample program, BITS4 is used for the HTTP options, BITS5 is used for job options like Dynamic and cost flags, and BITS10_2 is used for the custom HTTP verb setting.

```csharp
// Set up the BITS namespaces
using BITS = BITSReference1_5;
using BITS4 = BITSReference4_0;
using BITS5 = BITSReference5_0;
using BITS10_2 = BITSReference10_2;
```

Make a BITS IBackgroundCopyManager

The BITS IBackgroundCopyManager interface is the universal entry point into all the BITS classes like the BITS jobs and files. In the sample BITS Manager program, a single _mgr object is created when the main window loads in MainWindow.xaml.cs.

```csharp
_mgr = new BITS.BackgroundCopyManager1_5();
```

The _mgr object type is an IBackgroundCopyManager interface; that interface is implemented by the BackgroundCopyManager1_5 class. Each BITS reference DLL version has a manager class whose name includes the version number. For example, the BITS 10.2 reference DLL calls the class BackgroundCopyManager10_2. Only the class names change; the interface names stay the same.

Create a job and add a file to a job

Create a new BITS job by calling the CreateJob() method of the IBackgroundCopyManager interface. CreateJob() takes the job name (it doesn’t have to be unique) and the job type (download, upload, or upload-reply), and returns, as out parameters, the job’s GUID, which is its unique identifier, and a filled-in IBackgroundCopyJob object. All versions of the manager create the original BITS 1.0 version of the job. If you need a newer version of the job object, see the section on “Using newer BITS features” (hint: it’s just a cast).

![Create a new job screen.]()

In the sample code, jobs are created in the MainWindow.xaml.cs file in the OnMenuCreateNewJob() method (it’s called when you use the New Job menu entry) and in the QuickFileDownloadWindow.xaml.cs file. Since we’ve already seen the quick file download code earlier, here’s the code that’s called when you use the “New Job” menu:

```csharp
BITS.GUID jobId;
BITS.IBackgroundCopyJob job;
_mgr.CreateJob(jobName, jobType, out jobId, out job);
try
{
    dlg.SetJobProperties(job);
}
catch (System.Runtime.InteropServices.COMException ex)
{
    // No need to cancel; the job will show up in the job list and
    // will be selected. The user should deal with it as they see fit.
    MessageBox.Show(
        String.Format(Properties.Resources.ErrorBitsException,
            ex.HResult,
            ex.Message),
        Properties.Resources.ErrorTitle
    );
}
RefreshJobList();
```

In the code, the dlg variable is a CreateNewJobWindow dialog that lets you enter the job name and job properties. Once the job is created (with _mgr.CreateJob), the dialog’s SetJobProperties method fills in the job property values. Note that jobId and job must both be passed as out parameters.

Jobs are always created without any files and in a suspended state.

BITS jobs are always per account: when a single user has several programs that all use BITS, enumerating that user’s jobs returns the jobs from all of those programs, not just yours. If you need to write a program that creates some BITS jobs and later modifies them (for example, to complete them), you should keep track of their job GUID values.
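One simple way to keep track of job IDs between runs is to save them to a file. The following is a minimal sketch with hypothetical helpers (SaveJobIds, LoadJobIds); it assumes you have already converted the BITS.GUID returned by CreateJob() into a System.Guid (that conversion isn’t shown). A later run could then look each job up again with the manager’s GetJob() method.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;

// Hypothetical helper: remember the job IDs this program created.
void SaveJobIds(string storePath, IEnumerable<Guid> ids) =>
    File.WriteAllLines(storePath, ids.Select(id => id.ToString("D")));

// Hypothetical helper: read the saved job IDs back; a missing file means none.
List<Guid> LoadJobIds(string storePath)
{
    var ids = new List<Guid>();
    if (!File.Exists(storePath))
    {
        return ids; // Nothing saved yet.
    }
    foreach (string line in File.ReadAllLines(storePath))
    {
        if (Guid.TryParse(line, out Guid id))
        {
            ids.Add(id); // Lines that aren't GUIDs are skipped.
        }
    }
    return ids;
}

string store = Path.Combine(Path.GetTempPath(), "bits-demo-job-ids.txt");
SaveJobIds(store, new[] { Guid.NewGuid() });
Console.WriteLine($"Tracking {LoadJobIds(store).Count} job(s)"); // Tracking 1 job(s)
File.Delete(store);
```

Keeping your own list matters because, as noted above, enumerating jobs also returns jobs that other programs created under the same account.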

To add a file to a job, call job.AddFile(remoteUri, localFile), where remoteUri is a string with the remote URL and localFile is a string with the local file path. To start the transfer, call job.Resume(). BITS will then decide when it’s appropriate to start transferring.

Enumerating Jobs and Files

The BITS interfaces to enumerate (list) jobs and files can be tricky the first time you use them. The key is that you first make an enumerator object and then you keep calling Next() on it until you don’t get a job or file out. The example makes an enumerator for just the user’s BITS jobs; the complete code is below. The _mgr object is an instance of the IBackgroundCopyManager interface.

```csharp
BITS.IEnumBackgroundCopyJobs jobsEnum = null;
uint njobFetched = 0;
BITS.IBackgroundCopyJob job = null;
_mgr.EnumJobs(0, out jobsEnum); // The 0 means get just the user’s jobs
do
{
    jobsEnum.Next(1, out job, ref njobFetched);
    if (njobFetched > 0)
    {
        // Do something with the job
    }
}
while (njobFetched > 0);
```

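Listing files is very similar but uses the job’s EnumFiles() method to get the enumerator. EnumFiles() takes just one out parameter, the IEnumBackgroundCopyFiles enumerator; unlike EnumJobs(), there aren’t any additional settings. Calling Next() on that enumerator returns IBackgroundCopyFile objects.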
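Because jobs and files use the same “call Next() until nothing is fetched” pattern, you might wrap it once in an iterator so callers can use foreach. This is a sketch, not part of the sample: Drain is a hypothetical helper, and the demo uses a fake enumerator so the program runs without BITS. The commented-out lines show how it might be used with the interop calls from the snippet above.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: turns a "fetch one item, report how many were fetched"
// function into an IEnumerable<T>. Enumeration stops when 0 items are fetched.
IEnumerable<T> Drain<T>(Func<(uint fetched, T item)> next)
{
    while (true)
    {
        var (fetched, item) = next();
        if (fetched == 0)
        {
            yield break; // The underlying enumerator is exhausted.
        }
        yield return item;
    }
}

// Possible use with the BITS interop (not runnable here):
//   _mgr.EnumJobs(0, out BITS.IEnumBackgroundCopyJobs jobsEnum);
//   uint n = 0;
//   foreach (var j in Drain(() => { jobsEnum.Next(1, out BITS.IBackgroundCopyJob item, ref n); return (n, item); }))
//   { /* do something with the job */ }

// Demo with a fake enumerator that produces three items.
int remaining = 3;
foreach (string job in Drain(() => remaining > 0 ? (1u, $"job {remaining--}") : (0u, "")))
{
    Console.WriteLine(job); // job 3, job 2, job 1
}
```

The same helper works for files: get the enumerator from the job’s EnumFiles() and call its Next() inside the lambda.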

Be notified when a job is modified or completed

BITS has several ways to let you know when a job is modified or complete. The easiest notification mechanism is to call Job.SetNotifyInterface(IBackgroundCopyCallback callback). The callback object needs to implement the IBackgroundCopyCallback interface; that interface has three methods that you will need to implement.

You must first declare that your class implements the callback:

```csharp
public partial class QuickFileDownloadWindow : Window, BITS.IBackgroundCopyCallback
```

After you make the job, call SetNotifyFlags and SetNotifyInterface:

```csharp
// Notify flags are bit flags; combine them with a bitwise OR.
job.SetNotifyFlags(
    (UInt32)BitsNotifyFlags.JOB_TRANSFERRED
    | (UInt32)BitsNotifyFlags.JOB_ERROR);
job.SetNotifyInterface(this);
// Will call JobTransferred, JobError, JobModification based on the notify flags
```
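Because each notification flag is a single bit, the flags can be combined with a bitwise OR (adding distinct single-bit flags gives the same value, but OR is the conventional choice and can’t double-count a flag listed twice). This self-contained sketch uses the values of the native BG_NOTIFY_* constants; the sample’s BitsNotifyFlags enum presumably wraps the same values, and Has is a hypothetical helper.

```csharp
using System;

// Values of the native BG_NOTIFY_* constants; each one is a single bit.
const uint BG_NOTIFY_JOB_TRANSFERRED = 0x0001;
const uint BG_NOTIFY_JOB_ERROR = 0x0002;
const uint BG_NOTIFY_DISABLE = 0x0004;          // Turns notifications off.
const uint BG_NOTIFY_JOB_MODIFICATION = 0x0008; // Needed for JobModification calls.

uint flags = BG_NOTIFY_JOB_TRANSFERRED | BG_NOTIFY_JOB_ERROR;

// Hypothetical helper: is a given flag set?
bool Has(uint value, uint flag) => (value & flag) != 0;

Console.WriteLine($"flags = 0x{flags:X4}");                  // flags = 0x0003
Console.WriteLine(Has(flags, BG_NOTIFY_JOB_ERROR));          // True
Console.WriteLine(Has(flags, BG_NOTIFY_JOB_MODIFICATION));   // False
```

This is also why JobModification is never called with these flags: BG_NOTIFY_JOB_MODIFICATION isn’t set.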

You will also need to implement the callback. The JobTransferred and JobError callbacks are set up to call the job.Complete and job.Cancel methods to move the job into a final state.

```csharp
public void JobTransferred(BITS.IBackgroundCopyJob pJob)
{
    pJob.Complete();
}

public void JobError(BITS.IBackgroundCopyJob pJob, BITS.IBackgroundCopyError pError)
{
    pJob.Cancel();
}

public void JobModification(BITS.IBackgroundCopyJob pJob, uint dwReserved)
{
    // JobModification has to exist to satisfy the interface. But unless
    // the call to SetNotifyInterface includes the BG_NOTIFY_JOB_MODIFICATION flag,
    // this method won't be called.
}
```

In the sample code, the QuickFileDownloadWindow.xaml.cs file demonstrates how to use the IBackgroundCopyCallback interface. The UI of the sample code is updated by the main polling loop but could have been updated by the callbacks.

You can also register a command line for BITS to execute when the file is transferred. This lets you re-run your program after the transfer is complete. See the BITS IBackgroundCopyJob2::SetNotifyCmdLine() method for more information.
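As we understand the SetNotifyCmdLine() documentation, the parameter string must begin with the program name (the same path you pass as the program), followed by any arguments. This is a hypothetical helper, BuildNotifyParameters, that builds such a string; the program path and the /bits-done switch are made-up examples, and the quoting is deliberately simplified.

```csharp
using System;
using System.Linq;

// Quote an argument that contains a space. Simplified: embedded quotes and
// trailing backslashes are not escaped.
string Quote(string arg) => arg.Contains(" ") ? "\"" + arg + "\"" : arg;

// Hypothetical helper: the program path goes first, then the extra arguments.
string BuildNotifyParameters(string program, params string[] extraArgs) =>
    string.Join(" ", new[] { Quote(program) }.Concat(extraArgs.Select(Quote)));

string updaterPath = @"C:\Program Files\Contoso\Updater.exe";
Console.WriteLine(BuildNotifyParameters(updaterPath, "/bits-done"));
// "C:\Program Files\Contoso\Updater.exe" /bits-done

// Assumed use with the interop (IBackgroundCopyJob2 is a BITS 1.5 interface):
//   var job2 = (BITS.IBackgroundCopyJob2)job;
//   job2.SetNotifyCmdLine(updaterPath, BuildNotifyParameters(updaterPath, "/bits-done"));
```

Your program would then check for the /bits-done switch at startup and complete the job.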

Use a downloaded file

Once you’ve got a file downloaded, the next thing you’ll want to do is use it. The IBackgroundCopyFile GetLocalName(out string) method is how you get the path of the downloaded file. Before you can use the file, the job must be in the ACKNOWLEDGED final state (that is, you’ve called Complete()). If the job is in the CANCELLED final state, the file won’t exist. Alternatively, if you set the job as a high performance job (using the IBackgroundCopyJob5.SetProperty() method), the file will be available while it’s being downloaded, or you can access the temporary file by looking at the result of a call to the file’s GetTemporaryName() method. In all cases, if BITS isn’t done with the file, you must open it with a sharing mode that allows writing (FileShare.Write, for example as part of FileShare.ReadWrite), because BITS may still have it open for writing.
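Here is a minimal sketch of opening such a file for reading while another handle is still writing to it. OpenWhileBitsMayWrite is a hypothetical helper, and the demo simulates BITS with an ordinary FileStream opened for writing.

```csharp
using System;
using System.IO;

// Opens a file for reading while BITS may still have it open for writing.
// FileShare.ReadWrite lets the existing writer keep its handle open.
FileStream OpenWhileBitsMayWrite(string filePath) =>
    new FileStream(filePath, FileMode.Open, FileAccess.Read, FileShare.ReadWrite);

// Demo: simulate BITS holding a write handle while we read.
string demoFile = Path.GetTempFileName();
using (var bitsWriter = new FileStream(demoFile, FileMode.Open, FileAccess.Write, FileShare.ReadWrite))
{
    bitsWriter.Write(new byte[] { 1, 2, 3 }, 0, 3);
    bitsWriter.Flush();
    using (FileStream reader = OpenWhileBitsMayWrite(demoFile))
    {
        Console.WriteLine($"Read access OK, {reader.Length} bytes so far"); // Read access OK, 3 bytes so far
    }
}
File.Delete(demoFile);
```

On Windows, opening the same file with FileShare.Read alone would fail with a sharing violation while the writer’s handle is open.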

In the sample code, the FileDetailViewControl.xaml.cs OnOpenFile() method gets the local name of the file and then uses the .NET System.Diagnostics.Process.Start() method to have the operating system open the file with the appropriate program.

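```csharp
string Filename;
_file.GetLocalName(out Filename);
try
{
    // On .NET Framework, Process.Start(string) opens the file with its associated
    // program. On .NET Core and .NET 5+, use a ProcessStartInfo with
    // UseShellExecute = true to get the same behavior.
    System.Diagnostics.Process.Start(Filename);
}
catch (System.ComponentModel.Win32Exception ex)
{
    MessageBox.Show(String.Format(Properties.Resources.ErrorMessage, ex.Message),
        Properties.Resources.ErrorTitle);
}
```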

Using newer BITS features

You’ll find that you often have a BITS 1.0 version of an object and need a more recent one to use more recent BITS features.

For example, when you make a job object with the IBackgroundCopyManager.CreateJob() method, the resulting job is always a version 1.0 job. To get a newer interface on the same job, use a .NET cast to convert from the older interface type to the newer one. The cast automatically calls COM QueryInterface as appropriate.

In this example, there’s a BITS IBackgroundCopyJob object named “job”, and we want to convert it to an IBackgroundCopyJob5 object named “job5” so that we can call the BITS 5.0 GetProperty method. We just cast to the IBackgroundCopyJob5 type like this:

```csharp
var job5 = job as BITS5.IBackgroundCopyJob5;
```

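The job5 variable will be initialized by .NET by using the correct QueryInterface. It’s important to note that .NET doesn’t know about the real relationship between the BITS interfaces. If you ask for an interface that the underlying BITS object doesn’t support (for example, because the computer has an older version of BITS), the QueryInterface call fails: with the as operator, job5 is set to null, whereas a direct cast would throw an InvalidCastException. Check for null before using the result.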

Try it out yourself today!

There are plenty more BITS features for you to use. Complete details are in the docs.microsoft.com documentation. The BITS Manager sample is available as a downloadable executable from the releases link on GitHub. The complete source code is also available, including the BITSReference DLL files that it uses. Help for using BITS Manager, and an explanation of how the source code is organized, is in the BITS-Manager GitHub wiki.

If you prefer coding in C or C++, the documentation will point you to the existing samples.

Good luck, and happy Background File Transfers!

The post Using Background Intelligent Transfer Service (BITS) from .NET appeared first on Windows Developer Blog.

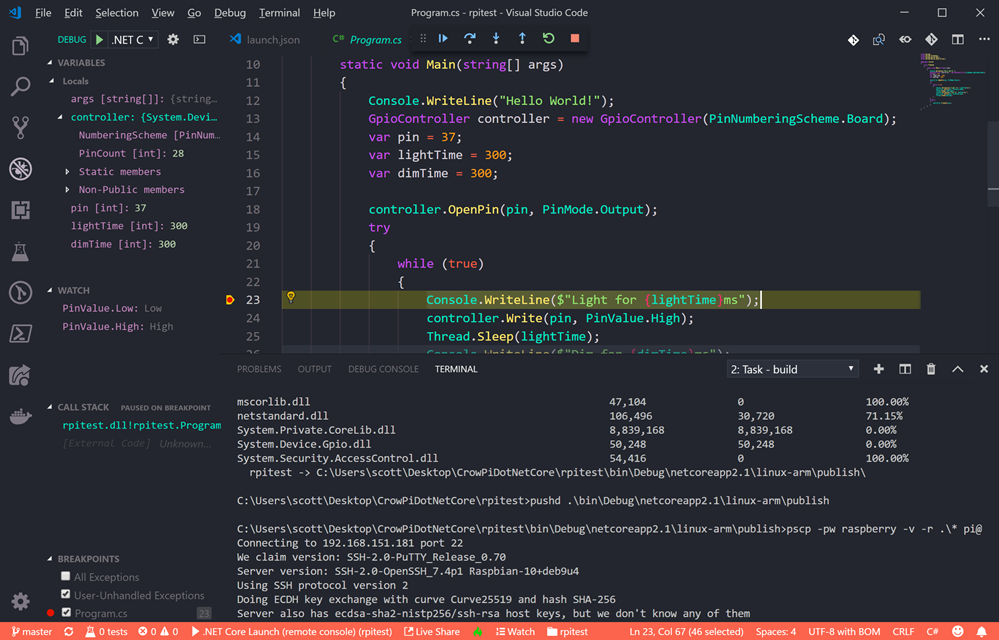

I've written about running .NET Core on Raspberry Pis before, although support was initially limited. Now that Linux ARM32 is a supported distro, what else can we do?

In my

Missing Maps is an open-source collaborative effort founded by the American Red Cross, British Red Cross, Medicine Sans Frontiers, and the
