I was hanging out with Miguel de Icaza in New York a few weeks ago, and he was sharing with me his ongoing love affair with a NoSQL database called Azure DocumentDB. I've looked at it a few times over the last year or so and thought it was cool, but I didn't feel like using it for a few reasons:

- Can't develop locally - I'm often in low-bandwidth or airplane situations

- No MongoDB support - I have existing apps written in Node that use Mongo

- No .NET Core support - I'm doing mostly cross-platform .NET Core apps

Miguel told me to take a closer look. Looks like things have changed! DocumentDB now has:

- Free local DocumentDB Emulator - I asked, and this is the SAME code that runs in Azure, with just a few changes like using the local file system for persistence. It's called an "emulator," but it's essentially the same core engine code. There's no cost and no sign-in for the local DocumentDB Emulator.

- MongoDB protocol support - This is amazing. I literally took an existing Node app, downloaded MongoChef, copied my collection over into Azure using a standard MongoDB connection string, then pointed my app at DocumentDB and it just worked. It's using DocumentDB for storage, though, which gives me:

    - Better latency

    - Turnkey global geo-replication (literally a few clicks)

    - A performance SLA (Service Level Agreement) with <10ms reads and <15ms writes

    - Metrics and resource management like every Azure service

- DocumentDB .NET Core Preview SDK that has feature parity with the .NET Framework SDK.

There are also Node, .NET, Python, Java, and C++ SDKs for DocumentDB, so it's nice for gaming on Unity, web apps, or any .NET app... including Xamarin mobile apps on iOS and Android, which is why Miguel is so hyped about it.

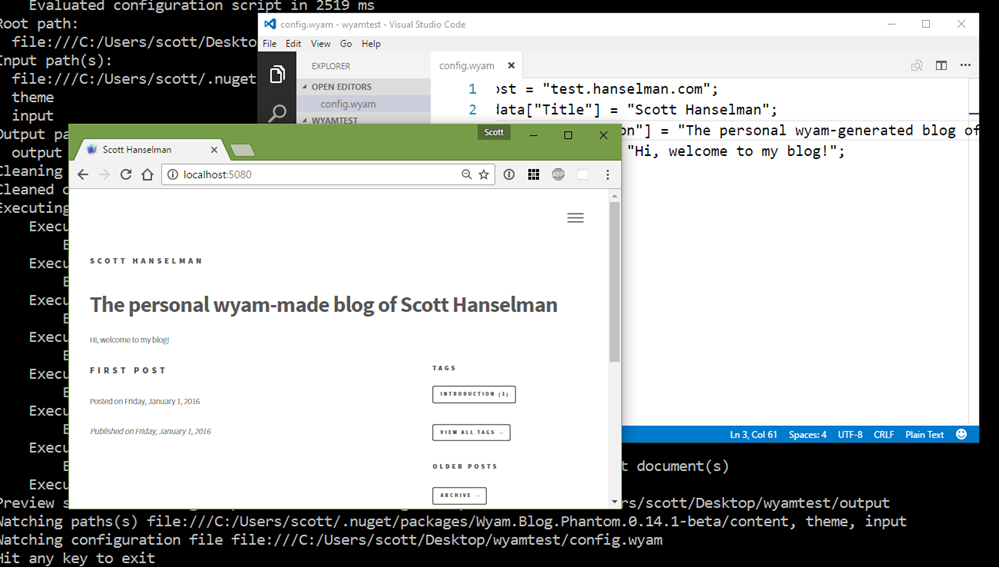

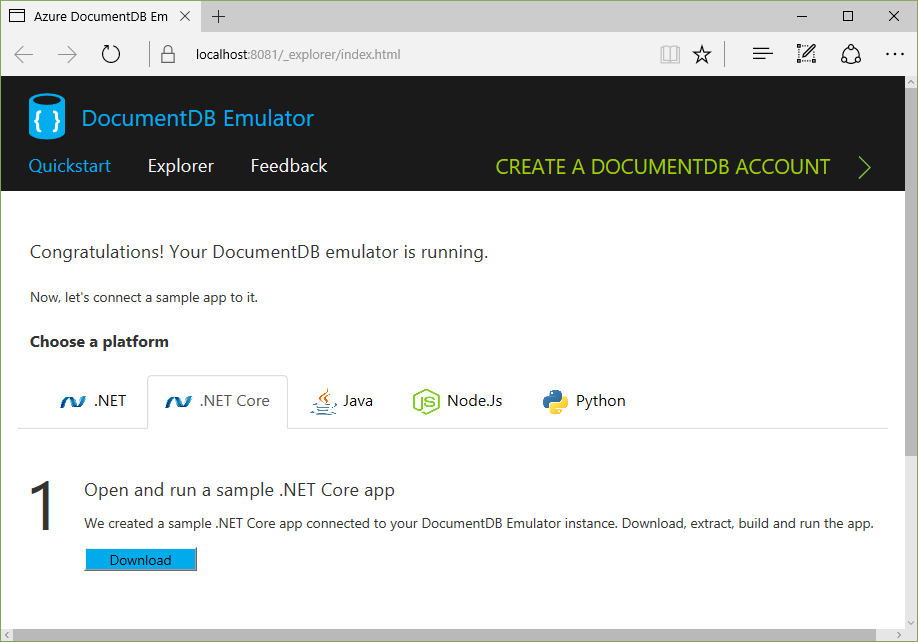

Azure DocumentDB Local Quick Start

I wanted to see how quickly I could get started. I spoke with the PM for the project on Azure Friday, then downloaded and installed the local emulator. The lead on the project said it's Windows-only for now, but they are looking at cross-platform solutions. After installation it opened my web browser to a local web page. I wish more development tools had such clean Quick Starts. There's also a nice quick start on using DocumentDB with ASP.NET MVC.
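Because the emulator runs the same engine as the cloud service, switching between local and Azure can come down to which endpoint (and key) you hand the client. Here's a minimal sketch of that idea, assuming the emulator's default endpoint of `https://localhost:8081/`. The `DocumentDbSettings` class and the `DOCUMENTDB_ENDPOINT` setting name are hypothetical, not part of the SDK or the sample.

```csharp
using System;

// Hypothetical helper: decide whether to talk to the local emulator or a cloud account.
public static class DocumentDbSettings
{
    // Default endpoint of the local DocumentDB Emulator.
    public const string EmulatorEndpoint = "https://localhost:8081/";

    // Hypothetical setting name; use whatever config source your app already has.
    public const string EndpointVariable = "DOCUMENTDB_ENDPOINT";

    // Returns the configured endpoint, or falls back to the local emulator
    // when nothing is configured (handy on an airplane).
    public static Uri ResolveEndpoint(Func<string, string> getSetting)
    {
        string configured = getSetting(EndpointVariable);
        return string.IsNullOrWhiteSpace(configured)
            ? new Uri(EmulatorEndpoint)
            : new Uri(configured);
    }

    // The emulator listens on localhost, so a loopback host means "local".
    public static bool IsEmulator(Uri endpoint) => endpoint.IsLoopback;
}
```

In an app you might call `DocumentDbSettings.ResolveEndpoint(Environment.GetEnvironmentVariable)` and pass the result, along with the matching auth key, to the SDK's `DocumentClient` constructor.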

NOTE: This is a 0.1.0 release. Definitely alpha level. For example, the included sample looks like it had its package name changed at some point, so the dependency didn't line up. I had to change `"Microsoft.Azure.Documents.Client": "0.1.0"` to `"Microsoft.Azure.DocumentDB.Core": "0.1.0-preview"`, so there's a little attention-to-detail issue there. I believe the intent is for stuff to Just Work. ;)

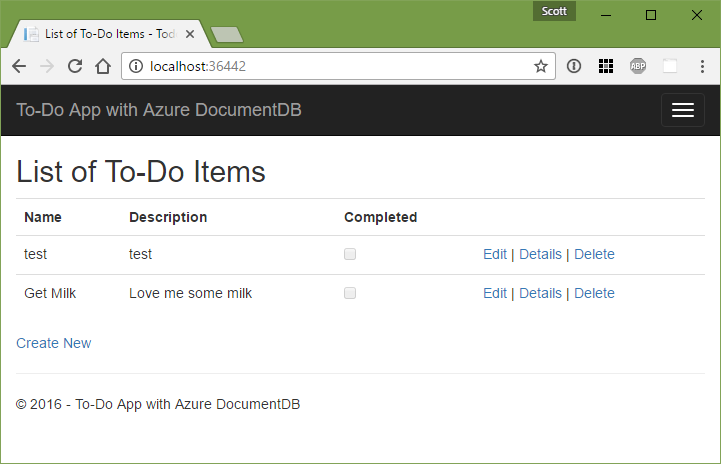

The sample app is a pretty standard "ToDo" app:

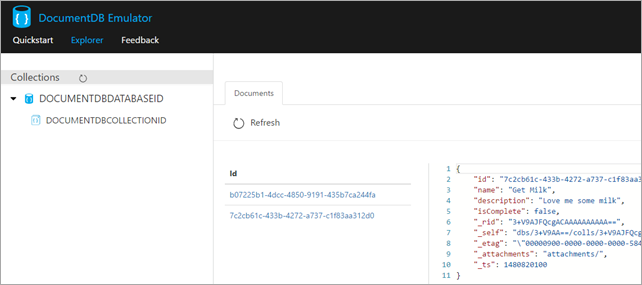

The local emulator also includes a web-based Data Explorer.

A Todo Item is really just a POCO (Plain Old CLR Object) like this:

```csharp
namespace todo.Models
{
    using Newtonsoft.Json;

    public class Item
    {
        [JsonProperty(PropertyName = "id")]
        public string Id { get; set; }

        [JsonProperty(PropertyName = "name")]
        public string Name { get; set; }

        [JsonProperty(PropertyName = "description")]
        public string Description { get; set; }

        [JsonProperty(PropertyName = "isComplete")]
        public bool Completed { get; set; }
    }
}
```

The MVC Controller in the sample uses an underlying repository pattern, so the code is super simple at that layer - as an example:

```csharp
[ActionName("Index")]
public async Task<IActionResult> Index()
{
    // Show only the items that aren't done yet
    var items = await DocumentDBRepository<Item>.GetItemsAsync(d => !d.Completed);
    return View(items);
}

[HttpPost]
[ActionName("Create")]
[ValidateAntiForgeryToken]
public async Task<IActionResult> CreateAsync([Bind("Id,Name,Description,Completed")] Item item)
{
    if (ModelState.IsValid)
    {
        await DocumentDBRepository<Item>.CreateItemAsync(item);
        return RedirectToAction("Index");
    }

    return View(item);
}
```

The repository that abstracts away the complexities isn't that complex itself. It's about 120 lines of code, and really more like 60 when you remove whitespace and curly braces. Half of that is just initialization and setup. It's also generic (`DocumentDBRepository<T>`).
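Since the sample's repository is a set of static methods, one way to exercise controller logic fully offline (no emulator at all) is to put an instance-based stand-in with the same method shapes behind the controller. Here's a minimal hypothetical sketch; `InMemoryRepository<T>` and its id-selector constructor are my own illustration, not part of the sample or the SDK, and using it would mean injecting it into the controller instead of calling the static class.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Linq;
using System.Linq.Expressions;
using System.Threading.Tasks;

// Hypothetical in-memory stand-in that roughly mirrors DocumentDBRepository<T>.
public class InMemoryRepository<T> where T : class
{
    private readonly ConcurrentDictionary<string, T> store = new ConcurrentDictionary<string, T>();
    private readonly Func<T, string> getId;

    // getId tells the repository which property holds the document id.
    public InMemoryRepository(Func<T, string> getId) => this.getId = getId;

    // Like the sample's GetItemAsync: a missing id yields null, not an exception.
    public Task<T> GetItemAsync(string id) =>
        Task.FromResult(store.TryGetValue(id, out T item) ? item : null);

    // Same Expression-based predicate signature; compiled and run over memory here.
    public Task<IEnumerable<T>> GetItemsAsync(Expression<Func<T, bool>> predicate) =>
        Task.FromResult<IEnumerable<T>>(store.Values.Where(predicate.Compile()).ToList());

    // Duplicate ids are rejected in this sketch.
    public Task CreateItemAsync(T item)
    {
        if (!store.TryAdd(getId(item), item))
            throw new InvalidOperationException("An item with this id already exists.");
        return Task.CompletedTask;
    }

    // Replace semantics (an assumption of this sketch): the item must already exist.
    public Task UpdateItemAsync(string id, T item)
    {
        if (!store.ContainsKey(id))
            throw new KeyNotFoundException($"No item with id '{id}'.");
        store[id] = item;
        return Task.CompletedTask;
    }

    public Task DeleteItemAsync(string id)
    {
        store.TryRemove(id, out _);
        return Task.CompletedTask;
    }
}
```

In a unit test you'd create it with something like `new InMemoryRepository<Item>(i => i.Id)`.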

The only thing that stands out to me in this sample is the loop in GetItemsAsync that's hiding potential paging/chunking. It's nice that you can pass in a predicate, but I'll want to add some paging logic for large collections.

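```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Linq.Expressions;
using System.Threading.Tasks;
using Microsoft.Azure.Documents;
using Microsoft.Azure.Documents.Client;
using Microsoft.Azure.Documents.Linq;

// Members of the sample's static DocumentDBRepository<T> class (T : class);
// client, DatabaseId and CollectionId are initialized elsewhere in that class.

public static async Task<T> GetItemAsync(string id)
{
    try
    {
        Document document = await client.ReadDocumentAsync(UriFactory.CreateDocumentUri(DatabaseId, CollectionId, id));
        return (T)(dynamic)document;
    }
    catch (DocumentClientException e)
    {
        // A missing document comes back as 404; treat it as "not found" rather than an error
        if (e.StatusCode == System.Net.HttpStatusCode.NotFound)
        {
            return null;
        }
        else
        {
            throw;
        }
    }
}

public static async Task<IEnumerable<T>> GetItemsAsync(Expression<Func<T, bool>> predicate)
{
    // MaxItemCount = -1 lets the service pick the page size
    IDocumentQuery<T> query = client.CreateDocumentQuery<T>(
            UriFactory.CreateDocumentCollectionUri(DatabaseId, CollectionId),
            new FeedOptions { MaxItemCount = -1 })
        .Where(predicate)
        .AsDocumentQuery();

    // This loop drains every page into one list: the hidden paging mentioned above
    List<T> results = new List<T>();
    while (query.HasMoreResults)
    {
        results.AddRange(await query.ExecuteNextAsync<T>());
    }

    return results;
}

public static async Task<Document> CreateItemAsync(T item)
{
    return await client.CreateDocumentAsync(UriFactory.CreateDocumentCollectionUri(DatabaseId, CollectionId), item);
}

public static async Task<Document> UpdateItemAsync(string id, T item)
{
    return await client.ReplaceDocumentAsync(UriFactory.CreateDocumentUri(DatabaseId, CollectionId, id), item);
}

public static async Task DeleteItemAsync(string id)
{
    await client.DeleteDocumentAsync(UriFactory.CreateDocumentUri(DatabaseId, CollectionId, id));
}
```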
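One way to add that paging is to return one page at a time along with a continuation token, instead of draining every page in GetItemsAsync's loop. With the real SDK I'd expect that to mean setting `FeedOptions.MaxItemCount` to a page size and passing the `ResponseContinuation` from each `FeedResponse<T>` back in through `FeedOptions.RequestContinuation`, but check the SDK docs for your version. To keep this runnable anywhere, the sketch below simulates the pattern over an in-memory list; `Page<T>`, `Paging.GetPageAsync`, and the offset-as-token encoding are hypothetical stand-ins.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;
using System.Threading.Tasks;

// One page of results plus the token for the next page (null when there are no more).
public sealed class Page<T>
{
    public IReadOnlyList<T> Items { get; }
    public string ContinuationToken { get; }

    public Page(IReadOnlyList<T> items, string continuationToken)
    {
        Items = items;
        ContinuationToken = continuationToken;
    }
}

public static class Paging
{
    // Hypothetical page fetcher over an in-memory list. The token is just the next
    // offset as a string; a real service hands back an opaque token instead.
    public static Task<Page<T>> GetPageAsync<T>(
        IReadOnlyList<T> source, Func<T, bool> predicate, int pageSize, string continuationToken)
    {
        if (pageSize <= 0) throw new ArgumentOutOfRangeException(nameof(pageSize));

        int offset = continuationToken == null
            ? 0
            : int.Parse(continuationToken, CultureInfo.InvariantCulture);

        List<T> matches = source.Where(predicate).ToList();
        List<T> items = matches.Skip(offset).Take(pageSize).ToList();

        // Only hand out a token if there's something left to read.
        int next = offset + items.Count;
        string nextToken = next < matches.Count
            ? next.ToString(CultureInfo.InvariantCulture)
            : null;

        return Task.FromResult(new Page<T>(items, nextToken));
    }
}
```

A web app would return `Items` to the client along with the token, and the client sends the token back to ask for the next page, so no single request ever has to load the whole collection.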

I'm going to keep playing with this, but so far I'm pretty happy I can get this far while on an airplane. It's really easy (given I'm preferring NoSQL over SQL lately) to just throw objects at it and store them.
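"Throwing objects at it" works because each object is stored as a JSON document, and the attributes on the sample's `Item` decide the stored property names: `Id` becomes the lowercase `id` that every DocumentDB document carries, and `Completed` is stored as `isComplete`. The sample itself uses Newtonsoft.Json's `[JsonProperty]`; to show the same mapping with no NuGet packages, this sketch swaps in System.Text.Json's `[JsonPropertyName]` (built into .NET Core 3.0 and later). `ItemJson` is a hypothetical helper name.

```csharp
using System.Text.Json;
using System.Text.Json.Serialization;

// Same shape as the sample's Item, with System.Text.Json attributes swapped in.
public class Item
{
    [JsonPropertyName("id")]
    public string Id { get; set; }

    [JsonPropertyName("name")]
    public string Name { get; set; }

    [JsonPropertyName("description")]
    public string Description { get; set; }

    // The C# name and the stored JSON name don't have to match.
    [JsonPropertyName("isComplete")]
    public bool Completed { get; set; }
}

// Hypothetical helper: object -> JSON document text and back again.
public static class ItemJson
{
    public static string ToDocument(Item item) => JsonSerializer.Serialize(item);

    public static Item FromDocument(string json) => JsonSerializer.Deserialize<Item>(json);
}
```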

In another post I'm going to look at RavenDB, another great NoSQL document database that works on .NET Core and is also open source.


© 2016 Scott Hanselman. All rights reserved.